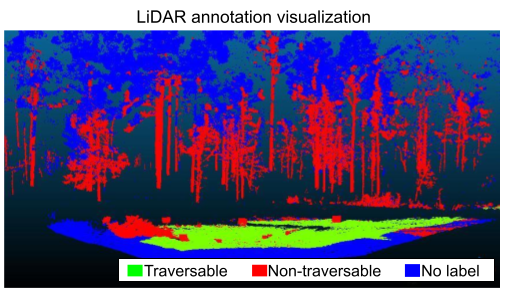

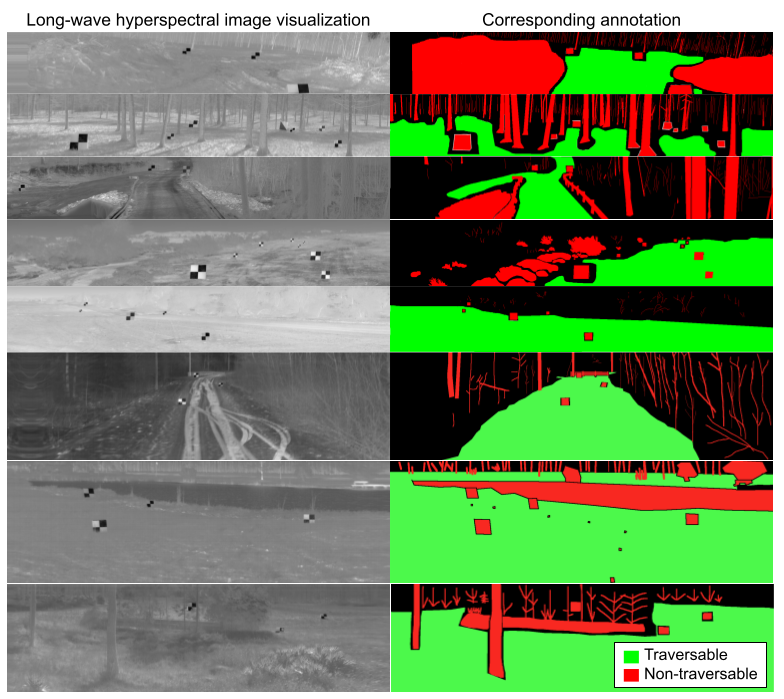

The DARPA Invisible Headlights Dataset is a large-scale, multi-sensor dataset annotated for autonomous navigation in challenging off-road environments. It features simultaneously collected imagery from multispectral, hyperspectral, polarimetric, and broadband sensors spanning wavelengths from the visible spectrum to long-wave infrared, and it provides aligned LiDAR data for ground-truth shape. The dataset consists of approximately 145 paths from 4 collection sites across the US. Data was collected in a variety of weather conditions, across multiple seasons, and during both daytime and nighttime, and it captures challenging scenes and obstacles such as forest, desert, snow, ponds, and fields. Camera calibrations, LiDAR registrations, and traversability annotations for a subset of the data are released with the dataset.

A subset of the dataset is publicly available. The full dataset has been released on the AWS Registry of Open Data.

Structure and content of the DARPA Invisible Headlights Dataset

The dataset consists of raw data collected by the sensors and derived data, which includes camera calibrations, LiDAR registrations, and image/LiDAR annotations. At the top level, the data is split into raw and derived data.

The raw data is then organized into a four-level hierarchy: collect, then path, then step, then imagery from the various sensors. Each collect comes from a single collection location and spans one week. Each path is a trajectory driven by the sensor-mounted vehicle. The steps are intermediate positions along the trajectory where the sensors captured simultaneous images. The file names indicate which sensor was used, such as IHTest___DistStA., by encoding a sensor type and a sensor number/side. Examples of sensor type are AutoLidar (low-resolution LiDAR), HiResLIDAR (high-resolution LiDAR), BBLW (broadband long-wave), BBSWIR (broadband short-wave infrared), BBVIS (broadband visual), LWHSI (long-wave hyperspectral imagery), and LWPol (long-wave polarimetric). Examples of sensor number/side are 0, 1, LEFT, and RIGHT, which differentiate between multiple sensors of the same type (for example, when multiple sensors were used to collect stereo pairs).
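The collect/path/step hierarchy can be indexed with a short script. The following is only a minimal sketch under stated assumptions: it assumes a local copy whose directories mirror the hierarchy as `<raw_root>/<collect>/<path>/<step>/<file>`, and it assumes the sensor type appears in the file name as an underscore-delimited token. Neither assumption is confirmed by the dataset description, and the names `sensor_type_of` and `index_raw_data` are hypothetical helpers, not part of any released tooling.

```python
from collections import defaultdict
from pathlib import Path
from typing import Optional

# Sensor type codes listed in the dataset description.
SENSOR_TYPES = ("AutoLidar", "HiResLIDAR", "BBLW", "BBSWIR", "BBVIS", "LWHSI", "LWPol")


def sensor_type_of(file_name: str) -> Optional[str]:
    """Return the known sensor type found in the file name, or None.

    Assumption: the sensor type is an underscore-delimited token of the name.
    """
    tokens = Path(file_name).stem.split("_")
    for code in SENSOR_TYPES:
        if code in tokens:
            return code
    return None


def index_raw_data(raw_root: Path) -> dict:
    """Map (collect, path, step) -> {sensor type: [file names]}.

    Assumption: files sit exactly three directory levels below raw_root.
    """
    index = defaultdict(lambda: defaultdict(list))
    for file in sorted(raw_root.glob("*/*/*/*")):
        if not file.is_file():
            continue
        collect, path, step = file.relative_to(raw_root).parts[:3]
        code = sensor_type_of(file.name)
        if code is not None:
            index[(collect, path, step)][code].append(file.name)
    return index
```

Because not every sensor is present at every step, an index like this makes it straightforward to select only the steps that contain the sensor types a given experiment needs.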

The derived data is organized into a similar hierarchy, with an additional final level indicating the type of derived data, such as stereo, LiDAR_annotations, or LWHSI_annotation. The derived data file names mimic the original file names, with an appended description of the derived data type, such as _ann (annotation) or _traversability_ann_hsi (traversability annotation from hyperspectral imagery). Not every sensor captured data at every step, and some paths were collected with the vehicle stationary for an extended period of time (i.e., a single step collected during thermal crossover).
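Since derived file names mimic the raw file names plus a descriptor, a derived file can be matched back to its raw counterpart by stripping that descriptor. The sketch below is illustrative only: it assumes the descriptor is appended to the end of the file stem (just before the extension), and it knows only the two descriptors named above. The helper name `split_derived_name` is hypothetical.

```python
from pathlib import Path
from typing import Optional, Tuple

# Descriptors named in the dataset description; the dataset may use others.
KNOWN_SUFFIXES = ("_traversability_ann_hsi", "_ann")


def split_derived_name(file_name: str) -> Tuple[str, Optional[str]]:
    """Split a derived file name into (raw stem, descriptor).

    Assumption: the descriptor is the trailing part of the stem.
    Returns (stem, None) when no known descriptor is present.
    """
    stem = Path(file_name).stem
    # Check longer descriptors first so a short one never shadows a longer one.
    for suffix in sorted(KNOWN_SUFFIXES, key=len, reverse=True):
        if stem.endswith(suffix):
            return stem[: -len(suffix)], suffix
    return stem, None
```

The returned raw stem can then be compared against the stems of the raw files in the corresponding step to pair each annotation with its source image.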

Attribution

This dataset is licensed under CC BY 4.0.

This dataset was developed with funding from the Defense Advanced Research Projects Agency (DARPA). Distribution Statement A: Approved for public release. Distribution is unlimited.

We hope this dataset can facilitate future research. If you find this data useful, please cite our WACV 2024 publication:

F. Yellin, S. McCloskey, C. Hill, E. Smith, and B. Clipp, “Concurrent Band Selection and Traversability Estimation from Long-Wave Hyperspectral Imagery in Off-Road Settings,” in 2024 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2024.

BibTeX:

@InProceedings{Yellin_2024_WACV,
  author    = {Yellin, Florence and McCloskey, Scott and Hill, Cole and Smith, Eric and Clipp, Brian},
  title     = {Concurrent Band Selection and Traversability Estimation from Long-Wave Hyperspectral Imagery in Off-Road Settings},
  booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},
  month     = {January},
  year      = {2024}
}