Why Everyone is Thinking About Ethical AI, and You Should Too

Facebook calls them the “Five Pillars of Responsible AI.” Google describes them as “Our Principles” for artificial intelligence at Google. The U.S. Intelligence Community has an “Ethics Framework” for artificial intelligence, and the U.S. Department of Defense has “Ethical Principles for Artificial Intelligence.” Each of these documents takes a slightly different approach to the same task: enumerating a set of conditions and principles that the development and deployment of artificial intelligence capabilities must follow to reflect the moral and ethical expectations of the society that uses them.

While there are variations in these statements, they make it clear that ethical and responsible AI is getting explicit attention not only within AI research communities, but also from the government and commercial customers we work with at Kitware.

Using AI for Good, Not Bad

There are undeniably pros and cons to using AI, but we believe that, when developed and used responsibly, its benefits outweigh the risks. That’s why our team has worked to advance AI technology that’s used for positive social impact. For example, our do-it-yourself AI toolkit, VIAME, was originally developed in partnership with the National Oceanic and Atmospheric Administration to assess marine life populations and determine the health of our aquatic ecosystems.

Kitware is also heavily involved in efforts to defend against AI used for societal harm. One of the projects our team is currently leading is the DARPA SemaFor program. We are working to develop an algorithm that detects disinformation and other inconsistencies in multimedia articles and posts. Usually, such false information is generated using some form of AI. For example, one of our customers recently released a neural network that can create highly realistic synthetic imagery suitable for animation and video generation. Adversaries can use such tools to create false information, sow discord, and reduce trust in information sources.

Awareness Leads to Ethical AI Practices

The more people know about ethical AI and incorporate it into their workflows, the safer our society will be. That’s why our team is dedicated to increasing awareness of the field of ethical AI. Over the past year, we published multiple academic papers (Explainable, interactive content-based image retrieval; XAITK: The explainable AI toolkit; X-MIR: Explainable Medical Image Retrieval, related to COVID; and Doppelganger Saliency: Towards More Ethical Person Re-Identification) and organized a workshop on ethical AI at the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. It is worth noting that many of the premier AI conferences, including CVPR, NeurIPS, and AAAI, now require reviewers to assess papers based on the researchers’ attention to ethical concerns such as fairness, privacy protection, the potential for societal harm, and adherence to human subjects research protocols.

Incorporating Ethical AI into Customer Projects

Many organizations, including U.S. government agencies, academic institutions, and commercial customers, are looking to adopt ethical AI practices, a sign that responsible AI is becoming a significant priority. Kitware consistently focuses on ethics when developing and deploying artificial intelligence modules, regardless of whether they are purely for research or intended for real-world use. Our team works closely with our peer researchers and customers to ensure these capabilities comply with the ethical principles they have established to govern this work.

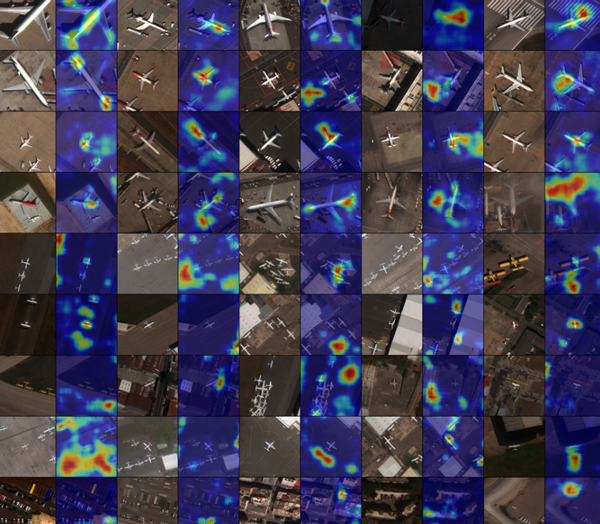

There is clear evidence suggesting increased interest in ethical AI among our customers and collaborators. During a recent contract negotiation with one of our defense customers, we were asked to describe how our work on the project would conform to the DoD ethical AI principles. Under our government-funded DARPA URSA project, additional funding was available to explore how explicitly considering legal, moral, and ethical (LME) elements can affect the development and evaluation of AI-based capabilities. We found that such considerations frequently led to product enhancement rather than creating constraints or restrictions. For example, the Laws of Armed Conflict include the concepts of “Honor,” dealing with fairness and respect among adversaries, and “Distinction,” dealing with differentiating between combatants and non-combatants. To address these concepts on URSA, which conducts sensing in complex, adversarial urban environments, we added a “no-sense zone” capability. This allows mission commanders to designate sensitive areas, such as religious or child care facilities, for reduced or completely prohibited sensing. This feature also significantly helps the evaluation effort – establishing a no-sense zone on a test range provides space for field test personnel to observe without polluting the test environment.
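To make the “no-sense zone” idea concrete, here is a minimal Python sketch of how designated areas might be applied to incoming observations. It is an illustration only, not the URSA implementation: the names `SensingPolicy`, `NoSenseZone`, `policy_at`, and `filter_observations` are hypothetical, zones are simplified to axis-aligned rectangles in a local coordinate frame, and “reduced” sensing is assumed to mean keeping only an observation’s location. A fielded system would more likely restrict collection at the sensor itself rather than filter afterward.

```python
from dataclasses import dataclass
from enum import Enum


class SensingPolicy(Enum):
    FULL = 0        # no restriction
    REDUCED = 1     # assumption: keep location only, drop all other detail
    PROHIBITED = 2  # discard the observation entirely


@dataclass(frozen=True)
class NoSenseZone:
    """Hypothetical rectangular zone in a local x/y coordinate frame."""
    name: str
    min_x: float
    min_y: float
    max_x: float
    max_y: float
    policy: SensingPolicy

    def contains(self, x: float, y: float) -> bool:
        return self.min_x <= x <= self.max_x and self.min_y <= y <= self.max_y


def policy_at(x: float, y: float, zones: list) -> SensingPolicy:
    """Return the most restrictive policy among all zones covering (x, y)."""
    policy = SensingPolicy.FULL
    for zone in zones:
        if zone.contains(x, y) and zone.policy.value > policy.value:
            policy = zone.policy
    return policy


def filter_observations(observations: list, zones: list) -> list:
    """Drop observations in prohibited zones; strip detail in reduced zones."""
    kept = []
    for obs in observations:
        policy = policy_at(obs["x"], obs["y"], zones)
        if policy is SensingPolicy.PROHIBITED:
            continue
        if policy is SensingPolicy.REDUCED:
            obs = {"x": obs["x"], "y": obs["y"]}
        kept.append(obs)
    return kept


# Example: a prohibited area surrounded by a larger reduced-sensing buffer.
zones = [
    NoSenseZone("child care facility", 0, 0, 10, 10, SensingPolicy.PROHIBITED),
    NoSenseZone("buffer", -5, -5, 15, 15, SensingPolicy.REDUCED),
]
observations = [
    {"x": 5, "y": 5, "label": "person"},
    {"x": 12, "y": 12, "label": "vehicle"},
    {"x": 50, "y": 50, "label": "vehicle"},
]
print(filter_observations(observations, zones))
```

Where zones overlap, the sketch applies the most restrictive policy, which errs on the side of not sensing. The same mechanism would cover the test-range case described above: marking the observers’ area as prohibited keeps field personnel out of the collected data.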

Making Ethical AI a Priority

As with most areas of technology development, there will always be risks associated with AI. Kitware’s approach is to find the best uses of the technology and apply them for good, and we encourage others to do the same. We look forward to continuing this work with our customers and collaborators as they seek to develop artificial intelligence capabilities ethically and responsibly. Contact our AI team to learn more about how Kitware can help you integrate ethical AI into your software and algorithms.