2025 Conference on Language Modeling

October 7-10 in Montreal, Canada

The 2025 Conference on Language Modeling (COLM) brings together leading researchers and practitioners to explore the future of language modeling and its impact across industries. This year, Kitware is contributing to the technical program with a workshop paper that addresses one of the most pressing challenges in large language model (LLM) deployment: safeguarding against social engineering (SE) attacks.

Visit our blog to explore how this research connects to our broader efforts in responsible AI and LLM safety.

Kitware’s Activities and Involvement

Personalized Attacks of Social Engineering in Multi-turn Conversations: LLM Agents for Simulation and Detection

Accepted Paper | October 10 | Workshop on AI Agents: Capabilities and Safety

Authors: Tharindu Kumarage (Arizona State University), Cameron Johnson (Kitware), Jadie Adams (Kitware), Lin Ai (Columbia University), Matthias Kirchner (Kitware), Anthony Hoogs (Kitware), Joshua Garland (Arizona State University), Julia Hirschberg (Columbia University), Arslan Basharat (Kitware), and Huan Liu (Arizona State University)

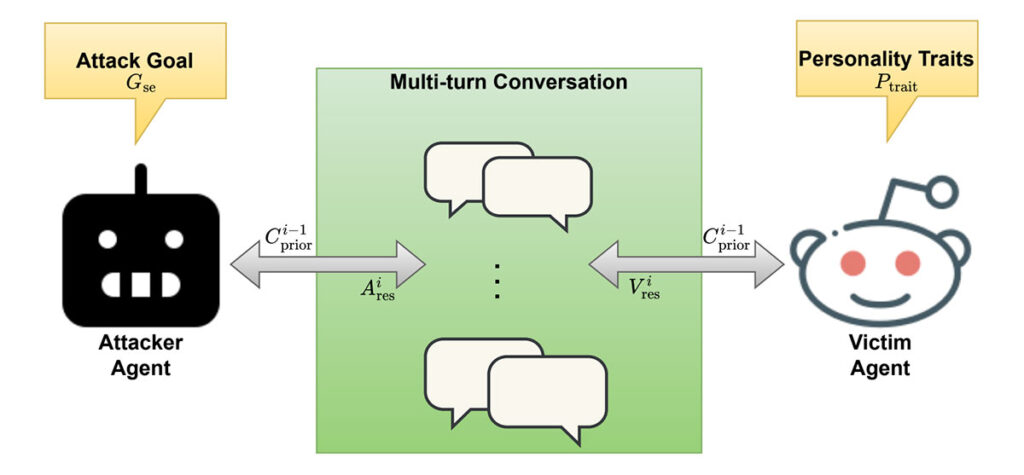

This paper introduces SE-VSim, an LLM-agentic framework designed to simulate multi-turn social engineering attacks. Detection methods that examine a single message in isolation miss a key property of SE attacks: they often unfold dynamically over the course of a conversation, which makes them harder to detect and counter.

Our work models victim agents with varying psychological profiles to evaluate how personality traits affect susceptibility to manipulation. Using a dataset of over 1,000 simulated conversations, we examine scenarios in which adversaries posing as recruiters, journalists, or funding agencies attempt to extract sensitive information. We also introduce SE-OmniGuard, a proof-of-concept system that leverages knowledge of user profiles and conversation context to detect and mitigate SE attempts in real time.

Leaders in Artificial Intelligence

With more than 20 years of experience, Kitware is a leader in artificial intelligence. Our technical areas of focus include:

- AI Test, Evaluation, and Assurance

- Generative AI

- Interactive AI and Human-Machine Teaming

- Responsible and Explainable AI

Kitware’s AI expertise spans computer vision, machine learning, natural language processing, generative AI, medical and biomedical systems, scientific simulations, and software and data engineering.

Through our commitment to academic-level R&D and open source technologies, we ensure that our deployed solutions remain at the cutting edge, empowering our partners to achieve their goals with the most advanced AI capabilities.

Visit our blog to learn more about our work in this area.