Cumulus: Enabling Complex HPC Workflows

We define a high-performance computing/high-throughput computing (HPC/HTC) workflow as the sequence of tasks that must be executed to carry out a computation on HPC/HTC resources. Cumulus, an open source platform, dynamically executes HPC/HTC workflows. In particular, it offers functionality in four key areas: workflow orchestration, cluster provisioning, job submission and data management.

Workflow Orchestration

Tasks within an HPC/HTC workflow can be jobs that run on HPC/HTC resources or auxiliary tasks that run outside of them. Example tasks include uploading data to a cluster, submitting a job and analyzing the results. To orchestrate these tasks, Cumulus provides a task execution engine built on Celery, a proven distributed task-queuing system.
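To make the idea of orchestration concrete, here is a minimal sketch of a three-step workflow in which each task's result is handed to the next task, the same pattern that Celery's `chain` primitive provides. It uses only the Python standard library and runs synchronously in one process; the task functions and the `run_chain` helper are hypothetical and are not part of the Cumulus or Celery APIs.

```python
from typing import Any, Callable, Sequence

# Hypothetical task signature: every task takes the previous result.
Task = Callable[[Any], Any]


def upload_data(path: str) -> dict:
    # Auxiliary task that would run outside the cluster (staging input data).
    return {"input": path, "uploaded": True}


def submit_job(state: dict) -> dict:
    # Task that would hand the work to the cluster's scheduler.
    return {**state, "job_id": "job-001", "status": "complete"}


def analyze_results(state: dict) -> dict:
    # Post-processing task that consumes the job's output.
    return {**state, "summary": f"analyzed output of {state['job_id']}"}


def run_chain(tasks: Sequence[Task], initial: Any) -> Any:
    """Run tasks in order, passing each task's return value to the next.

    This mirrors the semantics of Celery's chain(), but executes
    synchronously in-process, with no message broker or workers.
    """
    result = initial
    for task in tasks:
        result = task(result)
    return result


if __name__ == "__main__":
    final = run_chain([upload_data, submit_job, analyze_results], "mesh.exo")
    print(final["summary"])
```

With Celery itself, a comparable pipeline could be written as `chain(upload_data.s("mesh.exo"), submit_job.s(), analyze_results.s())` once the functions are registered as Celery tasks; Celery then dispatches each step to a worker through its message broker.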

Cluster Provisioning

Cumulus launches and provisions HPC/HTC resources. It works with traditional HPC/HTC clusters as well as dynamic clusters built from virtual servers in public or private cloud computing environments, such as Amazon Web Services (AWS). A major benefit of Cumulus is that it lets you match the size and characteristics of each cluster to your workflow.
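One way to picture matching a cluster to a workflow is as a sizing problem: given the total number of cores a workflow needs and the memory it needs per core, pick an instance type and a node count. The sketch below is hypothetical throughout: the catalog, the instance names and the selection rule (fewest provisioned cores, then fewest nodes) are illustrative assumptions, not how Cumulus chooses clusters.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class InstanceType:
    name: str
    cores: int
    memory_gb: float


@dataclass(frozen=True)
class ClusterSpec:
    instance: InstanceType
    node_count: int


# Hypothetical catalog; real instance names and sizes depend on the provider.
CATALOG = (
    InstanceType("small", cores=4, memory_gb=16),
    InstanceType("large", cores=16, memory_gb=64),
    InstanceType("highmem", cores=16, memory_gb=128),
)


def size_cluster(total_cores: int, memory_per_core_gb: float,
                 catalog: Sequence[InstanceType] = CATALOG) -> ClusterSpec:
    """Choose an instance type and node count that cover the workflow's needs.

    Only instance types with enough memory per core are considered. Among
    those, the one that provisions the fewest total cores wins (least idle
    capacity), with ties broken by the smaller node count.
    """
    candidates = [t for t in catalog
                  if t.memory_gb / t.cores >= memory_per_core_gb]
    if not candidates:
        raise ValueError("no instance type offers enough memory per core")

    def cost(t: InstanceType) -> tuple:
        nodes = math.ceil(total_cores / t.cores)
        return (nodes * t.cores, nodes)

    best = min(candidates, key=cost)
    return ClusterSpec(best, math.ceil(total_cores / best.cores))
```

For example, `size_cluster(20, 6)` rules out the two instance types that offer only 4 GB per core and returns two `highmem` nodes.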

Job Submission

Facilities provide access to HPC/HTC resources through job schedulers, which hold submitted jobs in a queue until the requested processors and memory become available. Through its unified interface, Cumulus lets you submit jobs to several different schedulers.
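A unified submission interface usually comes down to one adapter per scheduler that knows how to build the submit command and how to read back the job ID. The sketch below uses Slurm, PBS and SGE as example schedulers; the class and function names are hypothetical and do not claim to reflect Cumulus's internals or its list of supported schedulers. The `run` parameter lets the command be executed elsewhere (for instance over SSH) or replaced with a fake in tests.

```python
import re
import subprocess
from abc import ABC, abstractmethod
from typing import Callable, List


class SchedulerAdapter(ABC):
    """One submission interface; scheduler-specific details live in subclasses."""

    @abstractmethod
    def submit_command(self, script: str) -> List[str]:
        """Return the command line that submits a batch script."""

    @abstractmethod
    def parse_job_id(self, output: str) -> str:
        """Extract the job ID from the submit command's standard output."""


class SlurmAdapter(SchedulerAdapter):
    def submit_command(self, script: str) -> List[str]:
        return ["sbatch", script]

    def parse_job_id(self, output: str) -> str:
        # sbatch reports e.g. "Submitted batch job 12345"
        match = re.search(r"Submitted batch job (\d+)", output)
        if match is None:
            raise ValueError(f"unexpected sbatch output: {output!r}")
        return match.group(1)


class PbsAdapter(SchedulerAdapter):
    def submit_command(self, script: str) -> List[str]:
        return ["qsub", script]

    def parse_job_id(self, output: str) -> str:
        # PBS qsub prints the job identifier, e.g. "12345.server"
        job_id = output.strip()
        if not job_id:
            raise ValueError("empty qsub output")
        return job_id


class SgeAdapter(SchedulerAdapter):
    def submit_command(self, script: str) -> List[str]:
        return ["qsub", script]

    def parse_job_id(self, output: str) -> str:
        # SGE qsub reports e.g. 'Your job 12345 ("run.sh") has been submitted'
        match = re.search(r"Your job (\d+)", output)
        if match is None:
            raise ValueError(f"unexpected qsub output: {output!r}")
        return match.group(1)


ADAPTERS = {"slurm": SlurmAdapter(), "pbs": PbsAdapter(), "sge": SgeAdapter()}


def run_locally(cmd: List[str]) -> str:
    """Run a command on this host and return its standard output."""
    # Assumes the scheduler's command-line tools are installed on this host.
    return subprocess.run(cmd, capture_output=True, text=True,
                          check=True).stdout


def submit(scheduler: str, script: str,
           run: Callable[[List[str]], str] = run_locally) -> str:
    """Submit a batch script to the named scheduler and return its job ID."""
    adapter = ADAPTERS[scheduler]
    return adapter.parse_job_id(run(adapter.submit_command(script)))
```

Because callers only see `submit()`, supporting another scheduler means adding one adapter class rather than changing application code.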

Data Management

By their very nature, HPC/HTC workflows are data-driven. Cumulus handles data management through Girder, a scalable data management system built on MongoDB. Girder uses abstractions called assetstores to represent the repositories that store the raw content of files. As a Girder plugin, Cumulus uses these assetstores to access data hosted on remote HPC/HTC cluster file systems, so you do not need to move potentially large files onto your local file system.
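The benefit of the assetstore abstraction is that code reading a file does not need to know where the bytes live. The sketch below is a hypothetical, much-simplified version of that idea (Girder's actual assetstore adapters have a different and richer interface): a reader asks an `Assetstore` for a stream of chunks and stops as soon as it has what it needs. A remote implementation, for example one reading from a cluster file system over SFTP, would implement the same `stream()` method, so `head()` would work unchanged without copying the whole file locally.

```python
import os
from abc import ABC, abstractmethod
from typing import Iterator


class Assetstore(ABC):
    """Hypothetical, simplified assetstore: it knows where the raw bytes of a
    file live and streams them on request."""

    @abstractmethod
    def stream(self, path: str, chunk_size: int = 65536) -> Iterator[bytes]:
        """Yield the file's content in chunks of at most chunk_size bytes."""


class LocalAssetstore(Assetstore):
    """Serves files from a directory on the local file system."""

    def __init__(self, root: str) -> None:
        self.root = root

    def stream(self, path: str, chunk_size: int = 65536) -> Iterator[bytes]:
        with open(os.path.join(self.root, path), "rb") as fh:
            while True:
                chunk = fh.read(chunk_size)
                if not chunk:
                    break
                yield chunk


def head(store: Assetstore, path: str, n: int,
         chunk_size: int = 65536) -> bytes:
    """Return the first n bytes of a file, reading only as many chunks as needed."""
    buf = bytearray()
    for chunk in store.stream(path, chunk_size):
        buf.extend(chunk)
        if len(buf) >= n:
            break  # stop early instead of transferring the whole file
    return bytes(buf[:n])
```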

Cumulus in Action

Cumulus not only dynamically executes HPC/HTC workflows but also provides the infrastructure you need to build applications that use HPC/HTC resources. Its simple RESTful interface hides much of the complexity associated with these resources, so you can concentrate on application development. Several open source projects currently build on Cumulus, including HPCCloud, Computational Model Builder (CMB) and Open Chemistry.
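Because the interface is RESTful, any HTTP client can drive it. The sketch below only builds an authenticated JSON request with Python's standard `urllib.request` module. The server address, the `clusters` path and the request body are hypothetical placeholders; consult the Cumulus documentation for the actual routes and payloads. The `Girder-Token` header is the mechanism Girder uses to authenticate API calls.

```python
import json
from typing import Optional
from urllib.request import Request

# Hypothetical server address; Girder serves its REST API under /api/v1.
API_ROOT = "http://localhost:8080/api/v1"


def build_request(method: str, path: str, token: str,
                  body: Optional[dict] = None) -> Request:
    """Build (but do not send) an authenticated JSON request to the API."""
    data = None if body is None else json.dumps(body).encode("utf-8")
    req = Request(f"{API_ROOT}/{path.lstrip('/')}", data=data, method=method)
    # Girder authenticates requests with a token passed in this header.
    req.add_header("Girder-Token", token)
    if data is not None:
        req.add_header("Content-Type", "application/json")
    return req


# Hypothetical usage: the route and payload fields are placeholders.
req = build_request("POST", "/clusters", token="abc123",
                    body={"name": "demo-cluster"})
```

Passing the returned object to `urllib.request.urlopen()` would send it to a running server.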

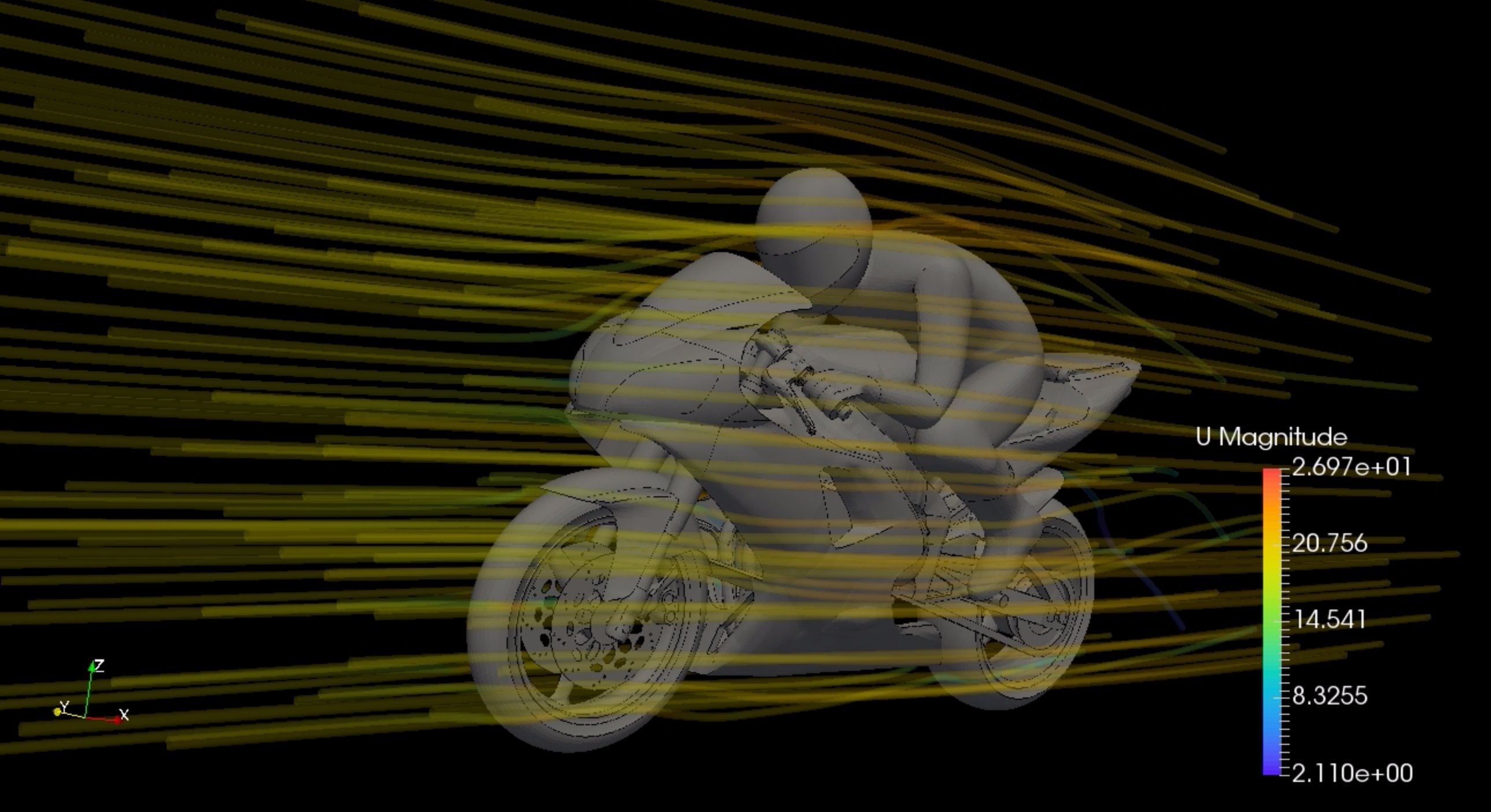

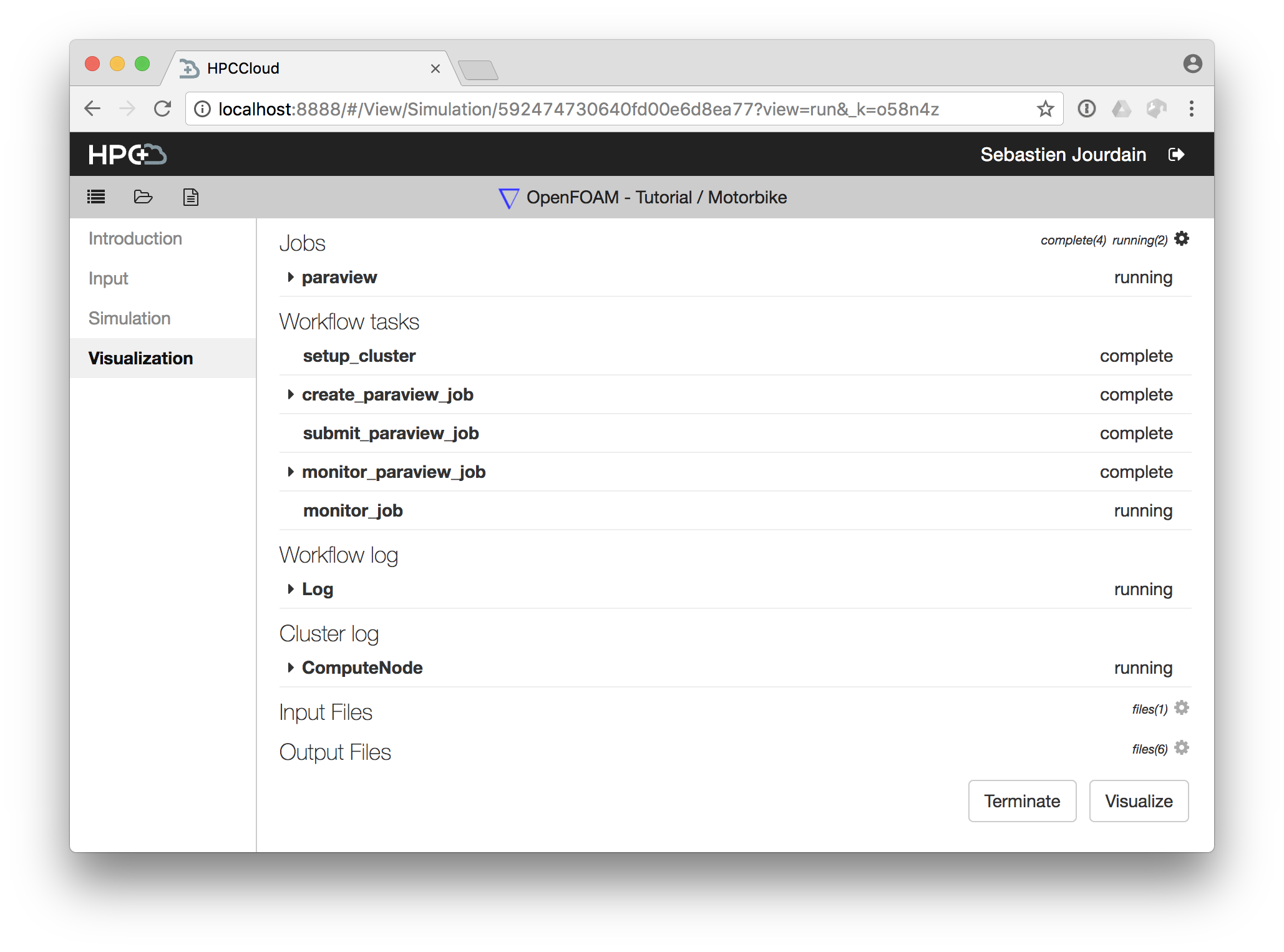

HPCCloud

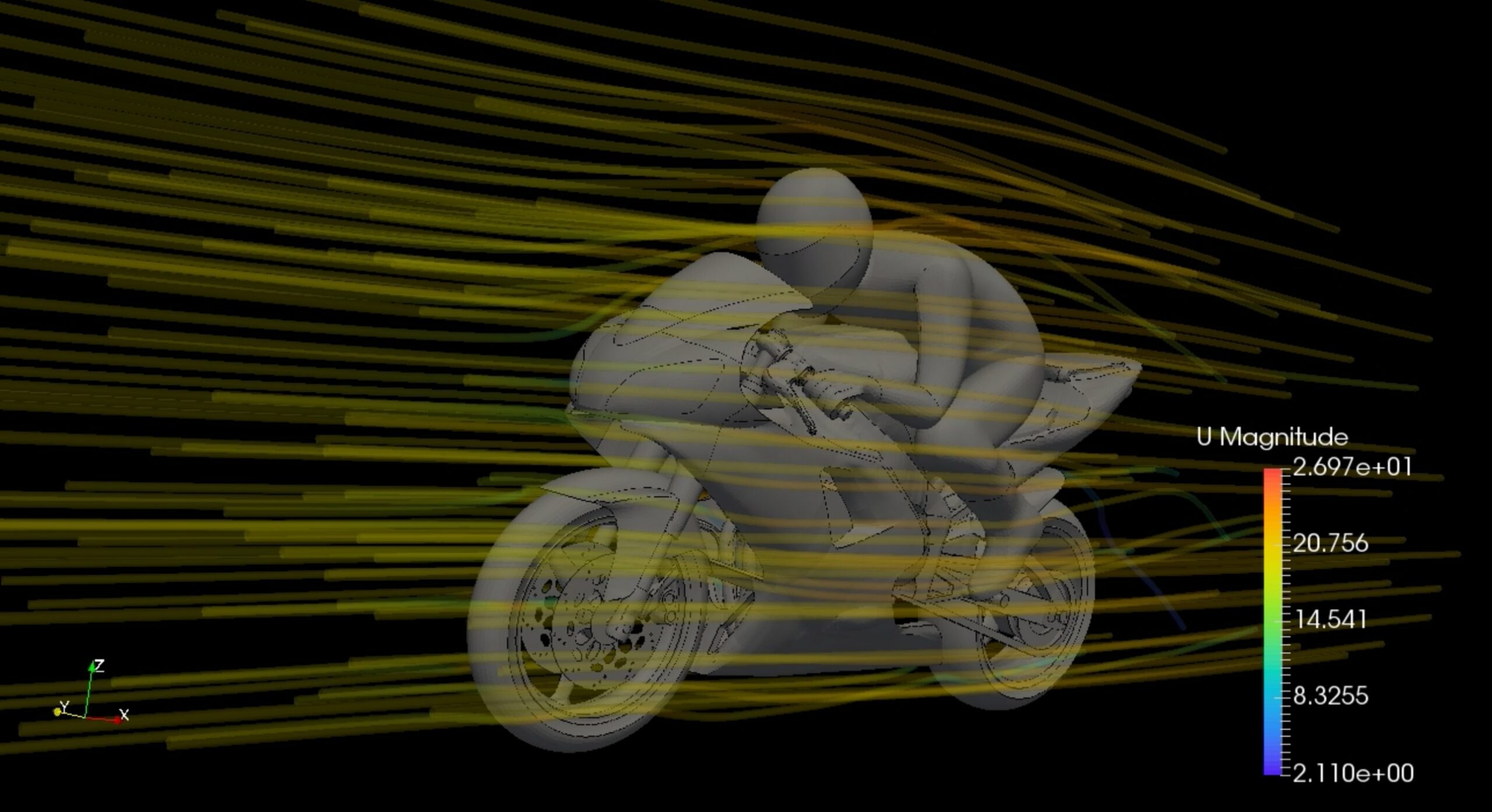

HPCCloud is a web platform that lets you run simulation workflows without leaving your browser. HPCCloud uses Cumulus to manage complex computational jobs for meshing, simulation and visualization on Amazon Elastic Compute Cloud (EC2) and on users' own cluster hardware.

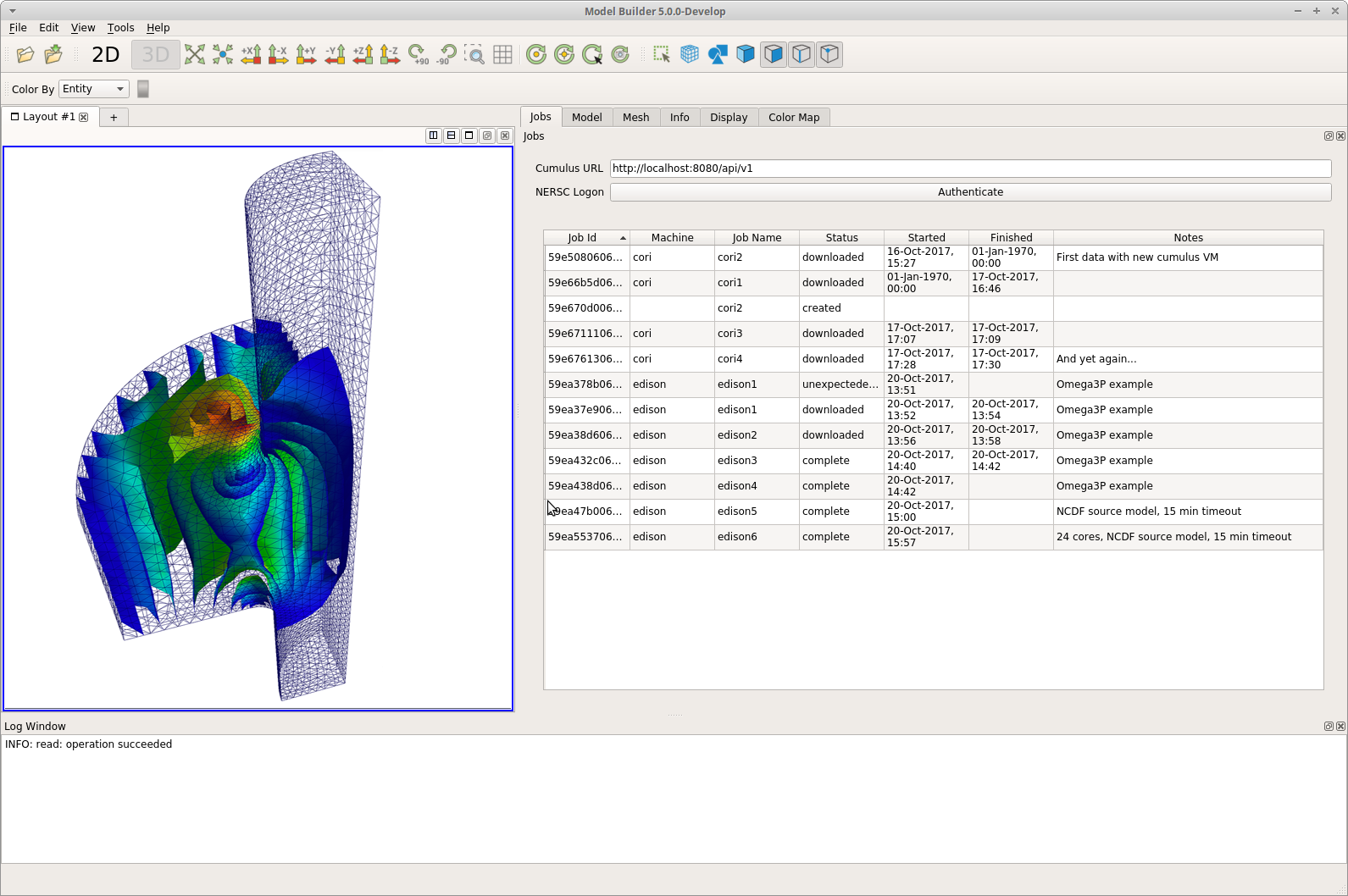

Computational Model Builder

Cumulus works with CMB to give you intuitive access to HPC facilities from your desktop. Together, the platforms make it easy to interact with complex simulation workflows, such as the high-energy physics workflow pictured below, which is based on the Advanced Computational Electromagnetic Simulation Suite (ACE3P) from SLAC.

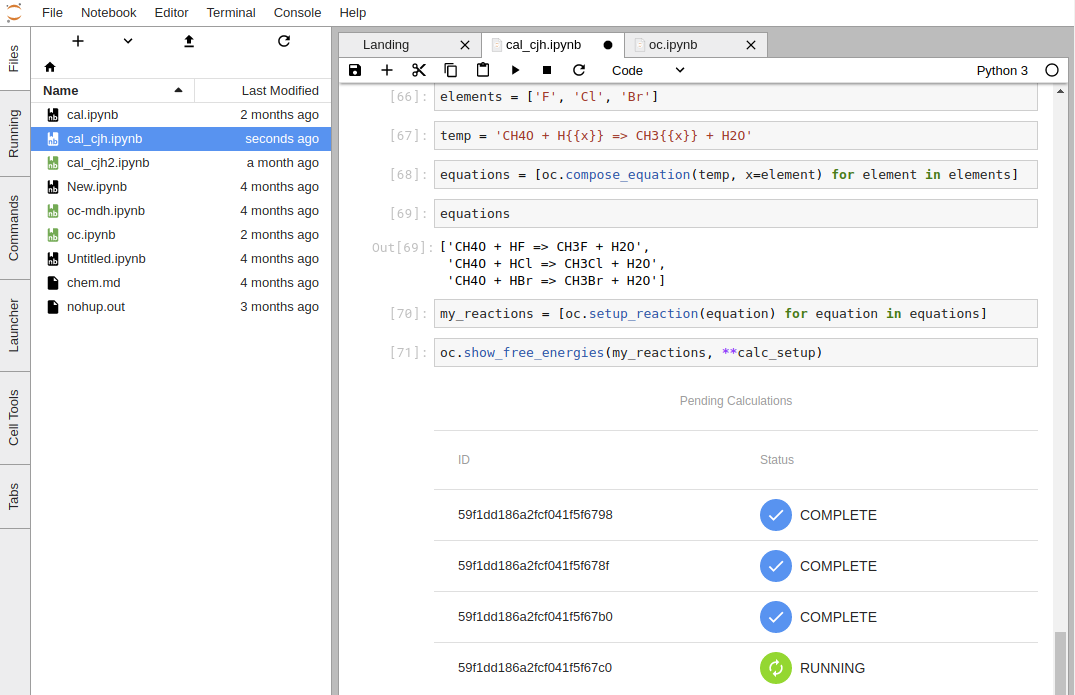

Open Chemistry

Open Chemistry uses Cumulus and JupyterLab to provide a user-friendly platform for reproducible research that embraces modern web and data standards. The platform is also designed to be extensible and federated. Its primary interface extends JupyterLab with the ability to look up chemical structures, run calculations on them and visualize the results in notebooks. Within these notebooks, Cumulus handles submitting the calculations and monitoring their status. The image below shows an example.
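Monitoring a calculation typically means polling its status until it reaches a final state. The sketch below shows a polling loop with exponential backoff and a timeout; the status names, the `get_status` callable and the default intervals are hypothetical assumptions, not the way Cumulus or Open Chemistry actually track jobs. The `sleep` and `clock` parameters are injectable so that the loop can be tested without real waiting.

```python
import time
from typing import Callable

# Hypothetical status names; the real set depends on the job system in use.
TERMINAL_STATES = frozenset({"complete", "error", "terminated"})


def wait_for_job(get_status: Callable[[], str],
                 interval: float = 5.0,
                 max_interval: float = 60.0,
                 timeout: float = 3600.0,
                 sleep: Callable[[float], None] = time.sleep,
                 clock: Callable[[], float] = time.monotonic) -> str:
    """Poll get_status() until the job reaches a terminal state.

    The delay between polls doubles after each check, capped at max_interval,
    so long-running calculations are not polled more often than necessary.
    Raises TimeoutError if no terminal state is seen within timeout seconds.
    """
    start = clock()
    delay = interval
    while True:
        status = get_status()
        if status in TERMINAL_STATES:
            return status
        if clock() - start >= timeout:
            raise TimeoutError(f"job still {status!r} after {timeout} s")
        sleep(delay)
        delay = min(delay * 2, max_interval)
```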

Getting Started

As HPCCloud, Computational Model Builder and Open Chemistry demonstrate, Cumulus can support a variety of applications, from modern web applications to interactive Jupyter notebooks to more traditional desktop applications. Documentation for Cumulus, along with the most recent version of the platform, is available on GitHub.

Acknowledgement

This material is based upon work supported by the U.S. Department of Energy, Office of Science, Office of Acquisition and Assistance, under Award Number DE-SC0012037.

This report was prepared as an account of work sponsored by an agency of the United States Government. Neither the United States Government nor any agency thereof, nor any of their employees, makes any warranty, express or implied, or assumes any legal liability or responsibility for the accuracy, completeness, or usefulness of any information, apparatus, product, or process disclosed, or represents that its use would not infringe privately owned rights. Reference herein to any specific commercial product, process, or service by trade name, trademark, manufacturer, or otherwise does not necessarily constitute or imply its endorsement, recommendation, or favoring by the United States Government or any agency thereof. The views and opinions of authors expressed herein do not necessarily state or reflect those of the United States Government or any agency thereof.