Delivering Innovation in Medical Image Visualization

Kitware is extremely grateful to the NIH for funding the continued expansion of the capabilities of VTK, in support of medical image visualization. We are entering the fourth year of our NIH R01 from the National Institute of Biomedical Imaging and Bioengineering (NIBIB), “Accelerating Community-Driven Medical Innovation with VTK (R01EB014955)”, and this is a look back on our accomplishments during 2021 and our plans for 2022, relevant to that grant.

As background, VTK (https://vtk.org/) is Kitware’s Visualization Toolkit, an open source, freely available software system for computer graphics, modeling, volume rendering, scientific visualization, and plotting. It supports a wide variety of visualization algorithms and advanced modeling techniques, and it takes advantage of both threaded and distributed memory parallel processing for speed and scalability, respectively. It is written in C++ and available in other languages and environments via wrapping, e.g., Python, Java, C#, and Unity.

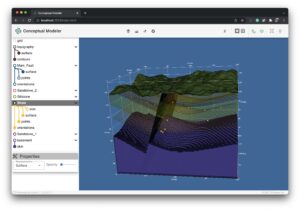

VTK.js (https://kitware.github.io/vtk-js/index.html) is an open source implementation of VTK using plain JavaScript (ES6) to bring the power of VTK into the web browser, running on any modern platform. The focus of the implementation, so far, has been the rendering pipeline, the pipeline infrastructure, and frequently used readers, e.g., obj, stl, vtp, and vti. Some of the most commonly used VTK filters are also provided; however, we are not aiming for VTK.js to provide the same set of filters that is available in VTK. It is easy to export 3D and 4D scenes from VTK-based applications such as ParaView and 3D Slicer and then display those scenes in a browser using VTK.js.
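For readers unfamiliar with the file formats named above, the following is a minimal sketch of what a .vti (VTK XML ImageData) file contains, written with only the Python standard library. The function name `make_vti`, the array name `intensity`, and the synthetic 2 x 2 x 2 volume are illustrative assumptions, not part of VTK or VTK.js. In practice such files are exported by VTK-based tools, usually with binary or compressed arrays rather than the ASCII encoding used here for readability.

```python
import xml.etree.ElementTree as ET


def make_vti(dims, spacing, values, name="intensity"):
    """Build a VTK XML ImageData (.vti) document as a string.

    dims    -- number of points along (x, y, z)
    spacing -- point spacing along (x, y, z)
    values  -- flat sequence of scalars, x varying fastest, then y, then z
    """
    nx, ny, nz = dims
    if len(values) != nx * ny * nz:
        raise ValueError("values must contain nx * ny * nz scalars")

    # Extents are inclusive point indices, so each axis runs from 0 to n - 1.
    extent = f"0 {nx - 1} 0 {ny - 1} 0 {nz - 1}"

    root = ET.Element(
        "VTKFile", type="ImageData", version="0.1", byte_order="LittleEndian"
    )
    image = ET.SubElement(
        root,
        "ImageData",
        WholeExtent=extent,
        Origin="0 0 0",
        Spacing=" ".join(str(s) for s in spacing),
    )
    piece = ET.SubElement(image, "Piece", Extent=extent)
    point_data = ET.SubElement(piece, "PointData", Scalars=name)
    array = ET.SubElement(
        point_data, "DataArray", type="Float32", Name=name, format="ascii"
    )
    array.text = " ".join(str(float(v)) for v in values)
    return ET.tostring(root, encoding="unicode")


# A tiny synthetic volume whose scalar value is simply the point index.
vti_text = make_vti(dims=(2, 2, 2), spacing=(1.0, 1.0, 2.5), values=range(8))
```

Writing `vti_text` to a file with a `.vti` extension gives a small image volume for experimenting with viewers. Real medical volumes are far larger, which is why binary encodings are preferred in practice.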

Admittedly, many exciting VTK and VTK.js accomplishments in 2021 were not funded by this NIH grant; however, it is beyond the scope of this blog to capture all of that work. Herein we focus simply on what was funded and/or significantly enabled by this specific grant. For a broader picture, we suggest reviewing the release notes and blog posts that can be found at the Kitware, VTK, and VTK.js websites.

The NIH “VTK Innovation” grant has four specific aims, and we are extremely happy that the aims we wrote four years ago are even more relevant today. Those aims and our 2021 accomplishments for each are as follows:

Aim 1: Adaptive visualization framework

From the grant proposal, “The growing prevalence of algorithms involving large data, web and cloud services, and specialized hardware (e.g., GPUs and deep learning chips) and the growing diversity of client hardware (e.g., phones, tablets, desktops, and supercomputers) challenge the design of visualization solutions. This Aim will produce an integrated framework that supports visualization applications that can adapt to a wide spectrum of application requirements and available local and cloud resources.”

This year we achieved and demonstrated the utility of this aim by releasing “trame” (https://kitware.github.io/trame/). Trame is a French word that means “the core that ties things together”, and that definition is perfect for what we have produced. Trame is a framework that leverages VTK, ParaView, and VTK.js and that demonstrates three key features:

- 3D Visualization: With best-in-class VTK and ParaView platforms at its core, trame provides complete control of 3D visualizations and data movements. Developers benefit from a write-once environment while trame exposes both local and remote rendering through a single method made possible through VTK.js. The resulting system can range from a local application to a remote service, running on a supercomputer or in the cloud, and accessed through a phone, tablet, or laptop browser.

- Rich features: Trame leverages our existing frameworks, VTK and ParaView, along with other open source tools such as matplotlib, Plotly, Altair, Vega, deck.gl, and more, to create vivid content for analysis and visualization applications. Trame simplifies building professional-looking, browser-based user interfaces using Python by exposing the Vuetify widget library. And trame is extensible to add any missing components as plugins.

- Problem focused: By relying simply on Python, trame enables users to focus on their data and the associated analysis and visualizations while hiding the complications of web development. Why Python? Python is a popular programming language widely used in data science, scientific computing, artificial intelligence, and medical imaging.

Together, these three features of trame make analysis and visualization applications ubiquitous while enabling the developer to be more efficient. Trame reduces the time and expertise required to write prototype applications. Additionally, trame delivers a smooth pathway to convert these rapid prototypes into comprehensive, production-ready applications that run everywhere.
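To make the idea of adapting to available resources concrete, here is a minimal sketch of the kind of decision an application might make between local rendering (geometry is sent to the browser and drawn there) and remote rendering (data stays on the server and images are streamed). This is not trame's API: the `Client` class, the `choose_rendering` function, and the memory headroom rule are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Client:
    """Hypothetical description of the device that opens the application."""

    has_gpu: bool
    memory_mb: int


def choose_rendering(dataset_mb: float, client: Client, headroom: float = 4.0) -> str:
    """Return "local" or "remote" for a dataset of the given size.

    Illustrative rule (an assumption, not a trame policy): render locally only
    when the client has a GPU and the dataset fits in its memory with some
    headroom; otherwise keep the data on the server and stream images.
    """
    fits_in_memory = dataset_mb * headroom <= client.memory_mb
    return "local" if client.has_gpu and fits_in_memory else "remote"


laptop = Client(has_gpu=True, memory_mb=16_000)
phone = Client(has_gpu=True, memory_mb=3_000)

modes = {
    "CT volume on laptop": choose_rendering(1_000, laptop),
    "CT volume on phone": choose_rendering(1_000, phone),
    "large simulation on laptop": choose_rendering(50_000, laptop),
}
```

With these made-up numbers, the laptop renders the CT volume locally, while the phone and the large simulation fall back to remote rendering. A real application would weigh more factors, such as network bandwidth and data privacy.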

Our work with trame has just begun. We announced trame at a tutorial for SuperComputing 2021 on Nov 17, 2021. The tutorial is now available online: https://vimeo.com/651667960. The continued expansion and dissemination of trame will be major efforts during 2022.

Aim 2: Integrated, interactive applications

“Modern applications must support a diversity of implementation languages ranging from C++ to JavaScript to Python as well as advanced tools such as Jupyter Labs and the PyTorch/TensorFlow deep learning libraries. We aim to extend our current support of Python and JavaScript and develop new methods that streamline the integration of deep learning into VTK pipelines and support visualization in deep learning research.”

We made outstanding progress on Aim 2, with major milestones completed or near completion, during 2021. Of those, the two that perhaps have the most potential to be impactful, and that were funded by this grant, are the following:

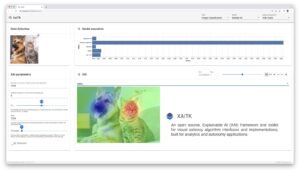

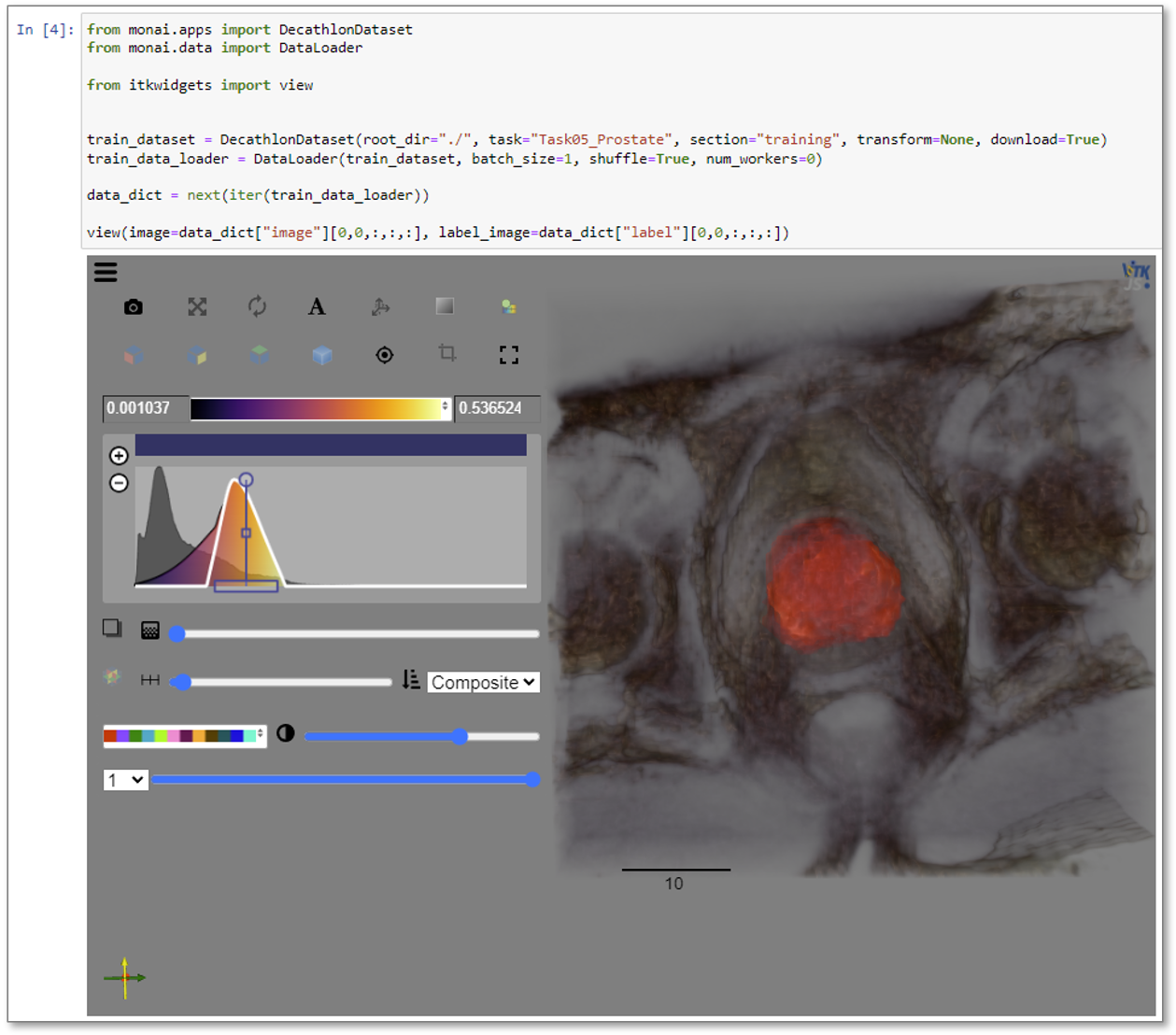

- itkWidgets: Built using VTK.js, itkWidgets (https://github.com/InsightSoftwareConsortium/itkwidgets) is now a leading medical data visualization tool for Jupyter Notebook and JupyterLab. Jupyter notebooks are the dominant platform for deep learning research and education in medicine. Dr. Aylward is serving as chair of the advisory board of MONAI (https://monai.io), the Medical Open Network for AI, which is the leading open source Python library for deep learning research and development in medical imaging. As chair, Dr. Aylward is tasked with learning about the needs of the medical image deep learning community, and it has become very apparent that there is a strong need for medical image visualization tools that can run with MONAI in Jupyter notebooks. itkWidgets addresses exactly that need. It is now a featured visualization tool in MONAI tutorials, blogs, example code, and pipelines. We are also in discussions with NIH’s The Cancer Imaging Archive (TCIA, https://www.cancerimagingarchive.net/) and NIH’s Imaging Data Commons (IDC, https://datacommons.cancer.gov/repository/imaging-data-commons) to have itkWidgets featured in the deep learning Jupyter notebooks that they are developing to highlight how their data can be used with MONAI.

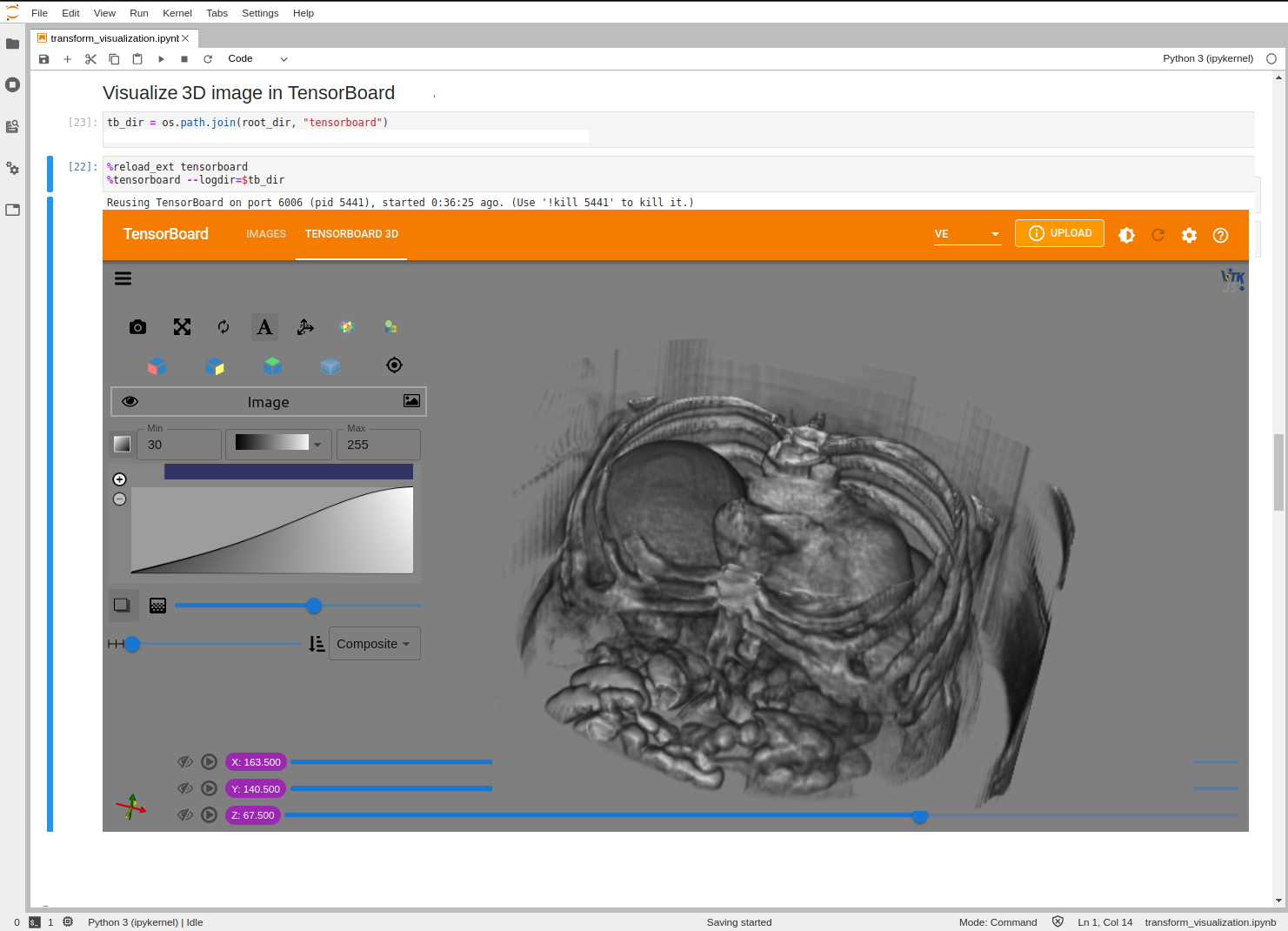

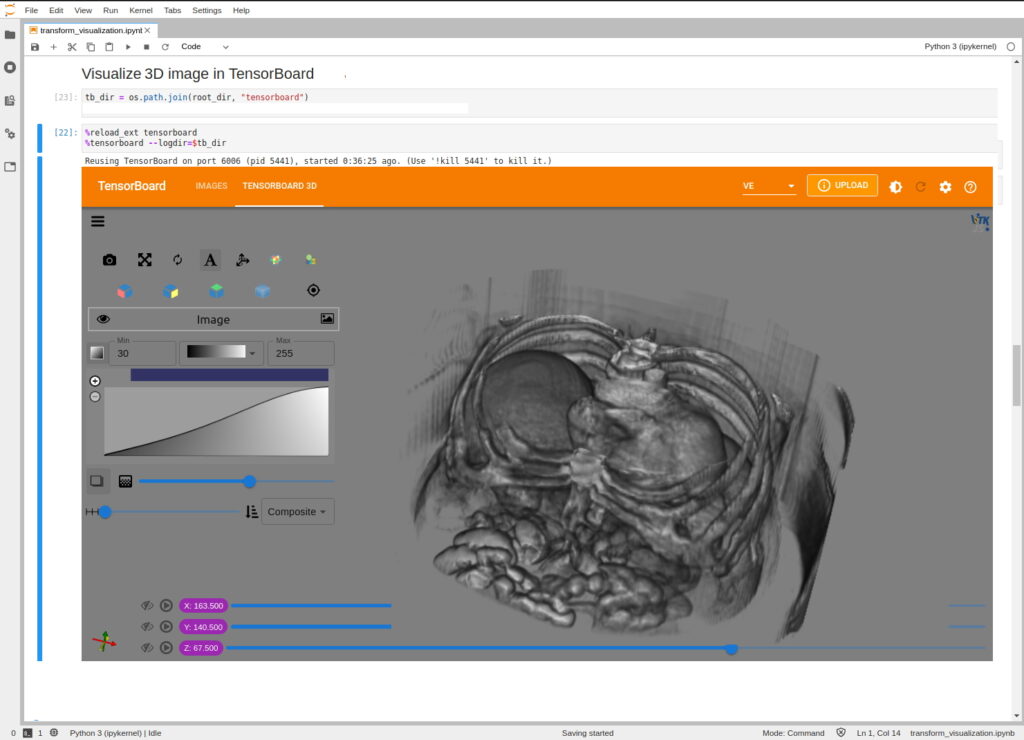

- 3D TensorBoard: TensorBoard (https://www.tensorflow.org/tensorboard) is arguably the most popular general purpose tool for visualizing deep learning experiments. It allows a researcher to look at multiple metrics over time as a network trains, and to visualize network outputs (e.g., image segmentation results). TensorBoard, however, only supports 2D image visualization, which is problematic for most medical imaging applications. To address this shortfall, we have created a TensorBoard plugin called “TensorBoard 3D” that is built using VTK.js. We plan on releasing this plugin in Q1 2022. By incorporating medical image visualization into this common deep learning tool, traditional (e.g., computer vision) deep learning researchers will be able to use their existing tools to explore medical imaging applications, and medical imaging researchers will be able to take advantage of the wealth of knowledge and techniques surrounding this popular tool.

Aim 3: Advanced multi-platform rendering, including AR/VR

“Virtual and augmented reality technologies are maturing in terms of reduced costs and increased capabilities. Combined with portable computing devices such as phones, point-of-care ultrasound transducers, and other biometric sensors; low-cost, visually-oriented applications are emerging to help monitor, guide, and deliver advanced healthcare.”

The three most significant accomplishments on this grant regarding Aim 3 during 2021 are the following:

- WebGPU: VTK.js was originally developed using WebGL to take advantage of local GPU capabilities; however, WebGPU is rapidly emerging as the successor to WebGL as the web standard for GPU access from the browser. We are pleased to announce that we anticipated this transition and have been working for over a year on updating VTK.js to WebGPU. This is a work in progress, but we are “ahead of the curve” in its adoption. This new standard provides improved performance and cross-browser compatibility. We have highlighted our VTK.js+WebGPU accomplishments as interactive examples on the web: https://kitware.github.io/vtk-js/docs/develop_webgpu.html

- WebXR: We are working towards a future in which web technologies are driving VR and AR displays in applications throughout the medical field. The explosion of consumer interest and investment in VR/AR technologies is going to make this future a reality faster than most people realize. As with our early investment in WebGPU, we are investing in the VR/AR future of the web by adopting WebXR as a primary component of VTK.js. WebXR is becoming the industry standard for web-based VR/AR technologies, with adoption by devices ranging from Meta’s Oculus Quest to Microsoft’s HoloLens. If you have a Quest, Vive, HoloLens, or similar device, you can see the integration of VTK.js into your AR/VR experience simply by visiting select VTK.js examples and clicking on the “Send to VR” button: https://kitware.github.io/vtk-js/examples/VR.html. Those examples show how VTK.js can be used to create a VR experience in only a few lines of code. Similarly, albeit under separate funding, the VR/AR capabilities of VTK have also been significantly expanded, with support for Unity, OpenXR, and HoloLens 2 added during 2021. As indicated by the plans for 2022 that are listed below, expect significantly more VR/AR work from Kitware in 2022, with the goal of making VR/AR a user-friendly and developer-friendly technology that provides significant value to a wide variety of existing and new clinical applications.

- Holographic Displays: We also continued and advanced our support for Looking Glass Factory’s Holographic Displays. As mentioned in last year’s report, this display provides a true 3D visualization for multiple viewers, without the need for any headgear or glasses. We have helped one company integrate these displays into their product that has been submitted for FDA approval. We recently helped Looking Glass Factory define their licensing terms so that their SDK is more compatible with open source efforts such as ours. We have completed the integration of these displays with 3D Slicer and ParaView. https://www.kitware.com/gain-new-insights-using-holographic-displays/

Aim 4: Infrastructure, Outreach, and Validation

“From its inception, the community has driven the success of VTK. As we introduce new technologies and infrastructure, we will engage the community regarding outreach and validation via videos and interactive web-based content. Also, we will work with our External Advisory Board and conduct annual surveys to ensure that we are meeting their needs. All work will be contributed to VTK using VTK’s permissive open-source license and high-quality software processes.”

Overall, our infrastructure and outreach efforts have been successful during 2021, but we know that we can do more!

We have worked very well with select members of our advisory board, and we are working to engage all of them more effectively. Additionally, we have made excellent progress with several new software releases, new tutorials, many new interactive examples and documentation, and commercial and academic adoption. These are briefly listed here, along with relevant links.

- VTK.js advanced from release v16 to release v21, with continuous improvements in its capabilities and its testing infrastructure throughout the year.

- VTK Release 9.1, in C++ and with significantly improved Python (including Python 3.9) support

- ParaView Glance releases spanned from v4.15 to v4.18.2

- New and updated VTK.js video tutorials

- VTK and VTK.js are central to many commercial medical image visualization applications that Kitware helped to deploy in 2021 and for more than 20 years prior. Most commercial developments are covered by non-disclosure agreements, but a few do allow public disclosure of their use of VTK; SonoVol and Zimmer, for example, are particularly strong public supporters of VTK.

- VTK.js has been chosen by the OHIF team to power its 3D visualizations. OHIF has become the standard web-based viewer used by NIH’s TCIA and in hundreds of clinical projects around the world.

Plans for 2022

As predicted by this grant proposal and indicated by our progress during 2021, we see 2022 as the year of VR/AR and AI.

Special emphasis will be placed on WebXR (AR/VR) technologies working with VTK.js. The future of AR/VR is here – consumer demand is going to rapidly advance the AR/VR field to the benefit of the medical imaging community. A similar cascade of technology happened in the 1990s, when consumer demand for computer games propelled the development of GPUs that made 3D medical image visualization commonplace. Our goal is to make VR/AR a commonplace, user-friendly, and developer-friendly technology that provides significant value to a wide variety of existing and new clinical applications: for diagnosis and monitoring using point-of-care ultrasound in austere environments as well as in homes and hospitals, for planning and guiding interventions by experts and novices, and for training and simulation, particularly in collaboration with Pulse, iMSTK, and Kitware’s other open source platforms.

We will also give additional attention to generating tutorials and applications that are both functional and excellent demonstrations of best practices. ParaView Glance is one example of a functional/demonstration VTK.js application that we created in 2020. Its popularity as an end-user application and as a demonstration application has steadily grown. In 2022, we will create demonstrations/applications that not only emphasize VTK.js and WebXR, but also trame and AI methods, such as our ongoing Jupyter notebook and MONAI integrations via itkWidgets and the soon-to-be-released 3D TensorBoard plug-in.

We also welcome community feedback on how we can improve VTK and VTK.js. Please join us on our Discourse forum (https://discourse.vtk.org/) to share your ideas, kudos, and questions.

Enjoy VTK!