3D reconstruction from satellite images

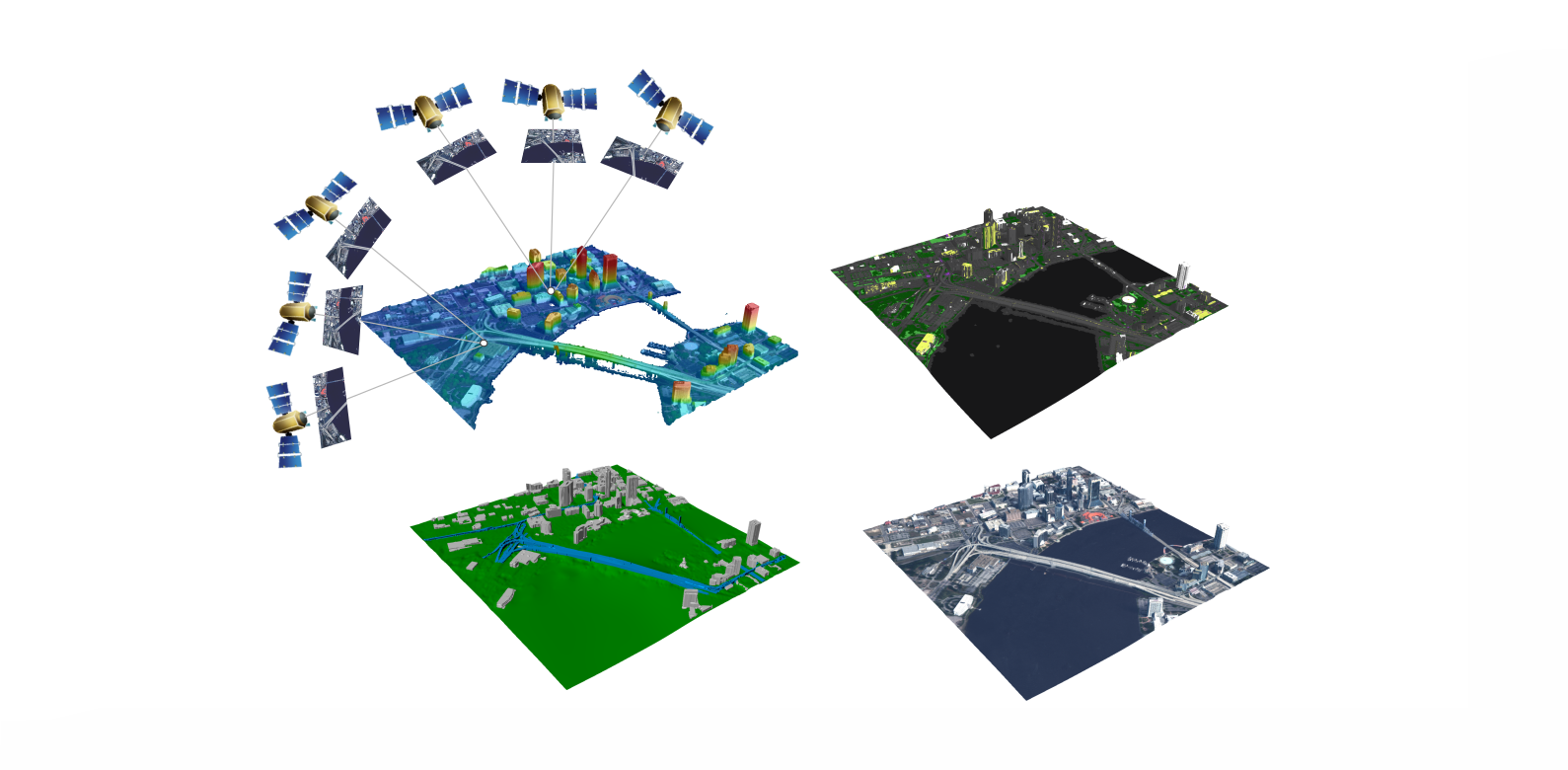

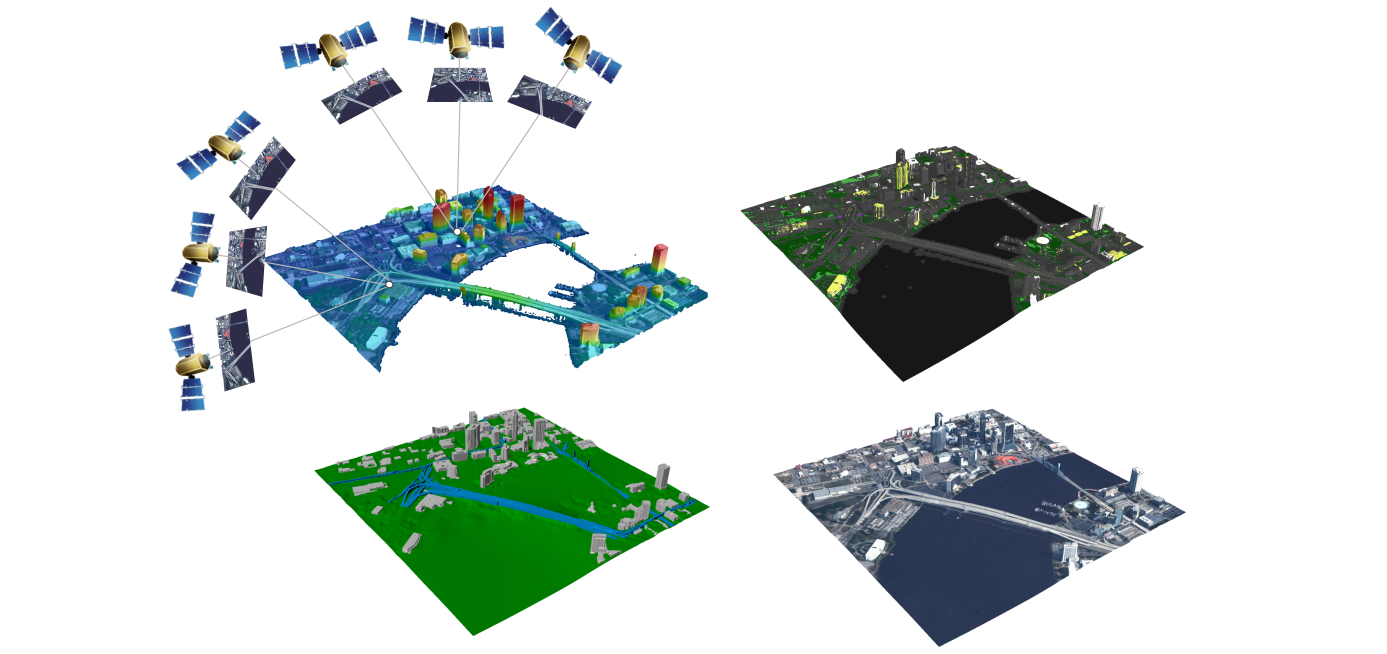

In this blog post, we present our end-to-end system, with a web-based user interface, for reconstructing 3D buildings from satellite images.

CORE3D program

These tools were developed as part of the IARPA CORE3D program (Creation of Operationally Realistic 3D Environment), which focused on the automatic generation of urban 3D models from satellite imagery.

The goal of the research was to move beyond point clouds and dense surface meshes and instead construct models from simplified geometric primitives, much as a human might build a city model in a 3D modeling application. A second goal was to identify the material properties of the surfaces.

The work was carried out in close collaboration between industry and academia. Our team included Kitware Inc & SAS, Raytheon IIS & SAS, Columbia University, Purdue University, and Rutgers University.

System Overview

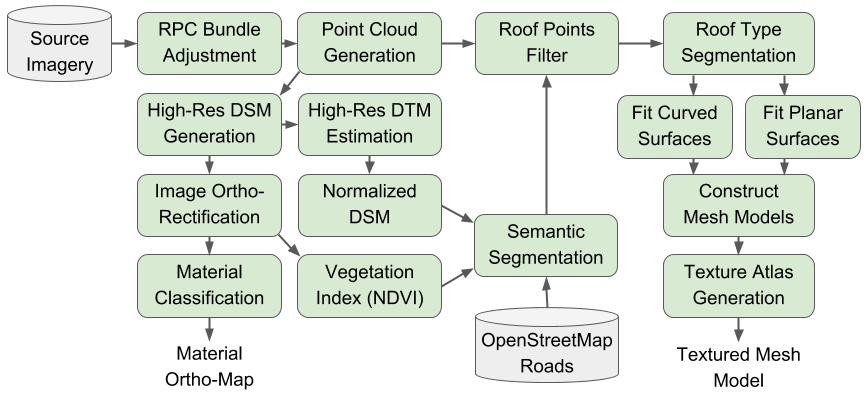

The rational polynomial coefficient (RPC) camera parameters are first refined using RPC Bundle Adjustment, and a Dense Point Cloud is then generated using multi-view stereo algorithms. This point cloud is used to generate a Digital Surface Model (DSM), a Digital Terrain Model (DTM), and ortho-rectified images.
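To make the point cloud to DSM step more concrete, here is a minimal sketch that grids points into a raster by keeping the highest point in each cell. It is not the Danesfield implementation: the function name `rasterize_dsm`, the `cell_size` parameter, and the max-height rule are assumptions chosen for illustration, and a production pipeline would also handle map projections, outliers, and hole filling.

```python
import numpy as np


def rasterize_dsm(points, cell_size, nodata=np.nan):
    """Grid an (N, 3) array of x, y, z points into a max-height raster.

    Hypothetical helper for illustration only. Row 0 is the northern
    (largest y) edge, as in a typical north-up raster.
    """
    points = np.asarray(points, dtype=float)
    x, y, z = points[:, 0], points[:, 1], points[:, 2]

    # Map each point to a (row, col) cell index.
    x_min, y_max = x.min(), y.max()
    cols = np.floor((x - x_min) / cell_size).astype(int)
    rows = np.floor((y_max - y) / cell_size).astype(int)

    # Start from -inf so that any real height replaces it.
    dsm = np.full((rows.max() + 1, cols.max() + 1), -np.inf)

    # Unbuffered maximum: several points may fall in the same cell.
    np.maximum.at(dsm, (rows, cols), z)

    # Cells that received no point are marked as nodata.
    dsm[np.isinf(dsm)] = nodata
    return dsm
```

Keeping the maximum height per cell favors roofs and tree tops over the ground, which is what distinguishes a surface model from a terrain model.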

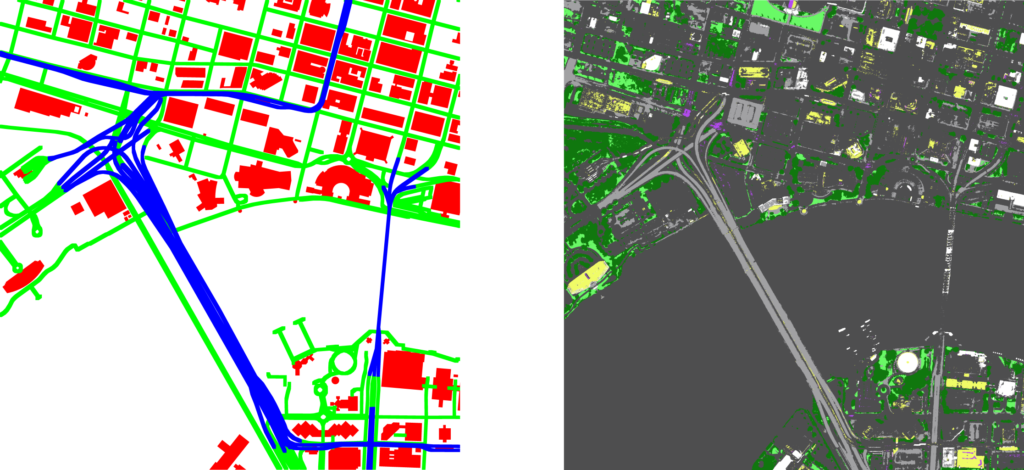

Deep-learning-based methods were developed for Semantic Segmentation of buildings and Material Classification on the multispectral rectified images.

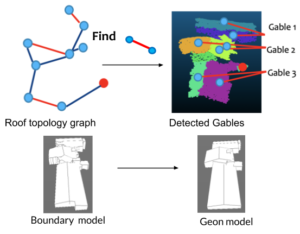

The point clouds are filtered to keep only roof points, which are then segmented and classified into roof types (flat, sloped, domed, arched). Using the points of a building and its roof type, we construct a simplified Mesh Model. Finally, textures are generated for the building roofs and facades.
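One common way to split roof points into planar faces is RANSAC plane fitting, which the Innovations list in this post mentions in a multi-cue, robust weighted form. The sketch below is plain, unweighted RANSAC for a single plane, shown only to illustrate the idea. The function name `fit_plane_ransac` and its parameters are hypothetical and do not come from the Danesfield code.

```python
import numpy as np


def fit_plane_ransac(points, threshold=0.1, iterations=200, seed=0):
    """Fit one plane to an (N, 3) point array with plain RANSAC.

    Returns ((normal, offset), inlier_mask), where the plane satisfies
    normal . p + offset = 0. Illustrative sketch, not the weighted
    multi-cue variant described in the post.
    """
    rng = np.random.default_rng(seed)
    points = np.asarray(points, dtype=float)
    best_inliers = np.zeros(len(points), dtype=bool)
    best_model = None

    for _ in range(iterations):
        # Three distinct points define a candidate plane.
        sample = points[rng.choice(len(points), size=3, replace=False)]
        normal = np.cross(sample[1] - sample[0], sample[2] - sample[0])
        norm = np.linalg.norm(normal)
        if norm < 1e-12:
            continue  # collinear sample, no unique plane
        normal = normal / norm
        offset = -normal.dot(sample[0])

        # Points closer than the threshold support this candidate.
        distances = np.abs(points @ normal + offset)
        inliers = distances < threshold
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
            best_model = (normal, offset)

    return best_model, best_inliers
```

For a roof with several faces, one simple strategy is to remove the inliers of the best plane and run the fit again on the remaining points. The angle between the fitted normal and the vertical axis then gives a rough hint of whether a face is flat or sloped.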

The Danesfield Web App

The Danesfield App is the web-based user interface for the system. It provides user, data, and job management. Users can interactively select the “area of interest” to process and visualize the 2D results on a map as well as geo-referenced textured 3D models. Processing of specific areas is thus done on-demand.

Metrics

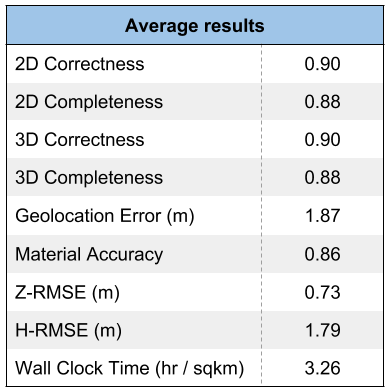

The final system was deployed on a single GPU-enabled AWS instance (p3.2xlarge):

- 8 vCPU, 61 GB Main Memory

- NVIDIA Tesla V100, 16 GB GPU Memory

- Average processing time and cost per km²: 3.26 hours / $9.98
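As a quick sanity check, the two averages above imply an hourly instance rate, and they can be scaled to estimate a larger area. The snippet below is back-of-the-envelope arithmetic only. It assumes time and cost grow linearly with area, which the post does not claim, and the name `estimate` is hypothetical.

```python
HOURS_PER_SQKM = 3.26  # average processing time per square kilometer, from the post
COST_PER_SQKM = 9.98   # average cost in USD per square kilometer, from the post

# Hourly rate implied by the two averages above (about 3.06 USD per hour).
implied_hourly_rate = COST_PER_SQKM / HOURS_PER_SQKM


def estimate(area_sqkm):
    """Return (hours, cost_usd) for an area, assuming linear scaling."""
    return area_sqkm * HOURS_PER_SQKM, area_sqkm * COST_PER_SQKM


hours, cost = estimate(10)
print(f"10 sq km: about {hours:.1f} hours and ${cost:.2f}")
print(f"implied rate: ${implied_hourly_rate:.2f} per hour")
```

The implied rate of roughly $3.06 per hour appears to match the on-demand price of a p3.2xlarge instance at the time. Treat that as an assumption, since AWS pricing varies by region and date.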

Public examples of multi-view WorldView-3 (WV3) imagery are available from SpaceNet. The results were evaluated using metrics and ground truth data provided by the Johns Hopkins University Applied Physics Laboratory.

All the algorithms and the web application from Kitware and the university partners are released as open source under the Apache 2.0 License, and the sources can be found in their respective GitHub repositories: Danesfield and Danesfield app. However, the RPC bundle adjustment and point cloud generation system is a commercial package from Raytheon, called Intersect Dimension, which is licensed to the US Government. US Government users can request a prebuilt Amazon Machine Image (AMI) to try out Danesfield. Other users can apply the open source algorithms to their own data.

To provide a fully open source end-to-end system in the future, we have begun adapting Kitware’s open source multi-view stereo algorithms to the satellite imagery domain.

Innovations

The development of these tools led to several innovations.

- Bundle adjustment and point cloud improvements (RSM BA, multi-template matching, view clustering, DENSR)

- Atmospheric calibration using BRDFs

- BRDF modeling of outdoor building materials

- Novel methods for material classification (reflectance residuals, angular histograms)

- Comparisons and combinations of deep networks for multi-class semantic segmentation

- Building point detection with hole filling and detail-preserving smoothing

- Roof type segmentation with PointNet++ and a novel training procedure

- Roof plane segmentation with multi-cue robust weighted RANSAC

- Roof topology and meshing using a roof topology graph

- Texture mapping of facades with shadow and occlusion reasoning

- Deployed system with job management and a web UI with 3D model visualization

Interested?

Do not hesitate to contact us. We can adapt our CORE3D work and develop your custom solution.

The research is based upon work supported by the Office of the Director of National Intelligence (ODNI), Intelligence Advanced Research Projects Activity (IARPA), via Department of Interior/Interior Business Center (DOI/IBC) contract number D17PC00286. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies or endorsements, either expressed or implied, of the ODNI, IARPA, or the U.S. Government.
