3D reconstruction from smartphone videos

In this blog post, we show how tools initially developed for aerial video can be used for general 3D object reconstruction. These tools are completely open source and let you process your data locally, which keeps it private.

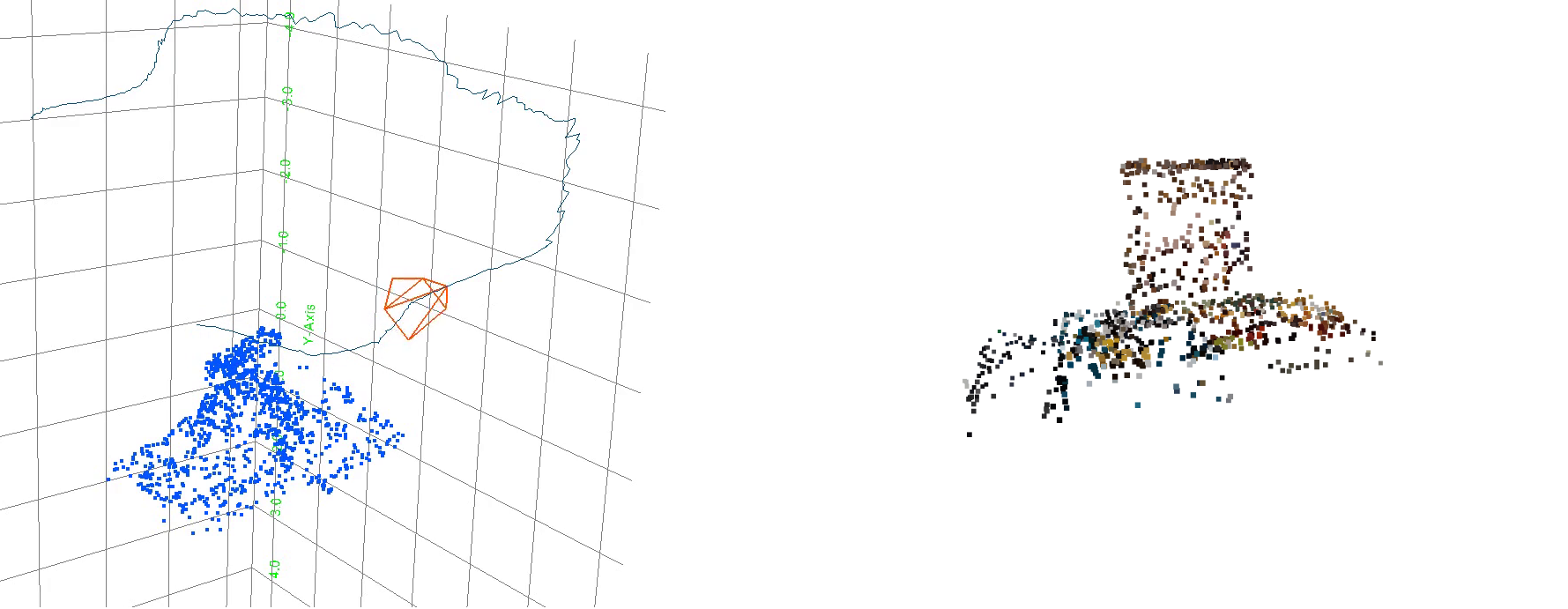

We first estimate the camera poses and obtain a sparse reconstruction. Then, a dense model is produced in the form of a mesh with a high-resolution texture.

The Software – A Brief History

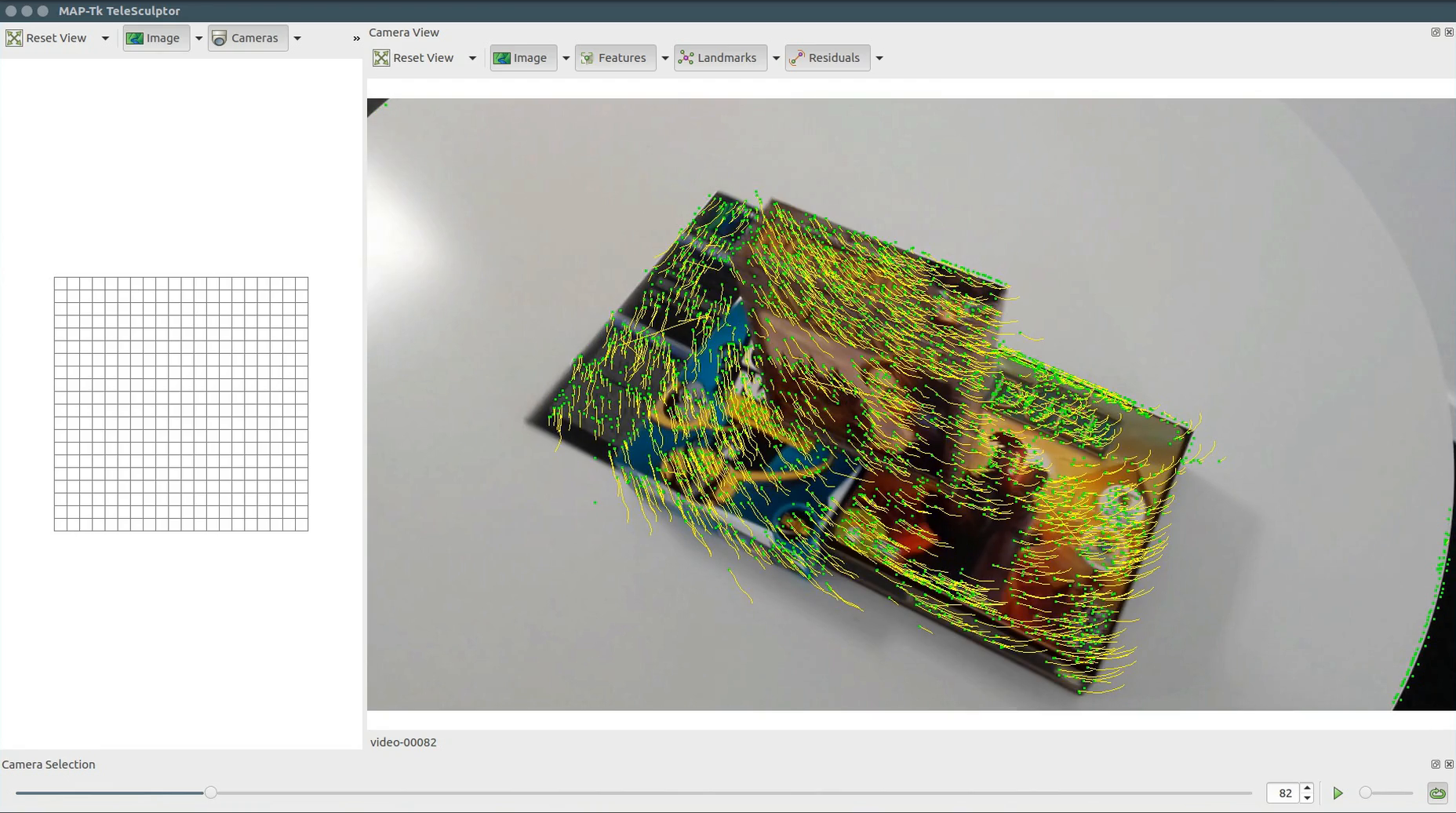

MAP-Tk / TeleSculptor

Let’s start with some history on the software used in this post. MAP-Tk (Motion-imagery Aerial Photogrammetry Toolkit) started as an open source C++ collection of libraries and tools for making measurements from aerial video. The initial capability focused on estimating the camera flight trajectory and a sparse 3D point cloud of a scene. Over time, additional features have been added, like dense depth map estimation and fusion of depth maps into full 3D mesh models. A graphical desktop application named TeleSculptor was built on top of MAP-Tk to provide an easier user interface and visualization of results. While aerial video is still a common use case today (you can explore such results here), MAP-Tk has expanded to support more general video. This blog post provides an example of using the MAP-Tk algorithms and the TeleSculptor application to build a model from mobile phone video.

Becoming KWIVER

The software architecture and algorithms developed for MAP-Tk are highly modular and configurable. The MAP-Tk algorithm abstraction layer provides seamless interchange and run-time selection of algorithms from various other open source projects like OpenCV, VXL, Ceres Solver, and PROJ4. This framework was so useful that it became the foundation for KWIVER, Kitware’s computer vision toolkit, which handles a much broader array of vision tasks. Today, all of the software infrastructure and photogrammetry algorithms developed for MAP-Tk reside within KWIVER. Only the TeleSculptor application remains in the original MAP-Tk repository.

Since MAP-Tk has evaporated away leaving TeleSculptor behind, we will soon be renaming the MAP-Tk repository to “TeleSculptor” to make its contents more obvious. Don’t worry, nothing is going away except the “MAP-Tk” name. KWIVER will provide direct access to all of the algorithms and command line tools for developers and power users. TeleSculptor will provide a more intuitive graphical interface for 3D reconstruction using KWIVER.

Reconstruction Pipeline

The only input needed is a video or a set of images showing a scene from different angles. For this example, we recorded a video while walking around our scene.

We first use KWIVER to track image features. A feature is the 2D image coordinates of a distinguishable point in the scene that appears repeatedly across images. The software detects these features and associates them across multiple images forming a feature track. Each feature track has the potential to become a 3D landmark in the next step in the pipeline.
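To make the notion of a feature track concrete, here is a minimal Python sketch that chains frame-to-frame matches into tracks. It is an illustration only, not KWIVER's tracker: the function name `build_tracks` and the input layout (one list of index pairs per consecutive frame pair) are assumptions made for this example.

```python
def build_tracks(matches_per_pair):
    """Chain frame-to-frame matches into feature tracks.

    matches_per_pair[i] holds (a, b) pairs meaning that feature `a` in
    frame i was matched to feature `b` in frame i + 1.
    Returns a list of tracks; each track is a list of (frame, feature) pairs.
    """
    tracks = []
    open_tracks = {}  # feature index in the current frame -> its track
    for frame, matches in enumerate(matches_per_pair):
        next_open = {}
        for a, b in matches:
            track = open_tracks.get(a)
            if track is None:
                # Feature `a` was not part of any track yet: start a new one.
                track = [(frame, a)]
                tracks.append(track)
            track.append((frame + 1, b))
            next_open[b] = track
        # Tracks that were not extended in this step are implicitly closed.
        open_tracks = next_open
    return tracks


# Hypothetical matches between four consecutive frames.
example_matches = [
    [(0, 1), (2, 0)],  # frame 0 -> frame 1
    [(1, 3)],          # frame 1 -> frame 2
    [(3, 2), (5, 5)],  # frame 2 -> frame 3
]
example_tracks = build_tracks(example_matches)
```

In this toy input, the first track spans all four frames, which is the kind of long track that tends to yield a well-constrained 3D landmark.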

The high modularity of KWIVER enables the user to choose between different types of feature detectors, descriptors, and tracking methods. We obtained our results with Speeded Up Robust Features (SURF) for detection and description. We used fundamental-matrix-based filtering to detect and discard geometrically inconsistent outlier tracks.
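The idea behind fundamental matrix filtering can be sketched in a few lines of Python. For a fundamental matrix F, a point x1 in the first image maps to an epipolar line F·x1 in the second image, and a correct match must lie close to that line. The sketch below assumes F is already known, whereas in practice it is estimated robustly from the matches themselves. The function names and the 1-pixel threshold are assumptions for illustration, not KWIVER settings.

```python
import math


def epipolar_distance(F, p1, p2):
    """Distance in pixels from p2 to the epipolar line F * p1."""
    x1 = (p1[0], p1[1], 1.0)  # homogeneous coordinates
    a, b, c = (sum(F[r][k] * x1[k] for k in range(3)) for r in range(3))
    # Line is a*u + b*v + c = 0; assumes a and b are not both zero.
    return abs(a * p2[0] + b * p2[1] + c) / math.hypot(a, b)


def filter_matches(F, matches, max_dist=1.0):
    """Keep only the matches consistent with the epipolar geometry F."""
    return [(p1, p2) for p1, p2 in matches
            if epipolar_distance(F, p1, p2) <= max_dist]


# F for a purely horizontal camera translation: matched points must
# share the same image row, so the distance reduces to |v1 - v2|.
F_horizontal = [[0.0, 0.0, 0.0],
                [0.0, 0.0, -1.0],
                [0.0, 1.0, 0.0]]

candidate_matches = [
    ((10.0, 20.0), (15.0, 20.3)),  # consistent: rows differ by 0.3 px
    ((30.0, 40.0), (35.0, 47.0)),  # outlier: rows differ by 7 px
    ((5.0, 5.0), (9.0, 5.0)),      # consistent: same row
]
inlier_matches = filter_matches(F_horizontal, candidate_matches)
```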

A user can select and configure different algorithms with a configuration file, as shown below. However, the default settings provided with TeleSculptor are a good starting point for most applications.

    # Algorithm to use for 'descriptor_extractor'.
    # Must be one of the following options:
    #   - ocv_BRISK :: OpenCV feature-point descriptor extraction via the BRISK algorithm
    #   - ocv_ORB :: OpenCV feature-point descriptor extraction via the ORB algorithm
    #   - ocv_BRIEF :: OpenCV feature-point descriptor extraction via the BRIEF algorithm
    #   - ocv_DAISY :: OpenCV feature-point descriptor extraction via the DAISY algorithm
    #   - ocv_FREAK :: OpenCV feature-point descriptor extraction via the FREAK algorithm
    #   - ocv_LATCH :: OpenCV feature-point descriptor extraction via the LATCH algorithm
    #   - ocv_LUCID :: OpenCV feature-point descriptor extraction via the LUCID algorithm
    #   - ocv_SIFT :: OpenCV feature-point descriptor extraction via the SIFT algorithm
    #   - ocv_SURF :: OpenCV feature-point descriptor extraction via the SURF algorithm
    feature_tracker:core:descriptor_extractor:type = ocv_SURF

    # Extended descriptor flag (true - use extended 128-element descriptors; false -
    # use 64-element descriptors).
    feature_tracker:core:descriptor_extractor:ocv_SURF:extended = false

    # Threshold for hessian keypoint detector used in SURF
    feature_tracker:core:descriptor_extractor:ocv_SURF:hessian_threshold = 100

    # Number of octave layers within each octave.
    feature_tracker:core:descriptor_extractor:ocv_SURF:n_octave_layers = 3

    # Number of pyramid octaves the keypoint detector will use.
    feature_tracker:core:descriptor_extractor:ocv_SURF:n_octaves = 4

    # Up-right or rotated features flag (true - do not compute orientation of
    # features; false - compute orientation).
    feature_tracker:core:descriptor_extractor:ocv_SURF:upright = false

    # Extended descriptor flag (true - use extended 128-element descriptors; false -
    # use 64-element descriptors).
    feature_tracker:core:feature_detector:ocv_SURF:extended = false

    ...

    # Algorithm to use for 'feature_matcher'.
    # Must be one of the following options:
    #   - ocv_brute_force :: OpenCV feature matcher using brute force matching (exhaustive search).
    #   - ocv_flann_based :: OpenCV feature matcher using FLANN (Approximate Nearest Neighbors).
    #   - fundamental_matrix_guided :: Use an estimated fundamental matrix as a geometric filter to remove outlier matches.
    #   - homography_guided :: Use an estimated homography as a geometric filter to remove outlier matches.
    #   - vxl_constrained :: Use VXL to match descriptors under the constraints of similar geometry (rotation, scale, position).
    feature_tracker:core:feature_matcher:type = fundamental_matrix_guided

    feature_tracker:core:feature_matcher:fundamental_matrix_guided:feature_matcher:type = ocv_flann_based

    # Confidence that estimated matrix is correct, range (0.0, 1.0]
    feature_tracker:core:feature_matcher:fundamental_matrix_guided:fundamental_matrix_estimator:ocv:confidence_threshold = 0.99

    # Algorithm to use for 'fundamental_matrix_estimator'.
    # Must be one of the following options:
    #   - ocv :: Use OpenCV to estimate a fundimental matrix from feature matches.
    #   - vxl :: Use VXL (vpgl) to estimate a fundamental matrix.
    feature_tracker:core:feature_matcher:fundamental_matrix_guided:fundamental_matrix_estimator:type = ocv

    ...
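The colon-separated keys in this configuration form a hierarchy: each nested algorithm gets its own scope, and a `type` entry selects which implementation is active in that scope. The Python sketch below reads such a file into nested dictionaries to make that structure visible. It is a simplified reader written for this post, not KWIVER's own configuration parser, and it assumes one `key = value` entry per line with `#` starting a comment.

```python
def parse_config(text):
    """Parse 'a:b:c = value' lines into nested dictionaries."""
    config = {}
    for raw_line in text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments
        if not line or "=" not in line:
            continue  # skip blank lines and elisions such as "..."
        key, value = (part.strip() for part in line.split("=", 1))
        *scopes, leaf = key.split(":")
        node = config
        for scope in scopes:
            node = node.setdefault(scope, {})
        node[leaf] = value  # values are kept as strings
    return config


sample_config = """
# Algorithm to use for 'descriptor_extractor'.
feature_tracker:core:descriptor_extractor:type = ocv_SURF
feature_tracker:core:descriptor_extractor:ocv_SURF:hessian_threshold = 100
...
feature_tracker:core:feature_matcher:type = fundamental_matrix_guided
"""

parsed = parse_config(sample_config)
extractor = parsed["feature_tracker"]["core"]["descriptor_extractor"]
active = extractor["type"]            # which implementation is selected
active_options = extractor[active]    # options for that implementation
```

Looking up the options through the value of `type` mirrors how the selected algorithm only reads the settings in its own scope.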

KWIVER then estimates the camera poses corresponding to each frame and triangulates 3D landmarks using structure-from-motion (SfM) algorithms. Again, a configuration file specifies options, such as initial values for the camera intrinsic parameters (e.g. focal length), or the method used to bootstrap the camera’s locations.
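To illustrate how camera poses, the focal length, and a feature track combine into a 3D landmark, here is a small Python sketch of two-view triangulation using the midpoint method. This is a deliberately simple stand-in and not necessarily what KWIVER does internally. It assumes two cameras with identity rotation, a shared focal length and principal point, and camera centers that are already known. All names and numbers are hypothetical.

```python
def pixel_to_ray(pixel, focal, principal_point):
    """Back-project a pixel to a ray direction (identity camera rotation)."""
    u, v = pixel
    cx, cy = principal_point
    return ((u - cx) / focal, (v - cy) / focal, 1.0)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def triangulate_midpoint(c1, d1, c2, d2):
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2."""
    w = tuple(p - q for p, q in zip(c1, c2))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b  # zero only when the rays are parallel
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = tuple(o + s * k for o, k in zip(c1, d1))
    p2 = tuple(o + t * k for o, k in zip(c2, d2))
    return tuple((x + y) / 2.0 for x, y in zip(p1, p2))


# Two cameras one unit left and right of the origin, both looking along +z.
focal, principal_point = 1000.0, (640.0, 360.0)
center_left, center_right = (-1.0, 0.0, 0.0), (1.0, 0.0, 0.0)

# Pixel observations of the same landmark in each image.
ray_left = pixel_to_ray((840.0, 360.0), focal, principal_point)
ray_right = pixel_to_ray((440.0, 360.0), focal, principal_point)

landmark = triangulate_midpoint(center_left, ray_left, center_right, ray_right)
```

With these values the landmark lands 5 units in front of the cameras, midway between them. A wrong initial focal length would tilt both rays and shift the estimated depth, which is why the initial intrinsics in the configuration matter.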

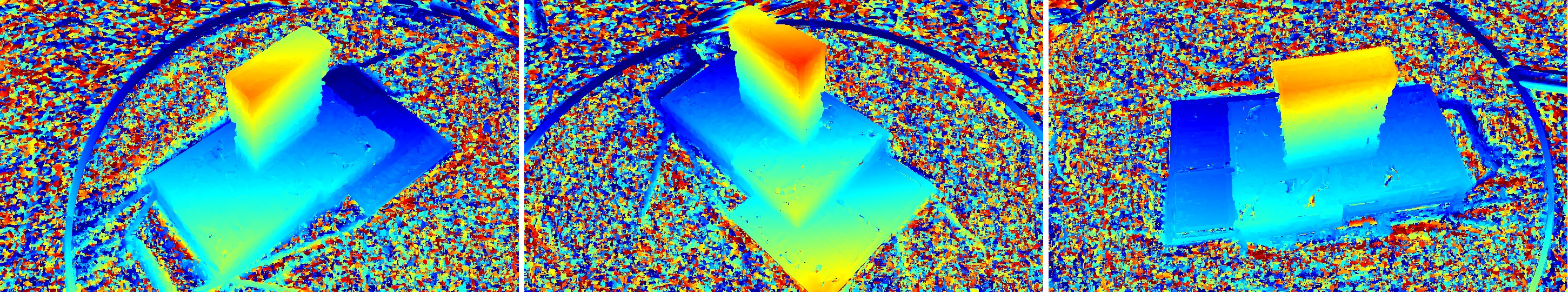

To produce a denser reconstruction, we used a fork of PlaneSweepLib. PlaneSweepLib is a CUDA-accelerated depth map estimation library developed by ETH Zurich. Our fork can directly use the camera calibration from MAP-Tk as input and write the VTI format required by the next step. An upcoming release of TeleSculptor will include an alternative algorithm for dense reconstruction that is implemented directly in KWIVER and is not subject to GPL licensing restrictions like PlaneSweepLib.
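The principle of plane sweeping can be shown with a toy one-dimensional example in Python. For each candidate depth, the second image is shifted as if the whole scene were at that depth, and each pixel keeps the depth whose shifted neighborhood matches best. This sketch assumes a rectified image pair, where a depth hypothesis reduces to a horizontal shift of `focal * baseline / depth` pixels. PlaneSweepLib itself works on full 2D images with general camera poses on the GPU, so this is only a conceptual sketch with hypothetical names and data.

```python
def plane_sweep_1d(ref, other, depths, focal_times_baseline, half_window=1):
    """Pick, per pixel of `ref`, the depth hypothesis with the lowest cost."""
    best_depth = [None] * len(ref)
    best_cost = [float("inf")] * len(ref)
    for depth in depths:
        shift = round(focal_times_baseline / depth)  # disparity in pixels
        for x in range(len(ref)):
            cost, valid = 0.0, True
            for dx in range(-half_window, half_window + 1):
                xr, xo = x + dx, x + dx - shift
                if not (0 <= xr < len(ref) and 0 <= xo < len(other)):
                    valid = False  # window falls outside one of the images
                    break
                cost += abs(ref[xr] - other[xo])  # sum of absolute differences
            if valid and cost < best_cost[x]:
                best_cost[x], best_depth[x] = cost, depth
    return best_depth


# Synthetic scanlines: `other` sees the same intensities 4 pixels to the left,
# which corresponds to depth 2 when focal * baseline = 8.
ref_line = [3, 9, 1, 7, 4, 8, 2, 6, 5, 0, 7, 3, 9, 2, 8, 1]
other_line = ref_line[4:] + [0, 0, 0, 0]

depth_line = plane_sweep_1d(ref_line, other_line, depths=[1, 2, 4, 8],
                            focal_times_baseline=8)
```

Pixels near the image border stay undecided or pick a wrong hypothesis because the matching window leaves the image, which is one reason real pipelines combine depth maps from many views.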

We fuse the registered depth maps using a truncated signed distance function (TSDF) voxel volume. The fusion is highly parallelizable and implemented for the GPU with CUDA.
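The following Python sketch shows the core of TSDF fusion on a single line of voxels along one viewing ray. Each depth observation contributes a truncated signed distance to every voxel it can see, the contributions are averaged, and the surface is recovered where the averaged value crosses zero. The real fusion operates on a 3D voxel grid in CUDA; this 1D CPU version, its function names, and its equal per-observation weights are assumptions made to keep the example short.

```python
def fuse_depths(voxel_depths, observations, trunc):
    """Average truncated signed distances from several depth observations."""
    tsdf = [1.0] * len(voxel_depths)
    weight = [0] * len(voxel_depths)
    for depth in observations:
        for i, z in enumerate(voxel_depths):
            sdf = depth - z  # positive in front of the surface, negative behind
            if sdf < -trunc:
                continue  # far behind the surface: this view says nothing
            value = min(1.0, sdf / trunc)
            # Running average with one unit of weight per observation.
            tsdf[i] = (weight[i] * tsdf[i] + value) / (weight[i] + 1)
            weight[i] += 1
    return tsdf, weight


def zero_crossing(voxel_depths, tsdf, weight):
    """Locate the surface by linear interpolation between observed voxels."""
    for i in range(len(tsdf) - 1):
        observed = weight[i] > 0 and weight[i + 1] > 0
        if observed and tsdf[i] > 0.0 >= tsdf[i + 1]:
            t = tsdf[i] / (tsdf[i] - tsdf[i + 1])
            return voxel_depths[i] + t * (voxel_depths[i + 1] - voxel_depths[i])
    return None


voxel_depths = [0.5 * i for i in range(9)]  # voxel centers from 0.0 to 4.0
noisy_depths = [2.1, 2.2, 2.3]              # three noisy depth observations
tsdf, weight = fuse_depths(voxel_depths, noisy_depths, trunc=1.0)
surface = zero_crossing(voxel_depths, tsdf, weight)
```

Each voxel is updated independently of its neighbors, which is what makes the fusion easy to parallelize on a GPU.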

The final step is texture mapping. This enhances the visual aspect of the final reconstruction with a high-resolution texture created from a set of input images covering each side of the scene.

All these tools are available for free and are accessible through command-line programs. Most, but not all, of the steps of this pipeline are also integrated into the TeleSculptor application for easier use. An upcoming release of TeleSculptor, currently undergoing testing, will include the entire end-to-end pipeline in the GUI with no requirement to use the command line.

The total computation time was less than 20 minutes for the complete pipeline. In total, accounting for human interaction, it took less than one wall-clock hour.

Computation time details:

- Feature tracking: ~5 min

- Pose estimation and sparse reconstruction: ~6 min

- Depth map estimation: ~8 min

- Depth map fusion: ~10 s

- Texture mapping: ~30s
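As a quick sanity check, the approximate stage timings above can be summed in a few lines of Python. The figures are the rounded values from the list, so the result is only indicative.

```python
# Approximate stage durations from the list above, in seconds.
stage_seconds = {
    "feature tracking": 5 * 60,
    "pose estimation and sparse reconstruction": 6 * 60,
    "depth map estimation": 8 * 60,
    "depth map fusion": 10,
    "texture mapping": 30,
}

total_seconds = sum(stage_seconds.values())
minutes, seconds = divmod(total_seconds, 60)
print(f"total: {minutes} min {seconds} s")  # total: 19 min 40 s

# Share of the total spent in each stage.
for stage, secs in stage_seconds.items():
    print(f"{stage}: {100 * secs / total_seconds:.0f}%")
```

The sum stays just under the 20 minutes quoted above, and depth map estimation is the single most expensive stage.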

The KWIVER abstract APIs make it easy to add other algorithms or implementations. Indeed, the upcoming versions of KWIVER (algorithms) and TeleSculptor (reconstruction GUI) will have the CudaDepthMapIntegration built in, as well as our DTAM-like alternative to PlaneSweepLib. Stay tuned!