Catalyst2: GPU resident workflows

We have developed a proof of concept for accelerated scientific workflows with ParaView-Catalyst2 such that the mesh data never leaves the GPU!

Accelerated algorithms, ray tracing, and AI models are rapidly gaining popularity. This makes data residency increasingly important: the less data moves between host and device, the better, especially in High Performance Computing (HPC). Consider a workflow that combines in-situ visualization, ray tracing, and the training of a machine learning model that guides the simulation. Since most AI models train on powerful GPUs that are also capable of hardware-accelerated ray tracing, it is highly beneficial for Catalyst2 pipelines to keep data on the GPU, where an AI model or a ray tracer can access it directly. To meet these requirements, we built a proof of concept that runs a Catalyst2 pipeline entirely on the GPU, which removes host-device copies from the processing path. While this development is promising, some edge cases are still being refined.

Pass GPU data structures to Catalyst2

Supporting GPU-resident workflows in ParaView posed a significant challenge. Most of the work went into adapting vtkConduitSource, the VTK reader that converts Conduit mesh blueprints into the VTK data model, so that it can ingest data pointers from external memory spaces. In the context of GPU programming models such as CUDA, an external memory space is memory that lies outside the host system, typically on the graphics card. The difficulty is that dereferencing such a pointer from host code causes an access violation, so the reader cannot simply read the arrays as it would for host memory. Typically, a simulation populates the mesh blueprint with pointers that reside in an unmanaged Kokkos or CUDA address space.

Our solution uses the ArrayHandle infrastructure from VTK-m to wrap these external pointers for safe and efficient use. VTK-m supports many different kinds of accelerated devices, and ParaView benefits from it through the VTK-m to VTK bridge and VTK-m filter overrides. You can read more about VTK-m filters in ParaView on our blog.
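To make the idea of wrapping an external pointer more concrete, here is a minimal, self-contained sketch. It is not the VTK-m ArrayHandle API: the names MemorySpace, ExternalView, host_at, device_pointer, and WrapHost are all hypothetical and exist only for illustration. The sketch assumes that a wrapper needs three properties: it does not copy the buffer, it does not free it, and it refuses host-side element access when the buffer does not live on the host.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical tag for where a buffer lives; not a VTK-m type.
enum class MemorySpace { Host, Cuda };

// Non-owning view over memory allocated elsewhere (e.g. by a simulation).
// It never copies or frees the buffer; it only remembers where it lives.
template <typename T>
class ExternalView
{
public:
  ExternalView(T* data, std::size_t count, MemorySpace space)
    : Data(data), Count(count), Space(space)
  {
    assert(data != nullptr || count == 0);
  }

  std::size_t size() const { return Count; }
  MemorySpace space() const { return Space; }

  // Raw pointer for code that runs in the same memory space (e.g. a kernel).
  T* device_pointer() const { return Data; }

  // Host-side element access is only legal for host-resident buffers.
  T& host_at(std::size_t i) const
  {
    if (Space != MemorySpace::Host)
      throw std::logic_error("buffer is not host-accessible");
    if (i >= Count)
      throw std::out_of_range("index out of range");
    return Data[i];
  }

private:
  T* Data;
  std::size_t Count;
  MemorySpace Space;
};

// Example helper: wrap a host vector without copying it.
inline ExternalView<float> WrapHost(std::vector<float>& v)
{
  return ExternalView<float>(v.data(), v.size(), MemorySpace::Host);
}
```

A view tagged as MemorySpace::Cuda would hand its pointer to device code through device_pointer() and throw if host code tried to read an element, which is the kind of guard that avoids the access violations described above.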

This approach allowed simulations to seamlessly send pointers related to mesh coordinates, connectivity, and fields directly to vtkConduitSource (vtk/vtk!10770). Simulations can send these pointers by populating nodes in the conduit mesh blueprint. Additionally, the simulations are required to specify the memory space of these pointers with a string, a small but vital detail for the system’s functionality. This identifier serves the purpose of informing VTK-m about the device adapter where the pointers live.
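When a simulation stores its points as interleaved x, y, z triples in a single buffer, the three coordinate nodes can all point into the same allocation and differ only in their byte offset. The sketch below illustrates that layout on the host. It assumes offsets and strides are expressed in bytes, as they are for Conduit's set_external, and it uses host memory as a stand-in for a device buffer. StridedComponent, DescribeComponent, and ReadValue are hypothetical names, not part of Conduit or Catalyst.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

// Hypothetical description of one coordinate component inside a shared buffer.
struct StridedComponent
{
  const void* base;        // start of the shared allocation
  std::size_t count;       // number of points
  std::size_t offsetBytes; // byte offset of the first value
  std::size_t strideBytes; // byte distance between consecutive values
};

// Describe component c (0 = x, 1 = y, 2 = z) of an interleaved xyz float buffer.
inline StridedComponent DescribeComponent(
  const float* xyz, std::size_t numPoints, std::size_t c)
{
  assert(c < 3);
  return { xyz, numPoints, c * sizeof(float), 3 * sizeof(float) };
}

// Host-side read, valid only when the buffer is host-accessible.
inline float ReadValue(const StridedComponent& comp, std::size_t i)
{
  assert(i < comp.count);
  const unsigned char* bytes = static_cast<const unsigned char*>(comp.base);
  float value;
  std::memcpy(&value, bytes + comp.offsetBytes + i * comp.strideBytes, sizeof(float));
  return value;
}
```

With this layout, x uses offset 0, y uses sizeof(float), and z uses 2 * sizeof(float), while all three share a stride of 3 * sizeof(float). No coordinate data has to be rearranged or copied to describe the mesh.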

In this code snippet, grid is a data structure that stores points and cell connectivity on a CUDA device using unmanaged memory. attribs is another data structure that contains the values of pressure in the grid. This code was adapted from the Catalyst2 C++ examples in the ParaView repository.

    #include <catalyst.hpp>

    // ...

    void CatalystAdaptor::Execute()
    {
      // ...

      // Add channels.
      auto channel = exec_params["catalyst/channels/grid"];

      // Since this example is using Conduit Mesh Blueprint to define the mesh,
      // we set the channel's type to "mesh".
      channel["type"].set("mesh");

      // [New] specify external space for the arrays on the 'grid' channel
      channel["memoryspace"].set("cuda");

      // now create the mesh.
      auto mesh = channel["data"];
      mesh["coordsets/coords/type"].set("explicit");
      mesh["coordsets/coords/values/x"].set_external(
        grid.GetPointsArray(),
        grid.GetNumberOfPoints(),
        /*offset=*/0,
        /*stride=*/3 * sizeof(float));
      // repeat for y, z values

      // now fill in cell connectivity
      mesh["topologies/mesh/type"].set("unstructured");
      mesh["topologies/mesh/coordset"].set("coords");
      mesh["topologies/mesh/elements/shape"].set("hex");
      mesh["topologies/mesh/elements/connectivity"].set_external(
        grid.GetCellPoints(0),
        grid.GetNumberOfCells() * 8);

      // now fill in the fields.
      auto fields = mesh["fields"];
      fields["pressure/association"].set("element");
      fields["pressure/topology"].set("mesh");
      fields["pressure/volume_dependent"].set("false");
      fields["pressure/values"].set_external(
        attribs.GetPressureArray(),
        grid.GetNumberOfCells());

      // ...
    }

Leveraging vtkmDataArray and refining vtkCellArray

In this new setup, vtkmDataArray plays a pivotal role. It wraps around a vtkm::cont::ArrayHandle, which in turn wraps around external pointers. Due to its design, being derived from vtkGenericDataArray, vtkmDataArray integrates well within VTK. It is compatible with vtkPoints and vtkDataSetAttributes, which accept un-typed vtkDataArray instances. However, we encountered a specific challenge with vtkCellArray. Unlike other classes, vtkCellArray had an optimal code path only for vtkTypeInt32Array/vtkTypeInt64Array. The point indices were deep copied for all other kinds of arrays. We optimized and refactored vtkCellArray (vtk/vtk!10769) to efficiently work with vtkDataArray, eliminating the need for deep copies. By resolving this issue, we’ve significantly optimized the process, paving the way for more efficient GPU-resident workflows.
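The difference between the two connectivity paths can be sketched in a few lines. This is not how vtkCellArray is implemented. It is a simplified illustration under stated assumptions: IndexArray, ExternalIndexArray, DeepCopyConnectivity, and SharedConnectivity are hypothetical names, host memory stands in for a device buffer, and all cells are assumed to have the same number of points.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical type-erased index array, standing in for an untyped data array.
struct IndexArray
{
  virtual ~IndexArray() = default;
  virtual std::size_t Size() const = 0;
  virtual std::int64_t Get(std::size_t i) const = 0;
};

// Adapter over caller-owned memory of any integer type; it does not copy.
template <typename T>
struct ExternalIndexArray final : IndexArray
{
  ExternalIndexArray(const T* data, std::size_t n) : Data(data), N(n) {}
  std::size_t Size() const override { return N; }
  std::int64_t Get(std::size_t i) const override
  {
    return static_cast<std::int64_t>(Data[i]);
  }
  const T* Data;
  std::size_t N;
};

// Copying path: convert any non-native array into freshly allocated storage.
inline std::vector<std::int64_t> DeepCopyConnectivity(const IndexArray& src)
{
  std::vector<std::int64_t> out(src.Size());
  for (std::size_t i = 0; i < out.size(); ++i)
    out[i] = src.Get(i);
  return out;
}

// Zero-copy path: keep a reference to the array and read through it.
class SharedConnectivity
{
public:
  SharedConnectivity(std::shared_ptr<const IndexArray> ids, std::size_t cellSize)
    : Ids(std::move(ids)), CellSize(cellSize)
  {
    assert(Ids && CellSize > 0);
  }
  std::size_t NumberOfCells() const { return Ids->Size() / CellSize; }
  std::int64_t PointId(std::size_t cell, std::size_t local) const
  {
    return Ids->Get(cell * CellSize + local);
  }

private:
  std::shared_ptr<const IndexArray> Ids;
  std::size_t CellSize;
};
```

The copying path doubles the memory footprint of the connectivity and, for a device buffer, would force a transfer. The zero-copy path only stores a reference, so the simulation's buffer remains the single source of the point indices.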

Finally, the ParaView Catalyst implementation was updated to accommodate the new memory space identifier and to configure vtkConduitSource for external memory spaces. Merge request paraview/paraview!6647 would enable GPU zero-copy in ParaView-Catalyst2.

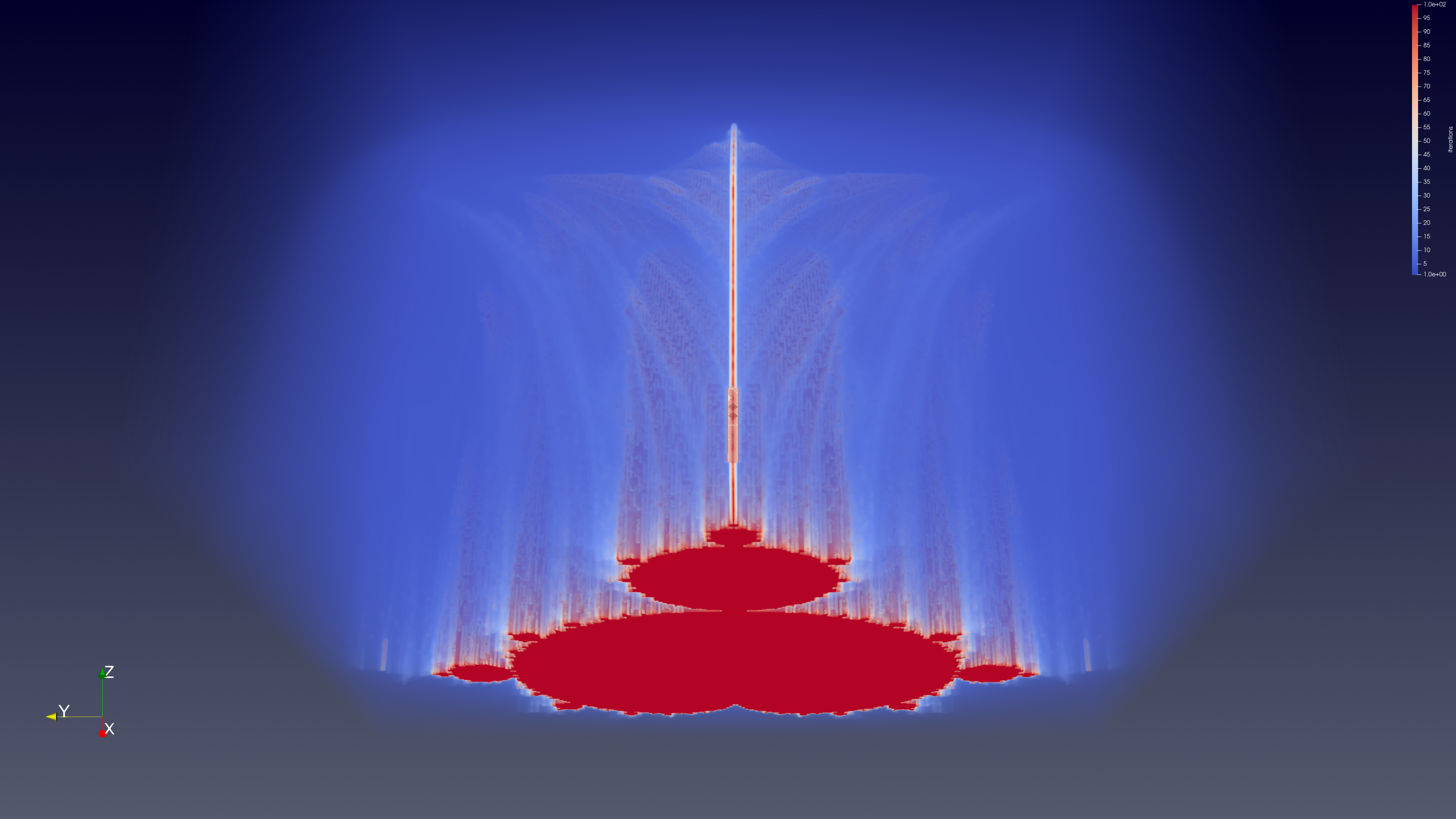

You can find an example of a GPU-resident Catalyst2 pipeline using CUDA in the MandelbrotCatalyst2 repository.

However, the cell connectivity of mixed-cell unstructured grids is not yet zero-copied. We plan to support this in a future enhancement.

Future work

Because this is a proof of concept, a substantial portion of the work is still under review. We intend to merge these enhancements into the master branches of VTK and ParaView in the coming months. Feel free to contact us if you would like to collaborate or learn more about this work.

Acknowledgements

This research was supported by the Exascale Computing Project (17-SC-20-SC), a joint project of the U.S. Department of Energy’s Office of Science and National Nuclear Security Administration, responsible for delivering a capable exascale ecosystem, including software, applications, and hardware technology, to support the nation’s exascale computing imperative.
