DIVE: Revolutionize Your AI Training with Advanced Video and Image Annotation

High-quality labeled data powers every successful AI model, yet preparing large-scale video and image datasets remains one of the most costly and time-consuming stages in the machine learning pipeline.

Kitware’s open source platform, DIVE, changes that. Built for speed, scalability, and accuracy, DIVE lets teams label and visualize imagery, then use those annotations to train and refine AI models, all within a single, versatile environment. DIVE reflects our commitment to making advanced AI tools open and accessible, helping organizations turn raw data into insight with far less effort and cost.

How DIVE Works

DIVE is a powerful open source platform for annotating video and image data, training AI models, and visualizing results in one integrated interface. It combines intuitive, interactive editing tools with automated AI-powered annotation to streamline data preparation and model refinement. Whether running on a desktop, a secure server, or a cloud cluster, it helps teams accelerate every stage of computer vision research and development:

- Annotate. Combine manual and AI-assisted labeling for video and imagery using bounding boxes, polygons, keypoints, and tracks. DIVE supports frame interpolation, multiple annotation formats, and collaborative, versioned datasets for reproducible workflows.

- Train. Launch and monitor model training jobs locally or in the cloud using frameworks like VIAME and PyTorch. DIVE supports both classical and deep-learning models, enabling rapid iteration on detectors, trackers, and classifiers without switching environments.

- Refine. Evaluate and compare model performance with built-in analytics for accuracy, recall, and confusion matrices. Retrain or reapply models to new data, export trained weights, or deploy models back into DIVE for assisted annotation and validation.
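Frame interpolation lets an annotator draw a box on a few keyframes of a track and have the frames in between filled in automatically. The Python sketch below illustrates the general idea using plain linear interpolation of box corners. It is a minimal illustration under that assumption, not DIVE's actual implementation, and the `Box` and `interpolate_track` names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Hypothetical axis-aligned bounding box in pixel coordinates."""
    x1: float
    y1: float
    x2: float
    y2: float


def interpolate_track(keyframes: dict[int, Box]) -> dict[int, Box]:
    """Fill every frame between consecutive keyframes by linear interpolation.

    keyframes maps a frame number to a manually drawn box. The result
    covers every frame from the first keyframe to the last one.
    (Assumption: simple linear motion between keyframes.)
    """
    frames = sorted(keyframes)
    filled: dict[int, Box] = {}
    for start, end in zip(frames, frames[1:]):
        a, b = keyframes[start], keyframes[end]
        span = end - start
        for f in range(start, end):
            t = (f - start) / span  # 0.0 at the start keyframe, approaching 1.0 at the end
            filled[f] = Box(
                a.x1 + t * (b.x1 - a.x1),
                a.y1 + t * (b.y1 - a.y1),
                a.x2 + t * (b.x2 - a.x2),
                a.y2 + t * (b.y2 - a.y2),
            )
    if frames:
        # The final keyframe is kept exactly as the annotator drew it.
        filled[frames[-1]] = keyframes[frames[-1]]
    return filled
```

For example, `interpolate_track({0: Box(0, 0, 10, 10), 4: Box(40, 20, 50, 30)})` yields boxes for frames 0 through 4, with frame 2 at `Box(20, 10, 30, 20)`. Real tools typically let annotators correct any interpolated frame, turning it into a new keyframe.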

Designed as a flexible framework, DIVE integrates with multiple AI backends through its open architecture and supports containerized deployments for reproducibility. It can run in air-gapped environments or scale across multi-node clusters, supporting high-throughput labeling and training for everything from quick experiments to production-grade pipelines.

Real-World Applications

DIVE is used across disciplines to accelerate research and operations. Here are a few examples of work we have done with DIVE.

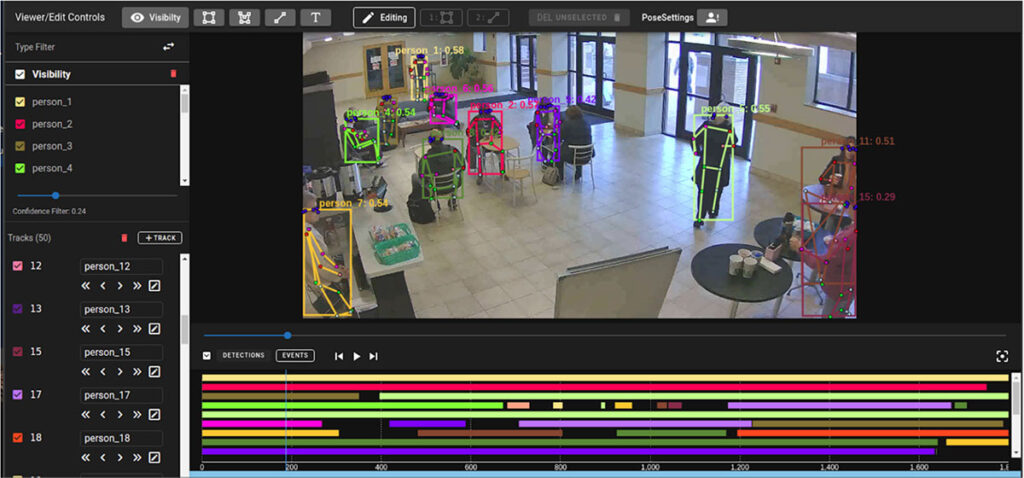

Activity Detection. The IARPA DIVA and BRIAR programs, along with the MEVA project, used DIVE to annotate people and activities across multiple video datasets, helping researchers detect, track, and re-identify individuals for security research and advanced forensic analysis.

Acknowledgement: This research is based upon work supported in part by the Office of the Director of National Intelligence (ODNI), Intelligence Advanced Research Projects Activity (IARPA), via [2022-21102100003]. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies, either expressed or implied, of ODNI, IARPA, or the U.S. Government. The U.S. Government is authorized to reproduce and distribute reprints for governmental purposes notwithstanding any copyright annotation therein.

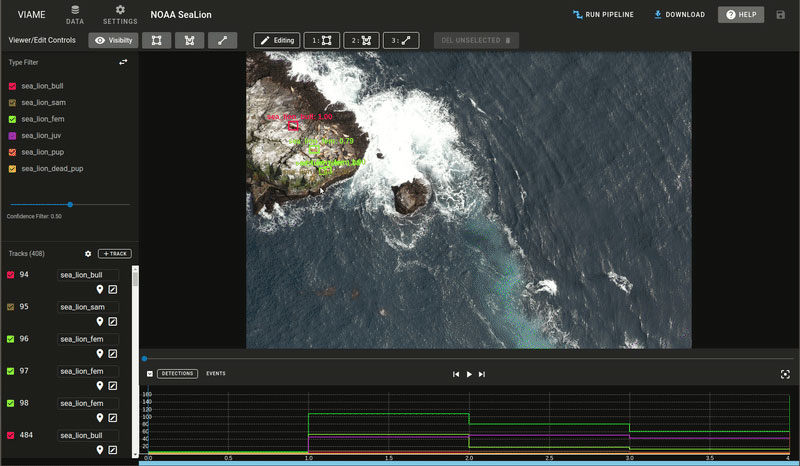

Marine Science. Scientists at NOAA use DIVE, integrated with Kitware’s open source VIAME model backend, for fish species identification and population monitoring from drone and underwater camera video. This functionality, available to the public through VIAME Web, helps researchers automate species detection, identification, and tracking to support marine conservation and ecosystem management.

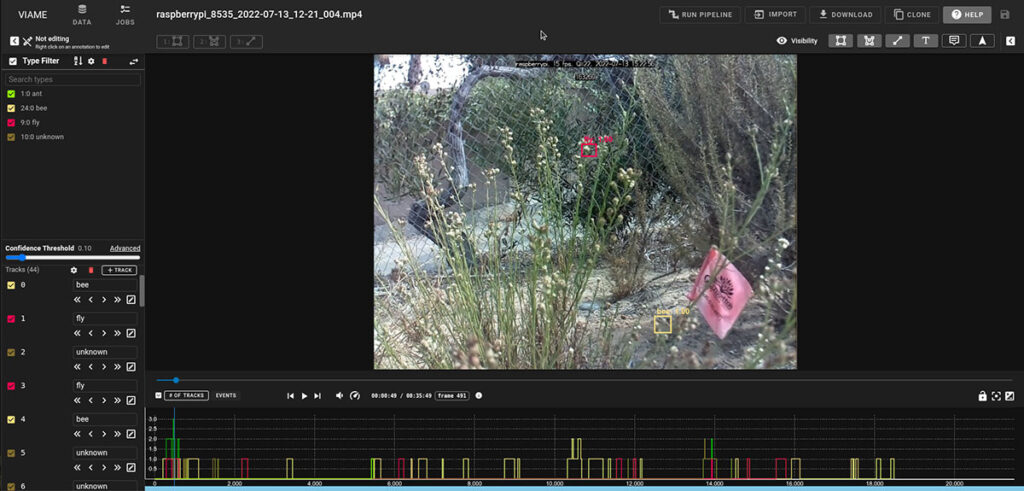

Ecology. Scientists and educators use DIVE to annotate camera trap video to detect bees and other pollinators visiting flowers and other plants in public gardens. Pollinators are crucial to the world’s food supply, and studying their behavior and activity helps researchers better understand and protect these essential ecosystems.

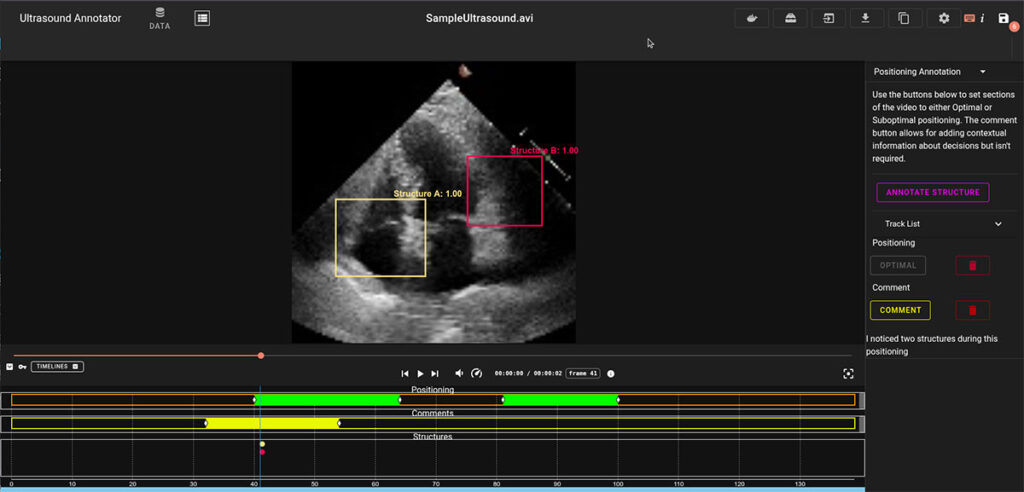

Medical Imaging. Biomedical scientists use DIVE-DSA, Kitware’s customization of DIVE, to annotate endoscopy and microscopy video and advance machine learning in clinical and research settings. As part of the Advanced Research Projects Agency for Health (ARPA-H) Saving Baby Hearts project, researchers also use DIVE-DSA to annotate neonatal ultrasound videos that are used to train an AI-assisted device for optimizing ultrasound positioning. This technology aims to improve diagnostic accuracy and reduce infant mortality through early detection of congenital heart conditions.

Acknowledgement: This project has been funded in whole or in part with Federal funds from the Advanced Research Projects Agency for Health (ARPA-H), National Institutes of Health, Department of Health and Human Services, under Contract No. 75N91024C00020.

Social Science. The University of Maryland applied DIVE for work on a DARPA-funded program to study how humans and machines translate real-time conversations. Researchers labeled emotion, intensity, and cultural norm violations to test and evaluate cross-cultural understanding and machine translation algorithms.

Case Study: Marine Ecosystem Monitoring with Stereo Cameras

Marine scientists on the Game of Trawls project set out to make fishing more sustainable by identifying species in real time during trawling. But their data wasn’t simple: stereo camera footage from moving vessels, shifting light conditions, and schools of fish crowding the frame. They needed a fast, accurate way to analyze what was happening underwater, something existing tools couldn’t handle.

Kitware partnered with Ifremer to extend DIVE to process stereo video, add tools for 3D measurement and tracking, and integrate deep-learning models for fish detection and classification using Mask R-CNN, alongside Structural RNNs for multi-frame tracking and motion analysis. The result is a powerful video analysis system that recognizes species and tracks their movement in real time – reducing bycatch by triggering nets to open or close based on what’s detected.

Get Started with DIVE

DIVE is open source and free to use. Try it directly in your browser using the Web Demo, install DIVE Desktop for local or offline work, or explore the User Guide. The complete source code is on GitHub, ready for you to build, customize, and extend, on your own or with Docker Compose.

DIVE can be used standalone or integrated into AI training loops and other annotation-based workflows. Its flexible architecture supports several approaches to model training and refinement:

- Web and cloud-based model training: Launch and manage training jobs on local servers or cloud platforms.

- Iterative improvement: Run training loops across multi-domain datasets to continuously improve performance.

- Model management: Export, import, and refine models within DIVE or alongside external AI frameworks.

In short, DIVE makes it easy to prepare, train, and deploy models efficiently, wherever your infrastructure lives.

Take DIVE Further with Kitware

DIVE is a powerful tool, and Kitware can help you get the most out of it. We’re the team behind DIVE, trusted by research institutions, government agencies, and innovators to turn open source tools into practical solutions that advance science. Kitware can:

- Train your team to master DIVE’s features and build in-house expertise.

- Customize DIVE for your data and annotation workflows to accelerate your scientific goals.

- Deploy DIVE securely on your infrastructure or preferred cloud provider.

- Scale your workflows from quick prototypes to production-ready systems.

Contact us for a free consultation and learn how we can help you achieve faster, smarter results with DIVE.