Enhance your ParaView and VTK pipelines with Artificial Neural Networks

VTK has recently introduced support for ONNX Runtime, opening new opportunities for integrating machine learning inference into scientific visualization workflows. This feature is also available in ParaView through an official plugin.

What are ONNX and ONNX Runtime?

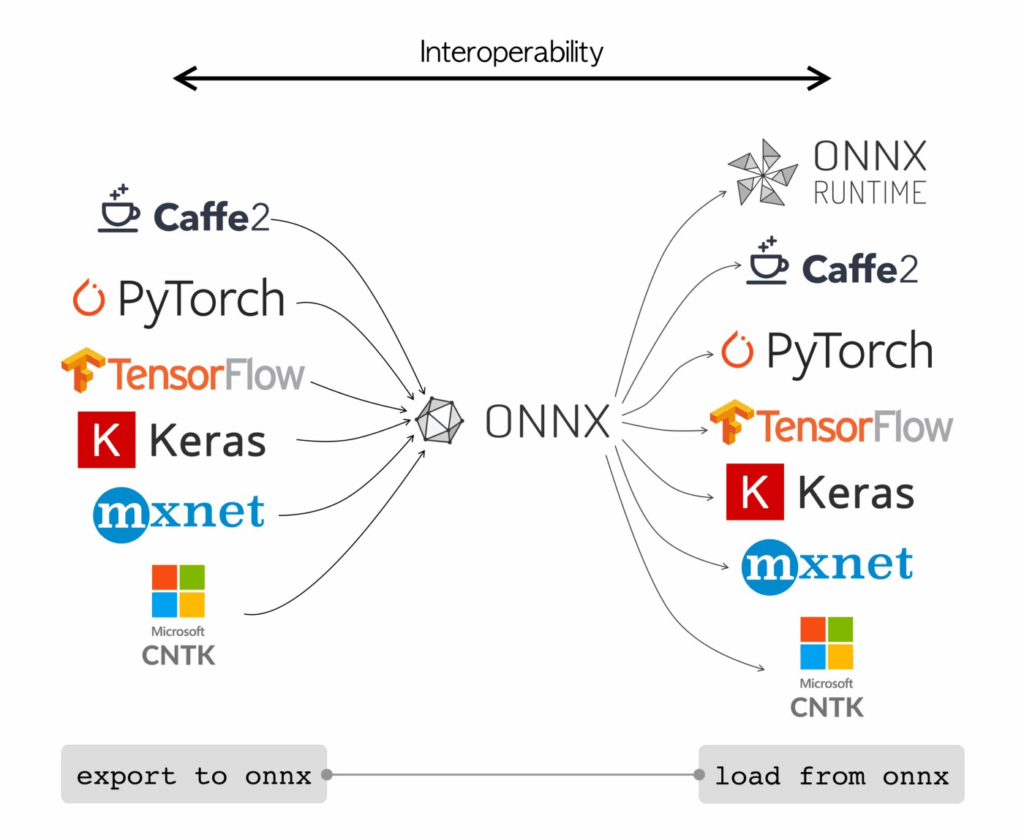

ONNX (Open Neural Network Exchange) is an open file format designed to represent machine learning models in a standardized way. It defines a common set of operators and ensures interoperability across frameworks. Most major machine learning libraries, such as PyTorch, TensorFlow, and scikit-learn, support exporting and importing ONNX models (see Fig. 1). This means a model trained in one framework can be used elsewhere without compatibility concerns.

ONNX Runtime is an engine for executing ONNX models. It provides efficient inference across a wide range of hardware and operating systems. Although it is built as a C++ library, it also offers bindings for Python, C#, JavaScript, and more.

In short, ONNX is a standard for describing models, and ONNX Runtime is a cross-platform inference engine that executes them.

New VTK module

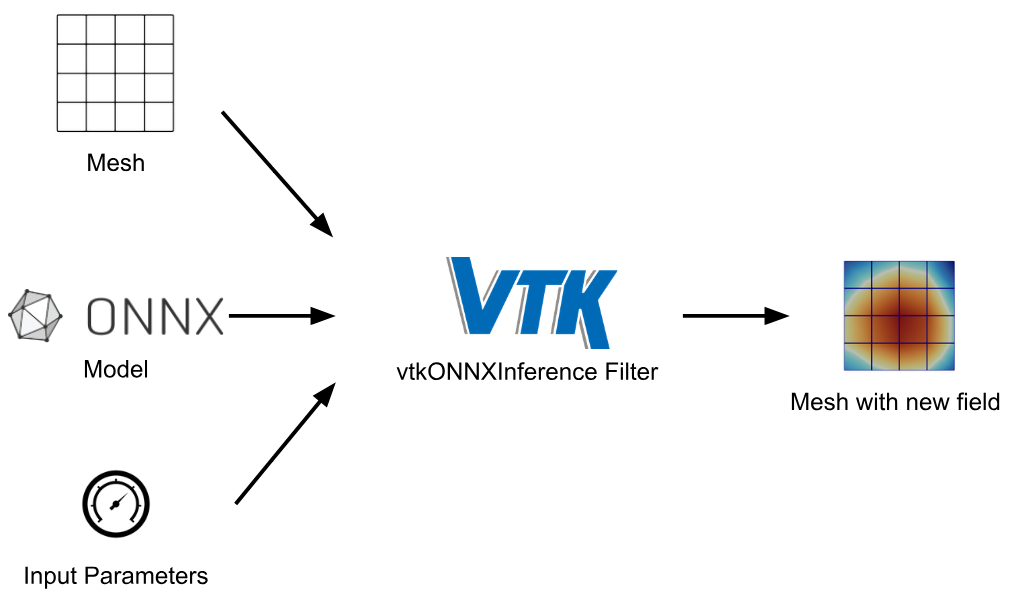

Back to VTK: a new module has been introduced that makes it possible to run ONNX models directly within a VTK pipeline. Let’s clarify what its filter can and cannot do. It can infer cell data or point data of any dimension on the DataSet given as input. The filter is oblivious to the shape and geometry of this input, which acts only as a container for the inference output. The network inputs are set as a vector of parameters. One of them can optionally be tied to time, which lets you leverage all the time-related features of VTK and ParaView.

Here is a code snippet showing how to use the VTK Python API on a simple example:

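```python
# Assumption: the filter is exposed by the vtkFiltersONNX module,
# in a VTK build that has ONNX Runtime support enabled.
from vtkmodules.vtkFiltersONNX import vtkONNXInference

dataset = …  # your input data set; it only acts as a container for the output
model_path = …  # file path to your .onnx file

model = vtkONNXInference()
model.SetInputData(dataset)
model.SetModelFile(model_path)

# The network inputs are passed as a vector of parameters (three here).
model.SetNumberOfInputParameters(3)
model.SetInputParameter(0, 210000)
model.SetInputParameter(1, 0.25)
model.SetInputParameter(2, 13000)

# Run the inference; the predicted array is attached to the filter output.
model.Update()
```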

New ParaView plugin

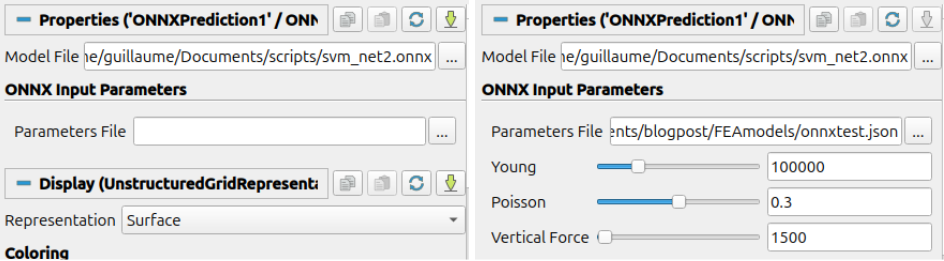

To make this feature accessible from ParaView, a new official plugin has been added, simply named ONNXPlugin. This plugin also comes with an interesting new feature: dynamic Qt user interfaces. When a JSON file is loaded into the filter, the plugin automatically generates the corresponding UI elements. This means the interface adapts to the requirements of the network, creating the appropriate number of inputs with their valid ranges.

Practical examples in ParaView

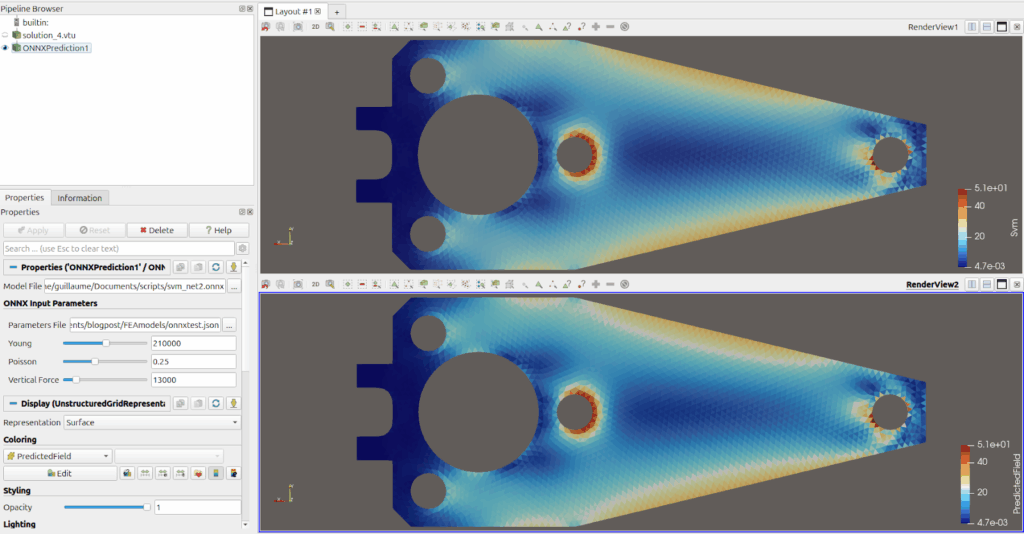

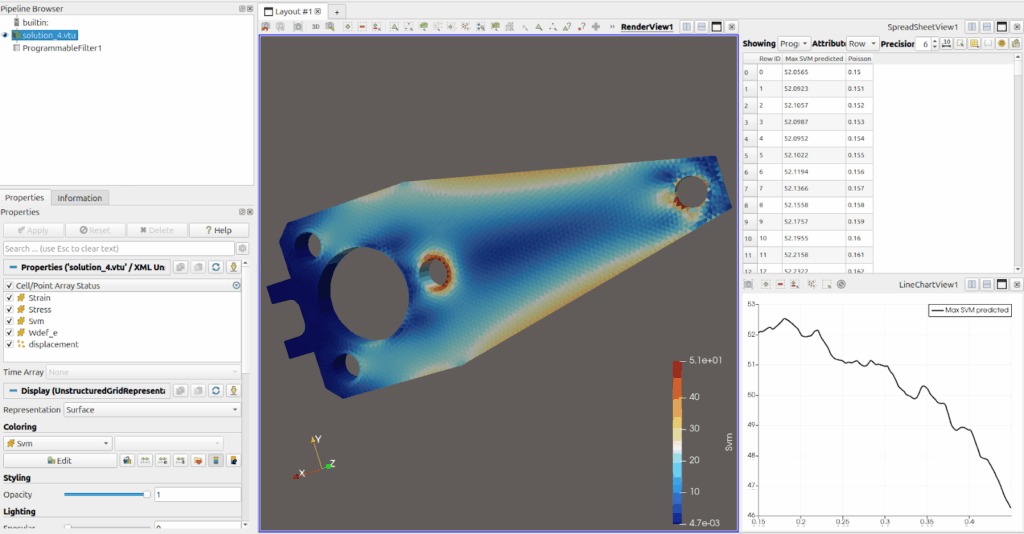

To illustrate the feature, we trained a simple multilayer perceptron (MLP) to reproduce the results of a simulation made with EasyFEA (https://github.com/matnoel/EasyFEA). To be precise, the network is trained to predict the von Mises stress (SVM) for each cell of a mechanical part.

A model trained to reproduce the results of an existing simulation is commonly referred to as a surrogate model. Such models only approximate the outcome but are much faster to compute. In this particular example, the simulation required about 10 s on our local machines, whereas the ONNX filter produced the result in only 5 ms, a speed-up of about 2000x.

Such a performance improvement opens the way to new possibilities. For example, one can study the evolution of the maximum predicted SVM as a function of a varying parameter (in our example, the Poisson ratio of the object). While entirely possible with traditional simulations, the process quickly becomes tedious and impractical, especially in experimental settings where parameters must be modified and results recomputed many times. Fig. 5 illustrates this idea with 300 function evaluations. Computations were completed in just 1.5 s, compared to 50 min with the standard simulation.
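As a rough sketch of such a parameter sweep, the snippet below evaluates a surrogate at 300 Poisson ratios and checks the timings quoted above. The function `predict_max_svm_for_ratio` is a purely hypothetical stand-in with an arbitrary formula; in practice it would wrap one run of the ONNX filter and return the maximum of the predicted SVM array. The sweep range is also an assumption.

```python
def predict_max_svm_for_ratio(poisson_ratio: float) -> float:
    """Hypothetical stand-in for one surrogate evaluation.

    The formula is arbitrary and only keeps the example runnable;
    it is not the trained network.
    """
    return 100.0 * (1.0 + 0.5 * poisson_ratio ** 2)


def sweep(evaluate, start: float, stop: float, count: int):
    """Evaluate `evaluate` at `count` evenly spaced values in [start, stop]."""
    step = (stop - start) / (count - 1)
    values = [start + i * step for i in range(count)]
    return [(value, evaluate(value)) for value in values]


N_EVALUATIONS = 300  # number of function evaluations quoted in the text

# Assumed sweep range for the Poisson ratio (not taken from the article).
curve = sweep(predict_max_svm_for_ratio, 0.0, 0.49, N_EVALUATIONS)

# Cost estimate from the per-evaluation timings quoted in the text.
SIMULATION_SECONDS = 10.0   # one full simulation
INFERENCE_SECONDS = 0.005   # one ONNX inference

speed_up = SIMULATION_SECONDS / INFERENCE_SECONDS
simulation_total_minutes = N_EVALUATIONS * SIMULATION_SECONDS / 60.0
inference_total_seconds = N_EVALUATIONS * INFERENCE_SECONDS

print(f"speed-up per evaluation: about {speed_up:.0f}x")
print(f"simulation sweep: about {simulation_total_minutes:.0f} min")
print(f"surrogate sweep: about {inference_total_seconds:.1f} s")
```

The resulting `curve` is a list of (Poisson ratio, maximum SVM) pairs that can be plotted directly, and the three estimates match the figures given in the text (2000x, 50 min, 1.5 s).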

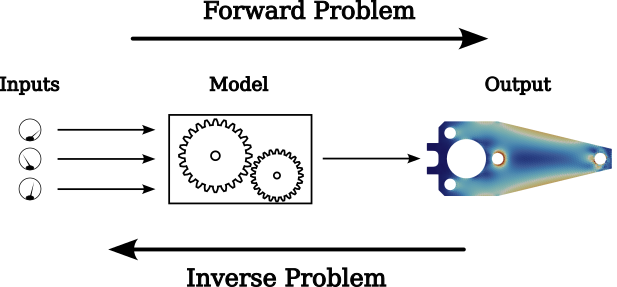

More interestingly, this also makes it practical to solve inverse problems. In the forward problem, we know parameters such as the object material and the applied force, and we compute the resulting SVM. In the inverse problem, we know something about the SVM and we want to know which input parameters would produce such an output.

However, solving inverse problems typically requires a large number of function evaluations (or even gradient evaluations, but that’s a different story). As a demonstration, we used the model to determine the maximum force that could be applied to the object while keeping the SVM below a specified threshold. Here, the whole computation completed in just a few seconds.
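The article does not say which solver was used for this demonstration. As one possible illustration, the sketch below uses plain bisection, which assumes that the maximum SVM increases monotonically with the applied force. The function `predict_max_svm_for_force`, its linear formula, the threshold, and the search bounds are all hypothetical placeholders for a call to the surrogate model.

```python
def predict_max_svm_for_force(force: float) -> float:
    """Hypothetical stand-in for one surrogate evaluation.

    Assumed to increase monotonically with the force; the linear
    formula is arbitrary and is not the trained network.
    """
    return 0.02 * force


def max_admissible_force(evaluate, threshold, low, high, tolerance=1e-6):
    """Largest force in [low, high] whose predicted SVM stays <= threshold.

    Bisection: every iteration costs one evaluation and halves the interval.
    """
    if evaluate(low) > threshold:
        raise ValueError("even the lower bound exceeds the threshold")
    if evaluate(high) <= threshold:
        return high  # the whole interval is admissible
    while high - low > tolerance:
        mid = 0.5 * (low + high)
        if evaluate(mid) <= threshold:
            low = mid   # mid is admissible: search above it
        else:
            high = mid  # mid violates the threshold: search below it
    return low


# Placeholder threshold and bounds, chosen only for the example.
force = max_admissible_force(
    predict_max_svm_for_force, threshold=250.0, low=0.0, high=20000.0
)
print(f"maximum admissible force: {force:.3f}")
```

Each iteration needs a fresh evaluation of the model, so the cost of one evaluation directly drives the total solve time. With several unknown parameters, or a non-monotone response, a more general optimizer would be needed and the number of evaluations grows accordingly.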

Conclusion

ONNX Runtime is a high-performance inference engine for machine learning models. With the new VTK module, you can now bring these models directly into your VTK and ParaView pipelines.

While this first release does not yet expose every capability of machine learning models (field data cannot be used as input, no new geometry can be generated, …), it marks a first step forward. Now is the perfect time to experiment with integrating your own ONNX models into VTK or ParaView.

The feature will be available in VTK 9.6.0 and ParaView 6.1, and is already available in the ParaView master branch. Please note that it is not available in the binary release of ParaView and requires compiling ParaView.

Finally, get in touch with Kitware to get guidance from our experts and explore new features for your post-processing.

Acknowledgments

This work was partially funded by EDF and partially supported by an internal effort of the Kitware Europe team.