The Geospatial Intelligence Symposium (GEOINT)

May 21-24, 2023 at America’s Center Convention Complex in St. Louis, Missouri

The GEOINT Symposium is the largest gathering of geospatial intelligence professionals in the U.S., with attendees from government, industry, and academia. Kitware is a regular supporter of GEOINT and we are proud to be a Silver-level sponsor and exhibitor in 2023. As highly involved members of the geospatial intelligence community, our AI, machine learning, and computer vision experts work closely with the intelligence community and government defense agencies. We develop and customize our open source software to meet the requirements of geospatial intelligence R&D projects.

We look forward to meeting with attendees and exhibitors to discuss our computer vision expertise and open source tools. You can visit us at booth 408 or schedule a meeting.

Software R&D expertise in AI and computer vision

Events Schedule

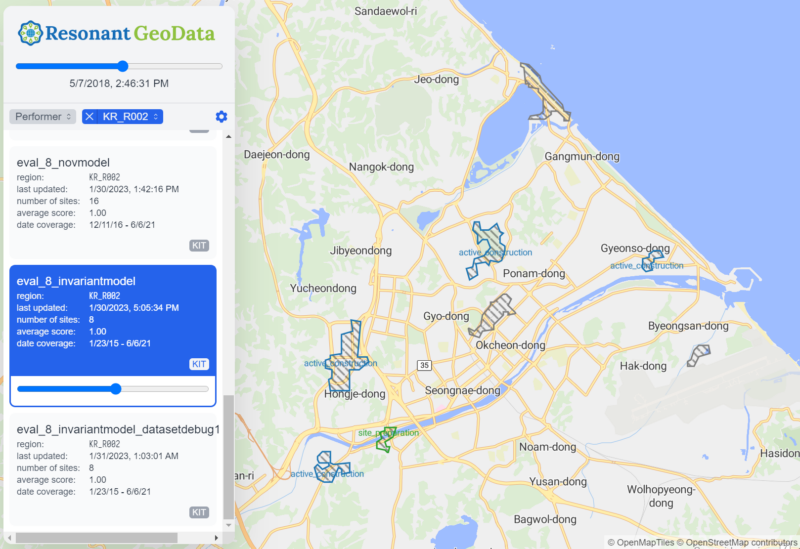

Predicted construction sites in our open source web visualization tool.

The availability of satellite imagery has grown enormously in recent years, and the data volume is now so large that locating objects or activities of interest at scale is difficult. Deep learning can address this problem: deep neural networks can be trained to analyze the imagery and perform broad area searches for specific spatial, temporal, and spectral signatures. Such networks are typically trained on a collection of annotated examples. However, the logistics of properly training such a network can be tricky, especially for geospatial imagery that comes in a mix of spatial resolutions, spectral bands, revisit rates, and so on. To make it easier to train and deploy geospatial search models, Kitware’s team, together with its subcontractors, developed an open source software framework called WATCH (Wide Area Terrestrial Change Hypercube). Developed as part of the ongoing IARPA SMART program, WATCH aims to detect and characterize heavy construction sites across time using government and commercial imagery (Landsat, Sentinel-2, Maxar, and Planet). However, the software can be applied to other problems as well. In this training session, we will walk participants through an example of using WATCH to train a broad area search model. We will also explain some of the theory and best practices for training such models and summarize some of the latest research advances in the field.
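Because a single satellite scene is far larger than what a network can ingest at once, broad area search is commonly run chip by chip, with overlapping predictions stitched back into a scene-wide heatmap. The sketch below illustrates that general idea only. It assumes a model that maps an image chip to a per-pixel score map of the same height and width; the function names, chip size, and stride are hypothetical and are not WATCH's API.

```python
import numpy as np

def tile_starts(length, chip, stride):
    """Start offsets so that chips of size `chip` fully cover [0, length)."""
    if length <= chip:
        return [0]
    starts = list(range(0, length - chip + 1, stride))
    if starts[-1] != length - chip:
        starts.append(length - chip)  # make sure the last chip touches the far edge
    return starts

def sliding_window_heatmap(image, predict, chip=64, stride=48):
    """Run `predict` (chip -> per-pixel scores) over a large image and average overlaps.

    `image` is H x W x bands; `predict` is a hypothetical stand-in for a trained model.
    """
    h, w = image.shape[:2]
    total = np.zeros((h, w), dtype=np.float64)
    counts = np.zeros((h, w), dtype=np.float64)
    for y in tile_starts(h, chip, stride):
        for x in tile_starts(w, chip, stride):
            window = image[y:y + chip, x:x + chip]
            total[y:y + chip, x:x + chip] += predict(window)
            counts[y:y + chip, x:x + chip] += 1
    return total / counts  # every pixel is covered at least once, so no division by zero
```

A real system would also handle the time dimension and mixed resolutions that the paragraph above mentions; averaging overlaps is just one simple way to suppress seams at chip borders.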

Remote sensing methods often use neural networks pretrained on large ground-level imagery datasets (e.g., ImageNet). Unfortunately, features learned from ground-level imagery are less discriminative on remote sensing images, and manually labeling huge datasets for remote sensing applications is prohibitively costly. In this training session, we will examine self-supervised techniques for remote sensing. Self-supervision allows automated remote sensing approaches to leverage large amounts of unlabeled data and improve accuracy across many tasks. Self-supervised techniques require no labels, yet they can pre-train or fine-tune models to create image embeddings that perform similarly to fully labeled ImageNet embeddings. They work particularly well for problems with few or no labels. Our team has developed and used these methods on the DARPA Learning with Less Labeling (LwLL), DARPA Science of Artificial Intelligence and Learning for Open-world Novelty (SAIL-ON), and AFRL’s VIGILANT and Bomb Damage Assessment programs. The state-of-the-art techniques we will cover include contrastive learning (SimCLR and SelfSim), reconstructing masked images with Masked Autoencoders (MAE), using ground sample distance (GSD) for super-resolution masked reconstruction (ScaleMAE), and object detection-focused techniques (ReSim and CutLER). We will focus on effectiveness in image classification and object detection, and we will touch on other applications such as perceptually lossless compression. This tutorial will introduce GEOINT attendees to the latest research on self-supervised learning in remote sensing (EBK T14. Neural Networks/Artificial Intelligence) so they can apply these techniques to improve automated image analysis results while reducing the need for expensive labeling in their projects.
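To make "contrastive learning" concrete, the sketch below implements the NT-Xent (normalized temperature-scaled cross-entropy) loss that SimCLR optimizes: two augmented views of the same image should have similar embeddings, while views of different images in the batch act as negatives. It is a NumPy illustration for readability, not a training-ready implementation; the function name and the default temperature are assumptions.

```python
import numpy as np

def nt_xent_loss(z1, z2, temperature=0.5):
    """NT-Xent loss as used by SimCLR.

    z1[i] and z2[i] are embeddings (N x D) of two augmented views of image i.
    """
    z = np.concatenate([z1, z2], axis=0)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)   # unit vectors -> dot product is cosine similarity
    sim = z @ z.T / temperature
    n = z1.shape[0]
    np.fill_diagonal(sim, -np.inf)                      # a view is never compared with itself
    # Row i's positive partner is the other view of the same image.
    pos = np.concatenate([np.arange(n, 2 * n), np.arange(0, n)])
    # Numerically stable log-softmax over each row.
    row_max = sim.max(axis=1, keepdims=True)
    log_denom = row_max[:, 0] + np.log(np.exp(sim - row_max).sum(axis=1))
    log_prob_pos = sim[np.arange(2 * n), pos] - log_denom
    return -log_prob_pos.mean()
```

In practice the embeddings come from an encoder plus projection head applied to augmented remote sensing chips, and the loss is minimized with an autodiff framework; lower loss means matching views are pulled together relative to the rest of the batch.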

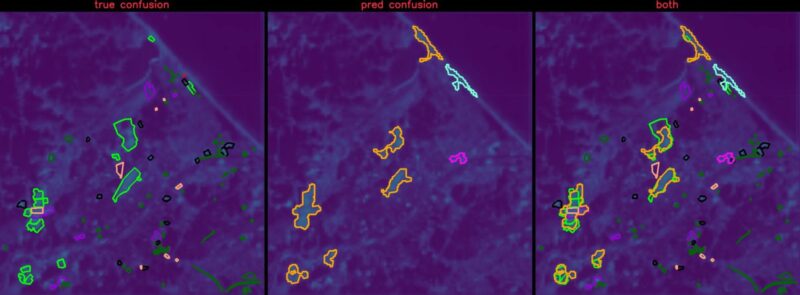

Ground truth construction sites vs. predicted sites overlaid on an AI heatmap prediction.

The IARPA SMART program aims to research new methods and build a system that searches vast catalogs of satellite images from various sources to find and characterize relevant change events as they evolve over time. Kitware leads one of the performer teams on this program, addressing broad area search for man-made change with an initial focus on construction sites. This is a “changing needle in a changing haystack” search effort. Our system, called WATCH, will categorize detected sites into stages of construction with defined geospatial and temporal bounds and predict end dates for activities that are considered in progress. WATCH has been deployed on AWS infrastructure, and the software has been released as open source for free community use. This talk will summarize our progress during the first half of the eighteen-month Phase 2 program and share improvements since our early results presented at GEOINT 2022. Notably, our detection scores on validation regions have improved dramatically since last year. We will also highlight our research results, system integration and deployment progress, and the open source tools that are now available for community use.
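Turning a heatmap into discrete sites with spatial and temporal bounds can be illustrated with two simple steps: group above-threshold pixels into connected blobs, then find the first and last dates on which a site's score stays above threshold. The sketch below is a deliberately simplified, hypothetical version of that idea (the function names, threshold, and minimum blob size are assumptions); WATCH's actual site extraction and phase classification are more involved.

```python
import numpy as np
from collections import deque

def heatmap_to_sites(heatmap, threshold=0.5, min_pixels=4):
    """Return (row_min, col_min, row_max, col_max, mean_score) for each 4-connected blob."""
    mask = heatmap >= threshold
    seen = np.zeros_like(mask, dtype=bool)
    h, w = mask.shape
    sites = []
    for r in range(h):
        for c in range(w):
            if not mask[r, c] or seen[r, c]:
                continue
            # Breadth-first flood fill collects one connected blob.
            queue = deque([(r, c)])
            seen[r, c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            if len(pixels) >= min_pixels:  # discard tiny, likely spurious blobs
                ys, xs = zip(*pixels)
                score = float(heatmap[list(ys), list(xs)].mean())
                sites.append((min(ys), min(xs), max(ys), max(xs), score))
    return sites

def temporal_bounds(scores, dates, threshold=0.5):
    """First and last date on which a site's score meets the threshold (None if never)."""
    active = [d for s, d in zip(scores, dates) if s >= threshold]
    return (active[0], active[-1]) if active else None
```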

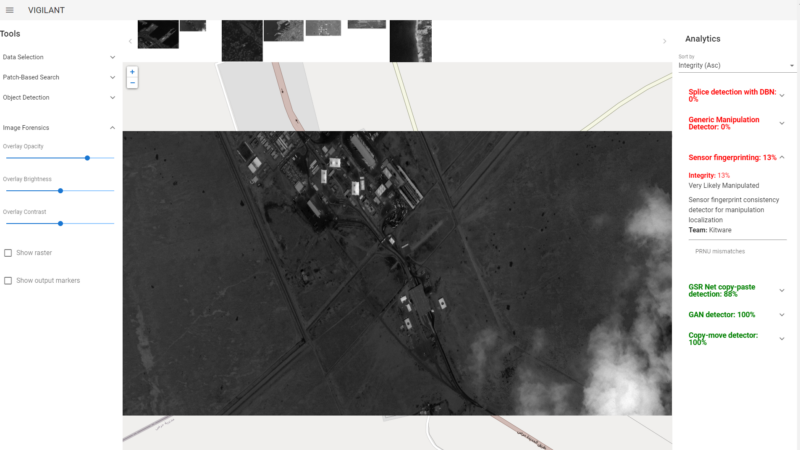

Three forensic analytics correctly identified this image as “Very Likely Manipulated”: the clouds on the bottom right were added digitally. (Background image © Skybox Imaging, Inc. All Rights Reserved.)

To gather meaningful information from geospatial imagery, GEOINT analysts need to trust its authenticity. This is especially important today, as we face an elevated threat of disinformation, a rapidly growing number of commercial satellite providers, and quickly evolving AI techniques for image manipulation and generation. Modern forensics technology that specializes in overhead imagery and can detect and localize manipulations gives analysts the tools they need to establish that trust. This presentation will highlight Kitware’s collaborative effort on the DARPA MediFor and SemaFor programs. We provide several image forensic analytics from five academic and industry partners through VIGILANT, our satellite imagery exploitation tool (developed by Kitware with funding from AFRL and NGA), which is available to the government with unlimited rights. The great variability in the pedigree of overhead images (panchromatic, multispectral, SAR, metadata, etc.) calls for a versatile suite of forensic tools that leverage domain knowledge to the fullest extent. During this talk, we will also discuss the latest developments from the DARPA SemaFor program in image manipulation localization and in characterizing image-text inconsistencies.
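One classic, generic forensic cue is local noise inconsistency: a region pasted in from another source (such as digitally added clouds) often carries different sensor noise than its surroundings. The sketch below estimates the noise level of a high-pass residual in each block and flags blocks that deviate from the image-wide median. It is an illustrative stand-in under simple assumptions (single-band image, 3x3 mean filter, fixed block size, hypothetical function names) and is not one of the analytics delivered through VIGILANT.

```python
import numpy as np

def box_blur3(img):
    """3x3 mean filter with edge padding."""
    p = np.pad(img, 1, mode="edge")
    h, w = img.shape
    return sum(p[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)) / 9.0

def noise_inconsistency_map(img, block=16):
    """Robust z-score of each block's residual noise level relative to the whole image.

    High scores mark blocks whose noise differs strongly from the rest: candidate manipulations.
    """
    img = img.astype(np.float64)
    residual = img - box_blur3(img)           # high-pass residual dominated by sensor noise
    bh, bw = img.shape[0] // block, img.shape[1] // block
    levels = np.empty((bh, bw))
    for i in range(bh):
        for j in range(bw):
            levels[i, j] = residual[i * block:(i + 1) * block, j * block:(j + 1) * block].std()
    med = np.median(levels)
    mad = np.median(np.abs(levels - med)) + 1e-9
    return np.abs(levels - med) / (1.4826 * mad)  # 1.4826 scales MAD to a Gaussian std
```

Real overhead imagery requires far more care (compression, resampling, and varied sensors all change noise statistics), which is why the paragraph above stresses a versatile suite of tools rather than any single cue.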

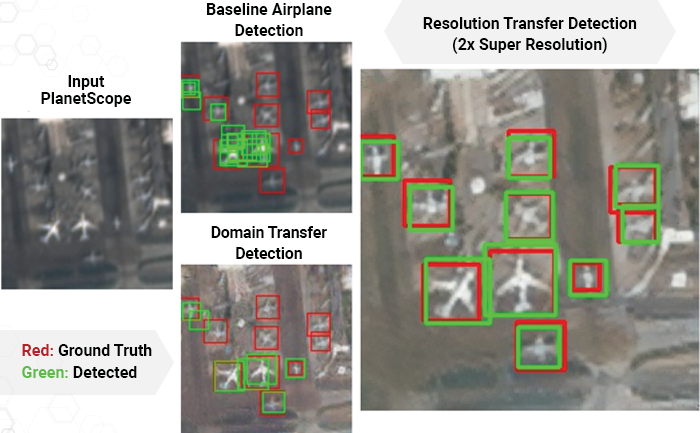

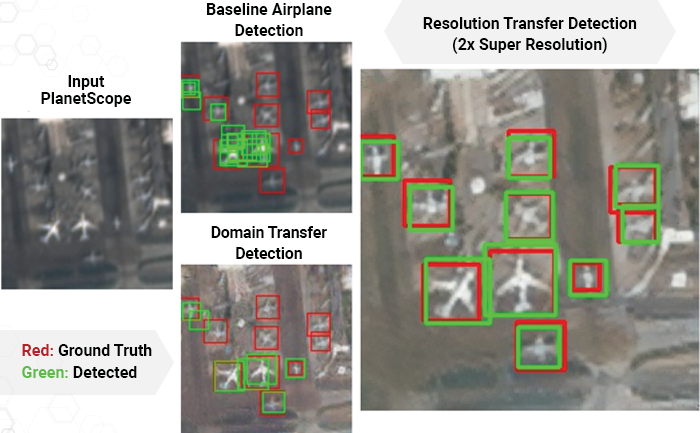

Performing fine-grained classification of aircraft from satellite imagery is challenging due to subtle inter-class differences and long-tailed training sample distributions. Kitware developed Low-Shot Attribute Value Analysis (LAVA) to address these challenges. First, we show that automatic keypoint detection using convolutional neural networks (CNNs) can effectively find the nose, tail, and wingtips of aircraft in satellite imagery. Second, because CNNs are not rotation equivariant, they are typically trained with data augmentation to be rotation invariant. We hypothesize that this invariance restricts the ability of CNNs to learn valuable features for fine-grained classification. Instead of relying on learned invariance, we rotate aircraft into a canonical orientation during both training and testing, which enables better feature learning and improves performance. We canonicalize each aircraft by determining its heading from the keypoints detected at the nose, tail, and each wingtip, and then synthetically rotating it so the nose points to the upper-left corner of each training and test image chip. Third, we show that breaking fine-grained object classification into attribute-based sub-classifications, in the form of predicting wing, engine, fuselage, and tail (WEFT) features, outperforms direct prediction of aircraft type while offering better explanations via WEFT attributes (e.g., two engines, swept wings, rounded nose). Because different aircraft types may share similar WEFT features but differ in measured dimensions, mensuration from the detected keypoints, including handling of aircraft with variable-sweep wings, is used to filter candidate types. While our results focus on aircraft, keypoint prediction, mensuration, canonicalization, and attribute-based classification apply generally to fine-grained object classification in remote sensing.
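The canonicalization and mensuration steps described above can be sketched with a little geometry: the tail-to-nose vector gives the heading, a 2D rotation brings that heading to the upper-left diagonal, and the wingtip-to-wingtip distance times the ground sample distance gives a wingspan estimate. This is a hypothetical illustration, not LAVA's code; the keypoint dictionary layout, function names, and the choice of the nose-tail midpoint as the rotation center are assumptions. Image coordinates are taken as x to the right and y downward.

```python
import numpy as np

TARGET_ANGLE = np.arctan2(-1.0, -1.0)  # "upper left" in image coordinates (x right, y down)

def heading(nose, tail):
    """Angle (radians, image coordinates) of the tail-to-nose vector."""
    d = np.asarray(nose, float) - np.asarray(tail, float)
    return np.arctan2(d[1], d[0])

def canonicalize_keypoints(kps):
    """Rotate keypoints about the nose-tail midpoint so the nose points to the upper left.

    kps: dict with 'nose', 'tail', 'left_wing', 'right_wing' -> (x, y) pixel coordinates.
    Returns the rotated keypoints and the rotation angle that was applied.
    """
    theta = TARGET_ANGLE - heading(kps["nose"], kps["tail"])
    c, s = np.cos(theta), np.sin(theta)
    rot = np.array([[c, -s], [s, c]])        # adds theta to every vector's atan2 angle
    center = (np.asarray(kps["nose"], float) + np.asarray(kps["tail"], float)) / 2
    out = {k: tuple(rot @ (np.asarray(v, float) - center) + center) for k, v in kps.items()}
    return out, theta

def wingspan_m(kps, gsd_m):
    """Wingtip-to-wingtip distance in meters, assuming a known ground sample distance."""
    return float(np.linalg.norm(np.subtract(kps["left_wing"], kps["right_wing"]))) * gsd_m
```

The same angle would be used to rotate the image chip itself; check the sign convention of whichever image-rotation routine you use, since libraries differ on whether positive angles rotate clockwise in image coordinates.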

This presentation summarizes work recently completed with NGA funding under the SAFFIRE program, in which we characterized the performance of machine learning-based object detection models as a function of sensor design parameters, specifically for satellites and the resulting overhead imagery. As part of the study, Kitware extended its eXplainable Artificial Intelligence ToolKit (XAITK) to measure the performance of visual detectors over a range of sensor parameters. This extension leverages an image simulation tool called pyBSM to re-render imagery consistent with different sensor and/or platform configurations. Applying this set of tools to commercial satellite imagery, we characterized the performance of detectors for aircraft and several ground vehicle classes in the NIIRS 5-7 range. Experiments showed that the performance of deep network-based detectors degrades smoothly within the tested range of parameters. In a separate experiment, we attempted to validate a previously reported finding that the bit depth of overhead imagery has a significant impact on downstream detection; our results contradicted this earlier work, as we found no significant effect from bit depth. Lastly, we used an end-to-end deep learning algorithm to select sparse multispectral bands from commercial satellite imagery, co-optimized with downstream detection, but found only minimal differences depending on which bands were selected. Kitware also performed experiments with classified imagery, using methodologies similar to those applied to unclassified data.
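Two of the sensor parameters mentioned above can be illustrated with back-of-the-envelope code. For a simple nadir-looking pinhole model, ground sample distance is altitude times detector pixel pitch divided by focal length, and a bit-depth experiment can be emulated by discarding least significant bits. The sketch below is only that: hypothetical helper functions under idealized assumptions. It is not pyBSM, which models optics, blur, and noise far more completely, and it does not reproduce the study's results.

```python
import numpy as np

def gsd_m(altitude_m, pixel_pitch_m, focal_length_m):
    """Nadir ground sample distance for an idealized pinhole camera: altitude * pitch / focal length."""
    return altitude_m * pixel_pitch_m / focal_length_m

def requantize(img, src_bits, dst_bits):
    """Drop least significant bits (e.g., 11-bit -> 8-bit) to emulate a lower bit depth."""
    if dst_bits > src_bits:
        raise ValueError("can only reduce bit depth")
    return (np.asarray(img, dtype=np.uint16) >> (src_bits - dst_bits)).astype(np.uint16)
```

For example, a detector with 8 µm pixels behind a 4 m focal length at 500 km altitude gives a GSD of about 1 m; feeding a detector both the original and requantized versions of the same chips is one simple way to probe bit-depth sensitivity.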

Kitware’s Computer Vision Focus Areas

Deep Learning

Through our extensive experience in AI and our early adoption of deep learning, we have made dramatic improvements in object detection, recognition, tracking, activity detection, semantic segmentation, and content-based retrieval. In doing so, we have addressed different customer domains with unique data, as well as operational challenges and needs. Our expertise focuses on hard visual problems, such as low resolution, very small training sets, rare objects, long-tailed class distributions, large data volumes, real-time processing, and onboard processing. Each of these requires us to creatively utilize and expand upon deep learning, which we apply to our other computer vision areas of focus to deliver more innovative solutions.

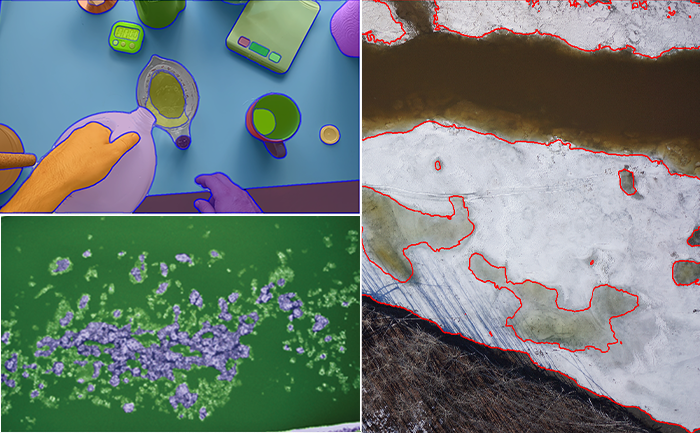

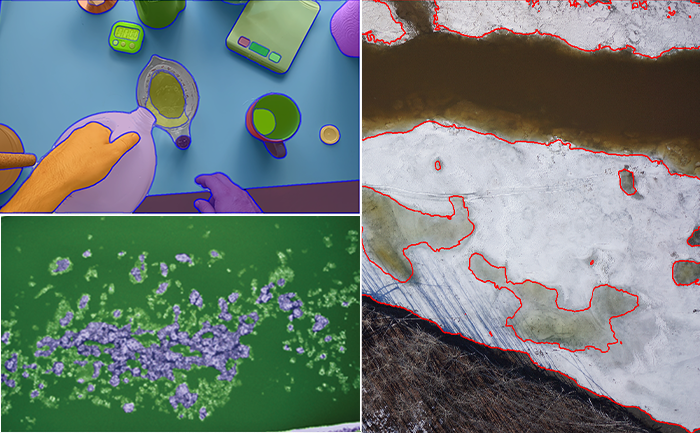

Dataset Collection and Annotation

The growth in deep learning has increased the demand for quality, labeled datasets needed to train models and algorithms. The power of these models and algorithms greatly depends on the quality of the training data available. Kitware has developed and cultivated dataset collection, annotation, and curation processes to build powerful capabilities that are unbiased and accurate, and not riddled with errors or false positives. Kitware can collect and source datasets and design custom annotation pipelines. We can annotate image, video, text and other data types using our in-house, professional annotators, some of whom have security clearances, or leverage third-party annotation resources when appropriate. Kitware also performs quality assurance that is driven by rigorous metrics to highlight when returns are diminishing. All of this data is managed by Kitware for continued use to the benefit of our customers, projects, and teams.
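As one example of a metric that can drive annotation quality assurance, Cohen's kappa measures how often two annotators agree on the same items beyond what chance would predict (1.0 is perfect agreement, 0.0 is chance level). The sketch below is a plain-Python illustration; it is not a description of Kitware's actual QA pipeline, and the function name is hypothetical.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Probability that both annotators pick the same label by chance.
    expected = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # degenerate case: both used one identical label; treated as perfect agreement
    return (observed - expected) / (1 - expected)
```

Tracking such a score as more annotation passes are added is one way to see when extra review stops improving consistency.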

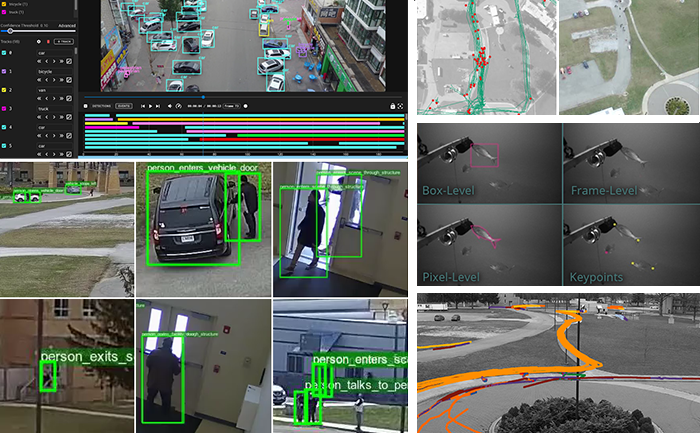

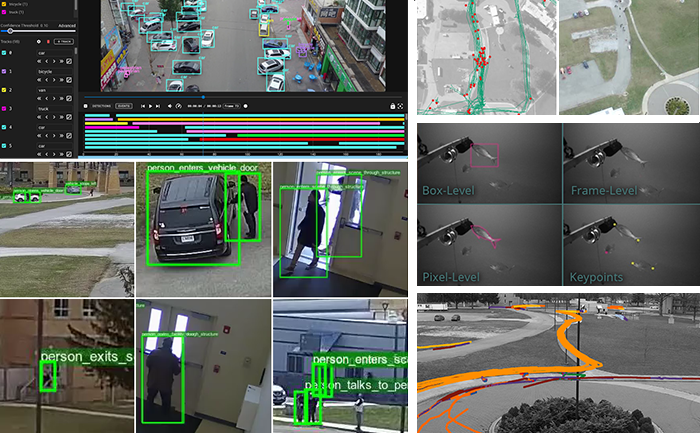

Interactive Do-It-Yourself Artificial Intelligence

DIY AI enables end users – analysts, operators, engineers – to rapidly build, test, and deploy novel AI solutions without having expertise in machine learning or even computer programming. Using Kitware’s interactive DIY AI toolkits, you can easily and efficiently train object classifiers using interactive query refinement, without drawing any bounding boxes. You are able to interactively improve existing capabilities to work optimally on your data for tasks such as object tracking, object detection, and event detection. Our toolkits also allow you to perform customized, highly specific searches of large image and video archives powered by cutting-edge AI methods. Currently, our DIY AI toolkits, such as VIAME, are used by scientists to analyze environmental images and video. Our defense-related versions are being used to address multiple domains and are provided with unlimited rights to the government. These toolkits enable long-term operational capabilities even as methods and technology evolve over time.
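Interactive query refinement can be pictured as relevance feedback over image embeddings: the user marks results as relevant or not, the query moves toward the relevant examples and away from the rest, and the archive is re-ranked. The sketch below uses the classic Rocchio update with commonly used textbook weights as a stand-in technique; it is an assumption for illustration, not the method implemented in VIAME or Kitware's other DIY AI toolkits, and the function names are hypothetical.

```python
import numpy as np

def rocchio_update(query, positives, negatives, alpha=1.0, beta=0.75, gamma=0.15):
    """Rocchio relevance feedback: move the query toward liked and away from disliked embeddings."""
    q = alpha * np.asarray(query, float)
    if len(positives):
        q = q + beta * np.mean(positives, axis=0)
    if len(negatives):
        q = q - gamma * np.mean(negatives, axis=0)
    return q

def rank(query, database):
    """Indices of database embeddings sorted by cosine similarity to the query, best first."""
    db = np.asarray(database, float)
    db = db / np.linalg.norm(db, axis=1, keepdims=True)
    q = np.asarray(query, float)
    q = q / np.linalg.norm(q)
    return np.argsort(-(db @ q))
```

Each round of feedback repeats the update and re-ranking, so the user never draws a bounding box; they only accept or reject results.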

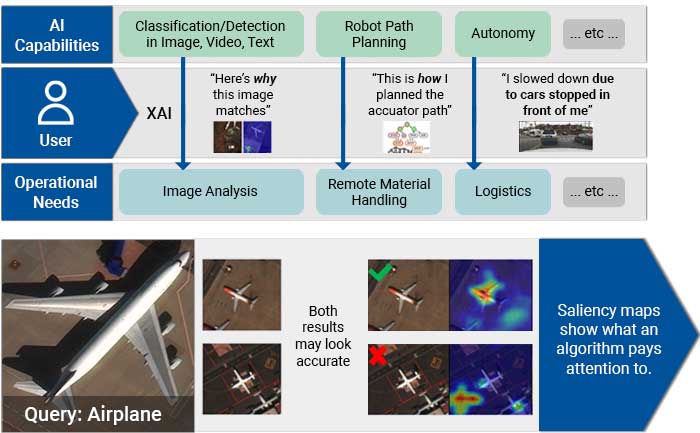

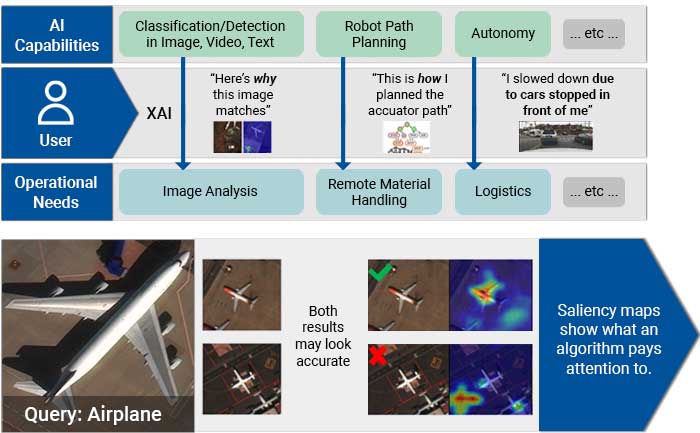

Explainable and Ethical AI

Integrating AI via human-machine teaming can greatly improve capabilities and competence as long as the team has a solid foundation of trust. To trust your AI partner, you must understand how the technology makes decisions and feel confident in those decisions. Kitware has developed powerful tools, such as the Explainable AI Toolkit (XAITK), to explore, quantify, and monitor the behavior of deep learning systems. Our team is making deep neural networks explainable and robust when faced with previously unknown conditions. In addition, our team is stepping outside of classic AI systems to address domain-independent novelty identification, characterization, and adaptation so that systems can recognize when unknowns are introduced. We also recognize the need to understand the ethical concerns, impacts, and risks of using AI. That’s why Kitware is developing methods to understand, formulate, and test ethical reasoning algorithms for semi-autonomous applications. Kitware is proud to be part of the AI Safety Institute Consortium (AISIC), a U.S. Department of Commerce consortium dedicated to advancing the development and deployment of safe, trustworthy AI.
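A simple way to explore what drives a model's decision is black-box occlusion saliency: mask each region of the input in turn and record how much the model's score drops. The sketch below shows that generic technique under stated assumptions (a single-band image, a `score_fn` that returns one scalar, and hypothetical function and parameter names); it is not XAITK's API.

```python
import numpy as np

def occlusion_saliency(image, score_fn, patch=8, stride=8, fill=0.0):
    """Per-pixel saliency: average drop in score_fn(image) when patches covering the pixel are masked."""
    h, w = image.shape[:2]
    base = score_fn(image)
    heat = np.zeros((h, w))
    hits = np.zeros((h, w))
    for y in range(0, h - patch + 1, stride):
        for x in range(0, w - patch + 1, stride):
            masked = image.copy()
            masked[y:y + patch, x:x + patch] = fill   # hide one region from the model
            drop = base - score_fn(masked)
            heat[y:y + patch, x:x + patch] += drop
            hits[y:y + patch, x:x + patch] += 1
    return heat / np.maximum(hits, 1)                  # pixels never covered stay at zero
```

Because it only calls the model, this approach works with any detector or classifier, at the cost of one forward pass per masked region.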

Disinformation Detection

In this new age of disinformation, it has become critical to validate the integrity and veracity of images, video, and other sources. As photo manipulation and generation techniques evolve rapidly, we continue to develop algorithms that automatically detect image and video manipulation and can operate at scale on large data archives. These advanced deep learning algorithms give us the ability to detect inserted, removed, or altered objects, distinguish deep fakes from real images, and identify deleted or inserted frames in videos in a way that exceeds human performance. We continue to extend this work through multiple government programs to detect manipulations in falsified media exploiting text, audio, images, and video.

Semantic Segmentation

Kitware’s knowledge-driven scene understanding capabilities use deep learning techniques to accurately segment scenes into object types. In video, our unique approach defines objects by behavior, rather than appearance, so we can identify areas with similar behaviors. Through observing mover activity, our capabilities can segment a scene into functional object categories that may not be distinguishable by appearance alone. These capabilities are unsupervised so they automatically learn new functional categories without any manual annotations. Semantic scene understanding improves downstream capabilities such as threat detection, anomaly detection, change detection, 3D reconstruction, and more.

Super Resolution and Enhancement

Kitware’s super-resolution techniques enhance single or multiple images to produce higher-resolution, improved images. We use novel methods to compensate for widely spaced views and illumination changes in overhead imagery, particulates and light attenuation in underwater imagery, and other challenges in a variety of domains. The resulting higher-quality images enhance detail, enable advanced exploitation, and improve downstream automated analytics, such as object detection and classification.

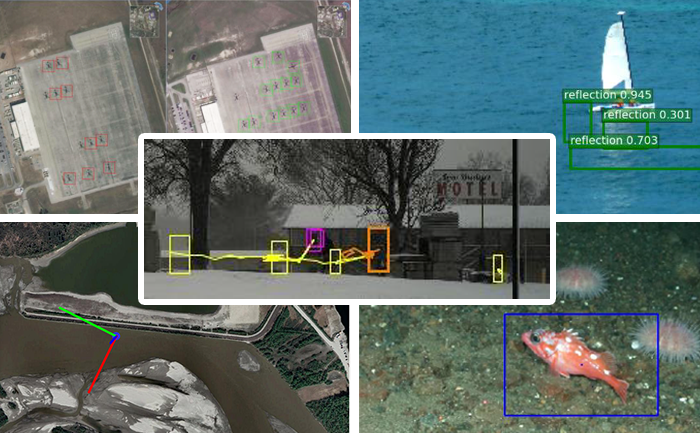

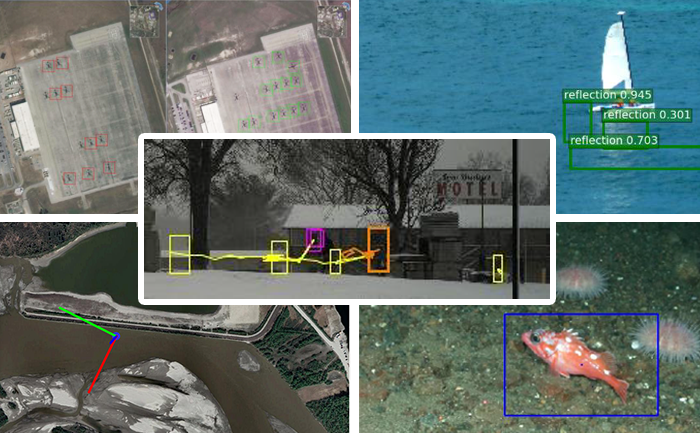

Object Detection, Recognition, and Tracking

Our video object detection and tracking tools are the culmination of years of continuous government investment. Deployed operationally in various domains, our mature suite of trackers can identify and track moving objects in many types of intelligence, surveillance, and reconnaissance (ISR) data, including video from ground cameras, aerial platforms, underwater vehicles, robots, and satellites. These tools perform in challenging settings and address difficult factors, such as low contrast, low resolution, moving cameras, occlusions, shadows, and high traffic density, through multi-frame track initialization, track linking, reverse-time tracking, recurrent neural networks, and other techniques. Our trackers can perform difficult tasks, including ground camera tracking in congested scenes, real-time multi-target tracking in full-field WAMI and OPIR, and tracking people in far-field, non-cooperative scenarios.
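At the core of most trackers is data association: deciding which new detection continues which existing track. The sketch below shows a common minimal baseline, greedy matching by bounding-box intersection-over-union (IoU) between each track's last box and the current frame's detections. It is an illustrative assumption with hypothetical names and a hypothetical IoU threshold, far simpler than the multi-frame initialization, track linking, and learned techniques described above.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def associate(tracks, detections, min_iou=0.3):
    """Greedily link each track's last box to at most one new detection, best IoU first.

    tracks: dict track_id -> last box; detections: list of boxes.
    Returns (matches {track_id: detection_index}, unmatched detection indices).
    """
    pairs = sorted(
        ((iou(box, det), tid, j) for tid, box in tracks.items() for j, det in enumerate(detections)),
        reverse=True,
    )
    matches, used = {}, set()
    for score, tid, j in pairs:
        if score < min_iou:
            break                        # remaining pairs overlap too little to link
        if tid in matches or j in used:
            continue                     # each track and detection is used at most once
        matches[tid] = j
        used.add(j)
    unmatched = [j for j in range(len(detections)) if j not in used]
    return matches, unmatched
```

Unmatched detections would typically seed new tentative tracks, and tracks left unmatched for several frames would be terminated or handed to a track-linking stage.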

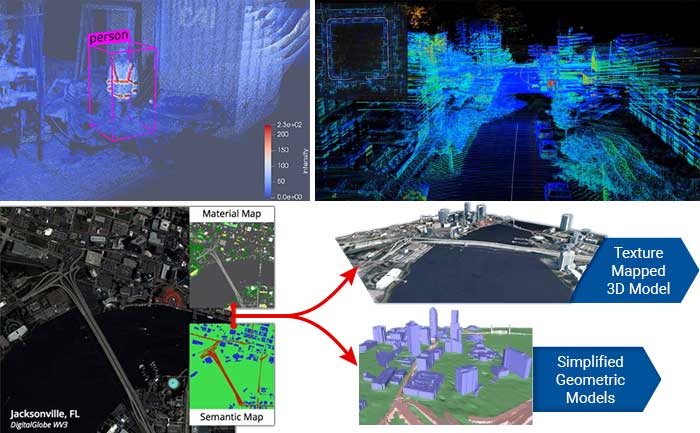

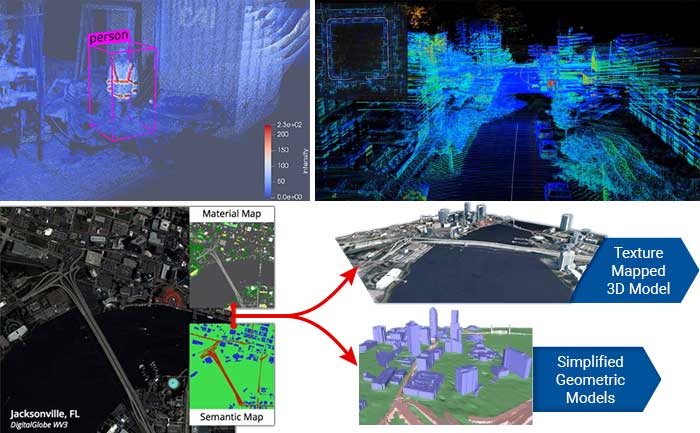

3D Reconstruction, Point Clouds, and Odometry

Kitware’s algorithms can extract 3D point clouds and surface meshes from video or images without metadata or calibration information, while exploiting that information when it is available. Operating on these 3D datasets, or on others from LiDAR and other depth sensors, our methods jointly estimate scene semantics and 3D reconstruction to maximize the accuracy of object classification, visual odometry, and 3D shape. Our open source 3D reconstruction toolkit, TeleSculptor, continuously evolves to incorporate advances for automatically analyzing, visualizing, and making measurements from images and video. LidarView, another open source toolkit developed specifically for LiDAR data, performs 3D point cloud visualization and analysis and fuses data, techniques, and algorithms to provide SLAM and other capabilities.
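When calibration is available, the basic link between a depth image and a point cloud is pinhole back-projection: each pixel (u, v) with depth z maps to X = (u - cx) z / fx and Y = (v - cy) z / fy in the camera frame. The sketch below assumes known intrinsics (fx, fy, cx, cy) and depth measured along the optical axis; the function name is hypothetical, and this is not TeleSculptor's or LidarView's API.

```python
import numpy as np

def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth map (meters along the optical axis) into an N x 3 camera-frame point cloud."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))   # pixel column (u) and row (v) indices
    z = depth.astype(np.float64)
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    pts = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    return pts[pts[:, 2] > 0]                          # drop pixels with no valid depth
```

Points from multiple frames can then be transformed by each frame's estimated camera pose into a common world frame, which is where odometry and reconstruction come together.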

Cyber-Physical Systems

The physical environment presents a unique, ever-changing set of challenges to any sensing and analytics system. Kitware has designed and constructed state-of-the-art cyber-physical systems that perform onboard, autonomous processing to gather data and extract critical information. Computer vision and deep learning technology allow our sensing and analytics systems to overcome the challenges of a complex, dynamic environment. They are customized to solve real-world problems in aerial, ground, and underwater scenarios. These capabilities have been field-tested and proven successful in programs funded by R&D organizations such as DARPA, AFRL, and NOAA.

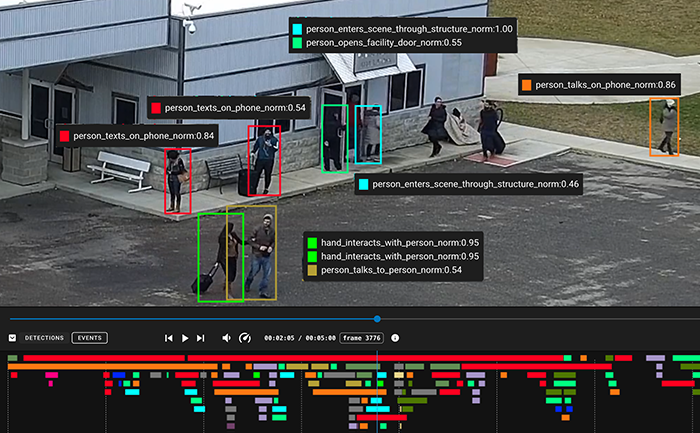

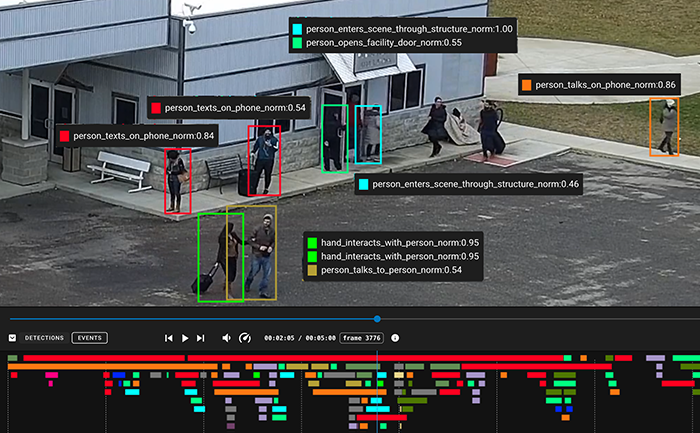

Complex Activity, Event, and Threat Detection

Kitware’s tools recognize high-value events, salient behaviors and anomalies, complex activities, and threats through the interaction and fusion of low-level actions and events in dense, cluttered environments. Operating on tracks from WAMI, FMV, MTI, or other sources, these algorithms characterize, model, and detect actions, such as people picking up objects and vehicles starting and stopping, along with complex threat indicators, such as people transferring between vehicles and multiple vehicles meeting. Many of our tools provide alerts for behaviors, activities, and events of interest, along with highly efficient search through huge data volumes, such as full-frame WAMI missions, using approximate matching. This allows you to identify actions in massive video streams and archives to detect threats, despite missing data, detection errors, and deception.

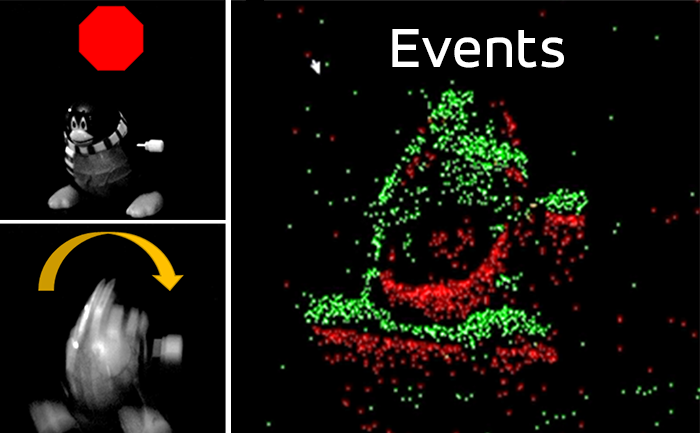

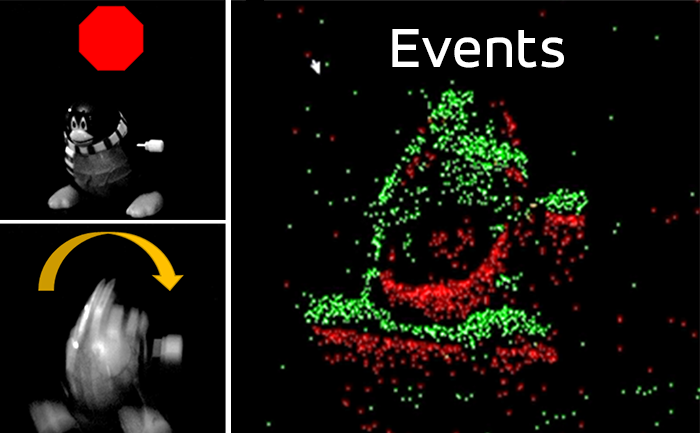

Computational Imaging

The success or failure of computer vision algorithms is often determined upstream, when images are captured with poor exposure or insufficient resolution that can negatively impact downstream detection, tracking, or recognition. Recognizing that an increasing share of imagery is consumed exclusively by software and may never be presented visually to a human viewer, computational imaging approaches co-optimize sensing and exploitation algorithms to achieve more efficient, effective outcomes than are possible with traditional cameras. Conceptually, this means thinking of the sensor as capturing a data structure from which downstream algorithms can extract meaningful information. Kitware’s customers in this growing area of emphasis include IARPA, AFRL, and MDA, for whom we’re mitigating atmospheric turbulence and performing recognition on unresolved targets for applications such as biometrics and missile detection.

Kitware’s Automated Image and Video Analysis Platforms

Custom Solutions Built for You

Contact us to learn how we can help you solve your challenging intelligence problems using our software R&D expertise in AI and computer vision.