iMSTK 4.0 Released

We are pleased to announce the release of iMSTK version 4.0. The Interactive Medical Simulation Toolkit (iMSTK) is an open-source toolkit that allows faster prototyping of medical trainers and planners. iMSTK features libraries for comprehensive physics simulation, haptics, advanced rendering and visualization, hardware interfacing, geometric processing, collision detection, contact modeling, and numerical solvers.

In this 4.0 release, major improvements were made to the simulation execution pipeline, geometry module, virtual reality support, and haptics. Extensive refactoring and numerous bug fixes were also made across the codebase to allow extensibility of certain classes, improve clarity, separate roles for different iMSTK libraries, and enforce consistent design and conventions across the toolkit.

Release Highlights

Major

- Implicit Geometry: ImplicitGeometry was introduced to iMSTK. It covers analytical geometry and signed distance fields, and gives iMSTK extremely fast collision detection and haptic response: a point contact can be produced in O(1) by sampling the signed distance and its gradient. These contacts can then be used in most constraint-based solvers (position-based dynamics and rigid body dynamics).

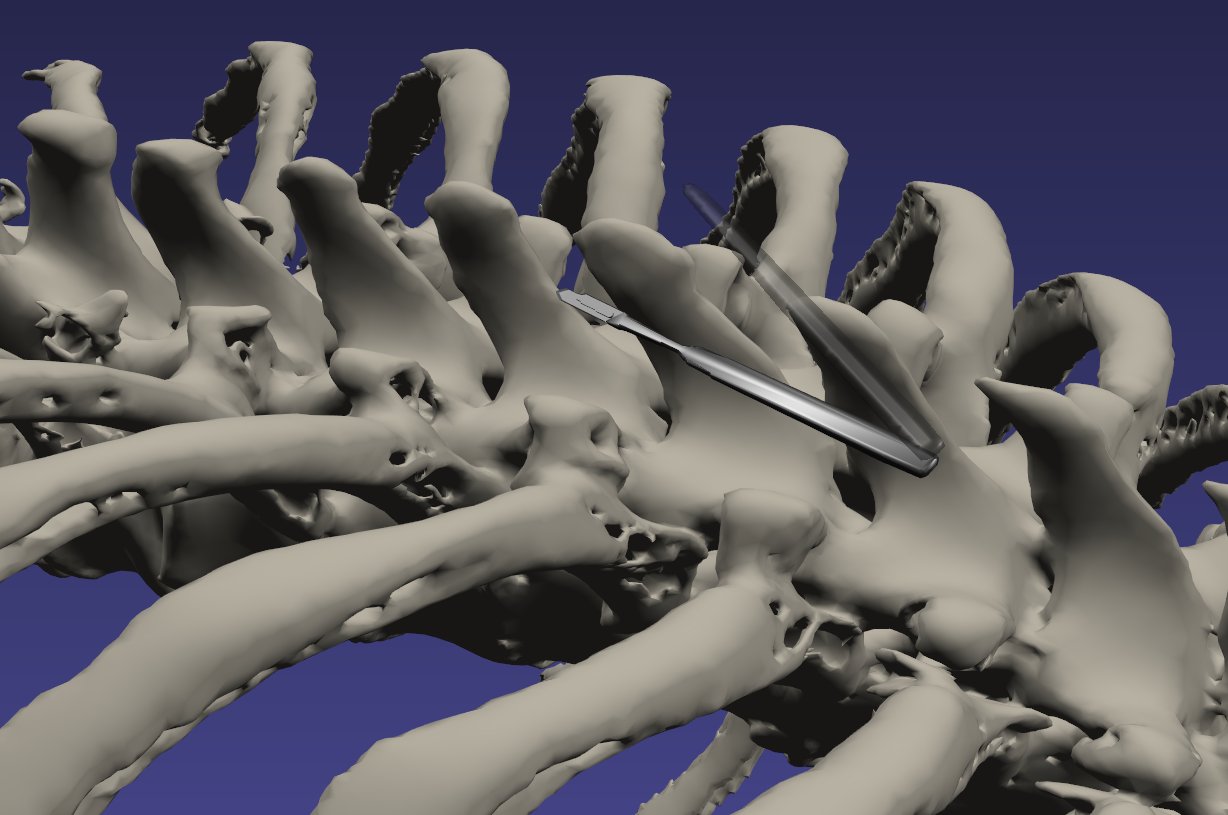
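To make the O(1) contact idea concrete, here is a minimal sketch for an analytical sphere. It is not iMSTK code: `SphereSdf`, `PointContact`, and `sampleContact` are hypothetical names invented for this illustration, and the sketch assumes the common convention that the signed distance is negative inside the shape.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Hypothetical illustration types; these are NOT iMSTK classes.
using Vec3 = std::array<double, 3>;

struct SphereSdf
{
    Vec3   center;
    double radius;

    // Distance from the sphere center to p.
    double centerDistance(const Vec3& p) const
    {
        const double dx = p[0] - center[0];
        const double dy = p[1] - center[1];
        const double dz = p[2] - center[2];
        return std::sqrt(dx * dx + dy * dy + dz * dz);
    }

    // Signed distance: negative inside the sphere, positive outside.
    double distance(const Vec3& p) const { return centerDistance(p) - radius; }

    // Gradient of the signed distance: unit vector pointing away from the center.
    Vec3 gradient(const Vec3& p) const
    {
        const double len = centerDistance(p);
        if (len == 0.0)
        {
            return Vec3{{0.0, 1.0, 0.0}}; // Arbitrary direction at the center
        }
        return Vec3{{(p[0] - center[0]) / len, (p[1] - center[1]) / len, (p[2] - center[2]) / len}};
    }
};

struct PointContact
{
    Vec3   point;  // Closest point on the surface
    Vec3   normal; // Outward surface normal
    double depth;  // Penetration depth (positive)
};

// One distance sample plus one gradient sample yields a contact, with no search.
bool sampleContact(const SphereSdf& sdf, const Vec3& p, PointContact& out)
{
    const double d = sdf.distance(p);
    if (d >= 0.0)
    {
        return false; // Outside or exactly on the surface: no penetration
    }
    const Vec3 n = sdf.gradient(p);
    // Move p along the normal by the penetration depth to reach the surface.
    out.point  = Vec3{{p[0] - d * n[0], p[1] - d * n[1], p[2] - d * n[2]}};
    out.normal = n;
    out.depth  = -d;
    assert(out.depth > 0.0);
    return true;
}
```

For a unit sphere at the origin, the query point (0.5, 0, 0) gives a contact at (1, 0, 0) with depth 0.5. A sampled signed distance field would follow the same pattern, replacing the analytical functions with grid lookups.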

- Level sets: Basic level set evolution on regular grids was added. We’ve found it useful for fast (>1000 Hz) bone shaving, sawing, and burring type surgical actions. Our ImplicitGeometryCCD works well with level sets because it samples the level set only at the zero-crossing.
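As a rough illustration of what level set evolution on a regular grid involves, the sketch below advances a 1D signed distance function with a first-order upwind scheme. It is a textbook-style example under stated assumptions, not iMSTK's implementation: the function name `evolveLevelSet1D` is hypothetical, the grid is 1D for brevity, and a negative speed is assumed to mean that the region where phi < 0 shrinks (material is removed).

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// One explicit step of  phi_t + speed * |dphi/dx| = 0  on a uniform 1D grid.
// Hypothetical helper for illustration; not part of iMSTK.
void evolveLevelSet1D(std::vector<double>& phi, double h, double speed, double dt)
{
    assert(phi.size() >= 2);
    assert(std::fabs(speed) * dt <= h); // CFL condition for stability

    const std::size_t   n = phi.size();
    std::vector<double> next(n);
    for (std::size_t i = 0; i < n; ++i)
    {
        // One-sided differences; at the grid ends reuse the available one.
        const double dMinus = (i > 0) ? (phi[i] - phi[i - 1]) / h : (phi[1] - phi[0]) / h;
        const double dPlus  = (i + 1 < n) ? (phi[i + 1] - phi[i]) / h : (phi[n - 1] - phi[n - 2]) / h;

        // Upwind selection: use the differences on the side the front comes from.
        double a = 0.0;
        double b = 0.0;
        if (speed > 0.0)
        {
            a = std::max(dMinus, 0.0);
            b = std::min(dPlus, 0.0);
        }
        else
        {
            a = std::max(dPlus, 0.0);
            b = std::min(dMinus, 0.0);
        }
        next[i] = phi[i] - dt * speed * std::sqrt(a * a + b * b);
    }
    phi.swap(next);
}
```

Starting from phi(x) = |x - 0.5| - 0.3 and stepping with speed -1 for a total time of 0.1, the zero-crossing moves from x = 0.8 to x = 0.7, so the "solid" interval shrinks by 0.1 on each side. In a cutting scenario the speed would typically be non-zero only near the tool.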

- Alternate Rigid Body Dynamics model: RigidBodyModel2, based on the constraint-based formulation (see Tamis et al.’s review paper), was added to iMSTK. This in-house implementation provides an API to customize constraints and contacts.

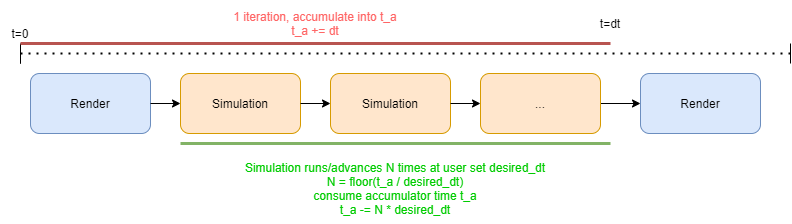

- SimulationManager and Module Refactoring: This refactoring provides a sequential sub-stepping approach to our execution pipeline, similar to Unity. It keeps the timestep fixed while varying the number of simulation updates per frame. A fixed timestep is preferred because it keeps simulations stable, while the varying number of simulation steps keeps the simulation independent of the performance of the machine it runs on (allowing reproducibility). This is controlled through the desired timestep of the SimulationManager. Read more here.
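The sub-stepping idea can be sketched with the familiar time-accumulator pattern. This is a generic illustration rather than the SimulationManager implementation; the class `FixedStepDriver` and its `advance` method are hypothetical names.

```cpp
#include <cassert>

// Hypothetical driver illustrating fixed-timestep sub-stepping; NOT iMSTK's SimulationManager.
class FixedStepDriver
{
public:
    explicit FixedStepDriver(double desiredDt) : m_dt(desiredDt)
    {
        assert(desiredDt > 0.0);
    }

    // Call once per rendered frame with the wall-clock time the frame took.
    // Runs as many fixed-size simulation steps as fit in the accumulated time
    // and returns how many were executed.
    template<class StepFn>
    int advance(double frameTime, StepFn&& step)
    {
        m_accumulator += frameTime;
        int steps = 0;
        while (m_accumulator >= m_dt)
        {
            step(m_dt); // The simulation always sees the same timestep
            m_accumulator -= m_dt;
            ++steps;
        }
        return steps; // Leftover time is carried over to the next frame
    }

private:
    double m_dt;
    double m_accumulator = 0.0;
};
```

With a timestep of 0.25 s, a frame that took 0.875 s triggers three simulation steps and carries 0.125 s over; a following 0.125 s frame triggers one more. A slow machine therefore runs more steps per frame, but every step uses the same timestep.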

- Event system: A new event system was added to iMSTK via the addition of the EventObject. EventObjects should be used for anything that needs to emit or receive an event. They have a set of queued and direct observers as well as a message queue.

- Direct events: Directly calls the observer on the same thread when the event is emitted.

- Queued events: Queues the event to the observer’s queue. The observer can consume it whenever it wants, which could be on a different thread or at a later time.
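The difference between direct and queued delivery can be shown with a small stand-alone sketch. `MiniEventObject` and its methods are hypothetical and much simpler than iMSTK's EventObject: events are plain strings, and the sketch is single-threaded, so a real cross-thread version would need to protect the queue with a lock.

```cpp
#include <functional>
#include <queue>
#include <string>
#include <utility>
#include <vector>

// Hypothetical, simplified stand-in for an event-emitting object; NOT iMSTK's EventObject.
class MiniEventObject
{
public:
    using Handler = std::function<void(const std::string&)>;

    // Direct observer: called immediately inside emit(), on the emitting thread.
    void connectDirect(Handler handler) { m_direct.push_back(std::move(handler)); }

    // Queued observer: the call is stored in the observer's queue until it consumes it.
    void connectQueued(MiniEventObject* observer, Handler handler)
    {
        m_queued.push_back(std::make_pair(observer, std::move(handler)));
    }

    void emit(const std::string& event)
    {
        for (const Handler& handler : m_direct)
        {
            handler(event);
        }
        for (const auto& entry : m_queued)
        {
            const Handler handler = entry.second;
            entry.first->m_pending.push([handler, event]() { handler(event); });
        }
    }

    // Run every call queued for this object, in the order received.
    void consumeAll()
    {
        while (!m_pending.empty())
        {
            std::function<void()> call = std::move(m_pending.front());
            m_pending.pop();
            call();
        }
    }

private:
    std::vector<Handler> m_direct;
    std::vector<std::pair<MiniEventObject*, Handler>> m_queued;
    std::queue<std::function<void()>> m_pending; // Not thread-safe in this sketch
};
```

After `sender.emit("keyPress")`, a direct handler has already run, while a handler registered with `sender.connectQueued(&receiver, ...)` runs only once `receiver.consumeAll()` is called.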

- iMSTK Data Arrays: A dynamic array implementation is now provided in iMSTK that supports events and memory mapping. Apart from construction cost, its measured performance matched that of our previously used STL arrays.

- Revamped the documentation

Minor

- New Geometry Filters: Complete list of available filters here. Notable additions include LocalMarchingCubes, ImageDistanceTransform, ImplicitGeometryToImage, SurfaceMeshDistanceTransform, and FastMarch.

- Geometry Attributes: With the addition of our dynamic arrays, we now store all our attributes (similar to VTK’s) in abstract data arrays. You may store per-cell or per-vertex normals, scalars, displacements, transforms, UV coordinates, etc.

- Multi-Viewer Support: RenderDelegates used to rely on flags in the geometry to observe geometry changes. Now that they use events, we can have multiple viewers of the same scene. This includes viewers of different types, such as the VTKViewer and VTKOpenVRViewer.

- Shared RenderMaterials: VisualModels may now share materials. Their changes are also event-based now.

- Camera refactor: View matrix can now be set independently of focal point and position allowing things like lerp-ed and slerp-ed camera transitions.

- Refactor transforms: The Geometry base class now uses only a compound 4×4 transform matrix, as opposed to separate transforms that were eventually combined into a 4×4 matrix anyway.
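For readers unfamiliar with compound transforms, the sketch below shows how separate translation, rotation, and scale matrices collapse into a single 4×4 matrix that is then applied in one step. It uses plain arrays instead of iMSTK or Eigen types, and every name in it (`Mat4`, `multiply`, `translation`, `rotationZ`, `uniformScale`, `transformPoint`) is hypothetical.

```cpp
#include <array>
#include <cmath>
#include <cstddef>

// Hypothetical helper types for illustration; NOT iMSTK or Eigen types.
using Vec3 = std::array<double, 3>;
using Mat4 = std::array<std::array<double, 4>, 4>; // Row-major, column-vector convention

Mat4 identity()
{
    Mat4 m{}; // Zero-initialized
    for (std::size_t i = 0; i < 4; ++i)
    {
        m[i][i] = 1.0;
    }
    return m;
}

Mat4 multiply(const Mat4& a, const Mat4& b)
{
    Mat4 r{};
    for (std::size_t i = 0; i < 4; ++i)
    {
        for (std::size_t j = 0; j < 4; ++j)
        {
            for (std::size_t k = 0; k < 4; ++k)
            {
                r[i][j] += a[i][k] * b[k][j];
            }
        }
    }
    return r;
}

Mat4 translation(const Vec3& t)
{
    Mat4 m = identity();
    m[0][3] = t[0];
    m[1][3] = t[1];
    m[2][3] = t[2];
    return m;
}

Mat4 uniformScale(double s)
{
    Mat4 m = identity();
    m[0][0] = m[1][1] = m[2][2] = s;
    return m;
}

Mat4 rotationZ(double radians)
{
    Mat4 m = identity();
    m[0][0] = std::cos(radians);
    m[0][1] = -std::sin(radians);
    m[1][0] = std::sin(radians);
    m[1][1] = std::cos(radians);
    return m;
}

// Apply the compound transform to a point (homogeneous coordinate w = 1).
Vec3 transformPoint(const Mat4& m, const Vec3& p)
{
    Vec3 r{};
    for (std::size_t i = 0; i < 3; ++i)
    {
        r[i] = m[i][0] * p[0] + m[i][1] * p[1] + m[i][2] * p[2] + m[i][3];
    }
    return r;
}
```

Composing `translation({1, 2, 3}) * rotationZ(90°) * uniformScale(2)` once and applying the result to the point (1, 0, 0) gives (1, 4, 3): the point is scaled to (2, 0, 0), rotated to (0, 2, 0), then translated. Storing only the product avoids recombining the parts on every use.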

- PBD Picking CollisionHandler: Fixes PBD vertices to control/grab them.

- Topology changes supported: The topology of meshes may be changed at runtime now, but the dynamic systems governing them won’t change for you automatically.

- imstkNew: Quick way to make shared pointers. For example: imstkNew<Sphere> sphere(Vec3d(0.0, 1.0, 0.0), 5.0);

- Offscreen rendering support through VTK: Allows for headless builds, such as those on WSL.

- Refactor VTKRenderDelegates: Reduced complexity, improved dependencies (the base class was doing way too much before).

API Changes

- VR, Keyboard, and Mouse device refactoring: Mouse and Keyboard are now provided under the same DeviceClient API as our haptic devices. You may acquire these from the viewer. They emit events, and you can also query their state directly.

std::shared_ptr<KeyboardDeviceClient> keyboardDevice = viewer->getKeyboardDevice();

std::shared_ptr<MouseDeviceClient> mouseDevice = viewer->getMouseDevice();

std::shared_ptr<OpenVRDeviceClient> leftVRController = vrViewer->getVRDevice(OPENVR_LEFT_CONTROLLER);

- Controls: Our controls are now abstracted. Any control simply implements a device. You may subclass KeyboardControl or MouseControl. We also provide our own default controls:

// Add mouse and keyboard controls to the viewer

imstkNew<MouseSceneControl> mouseControl(viewer->getMouseDevice());

mouseControl->setSceneManager(sceneManager);

viewer->addControl(mouseControl);

imstkNew<KeyboardSceneControl> keyControl(viewer->getKeyboardDevice());

keyControl->setSceneManager(sceneManager);

keyControl->setModuleDriver(driver);

viewer->addControl(keyControl);

- Event System: Callbacks for key, mouse, haptic, and VR device events can now be connected as in the snippet that follows these notes.

- You may alternatively use queueConnect as long as you consume it somewhere (sceneManager consumes all events given to it).

- Your own custom events may be defined in iMSTK subclasses with the SIGNAL macro. See KeyboardDeviceClient as an example.

connect<KeyEvent>(viewer->getKeyboardDevice(), &KeyboardDeviceClient::keyPress,

sceneManager, [&](KeyEvent* e)

{

std::cout << e->m_key << " was pressed" << std::endl;

});

- iMSTK Data Arrays: Data arrays and multi-component data arrays are provided. They are still compatible with Eigen vector math.

VecDataArray<double, 3> myVertices(3);

myVertices[0] = Vec3d(0.0, 1.0, 0.0);

myVertices[1] = Vec3d(0.0, 1.0, 1.0);

myVertices[2] = myVertices[0] + myVertices[1];

std::cout << myVertices[2] << std::endl;

- SimulationManager may now be set up and launched as follows:

// Setup a Viewer to render the scene

imstkNew<VTKViewer> viewer("Viewer");

viewer->setActiveScene(scene);

// Setup a SceneManager to advance the scene

imstkNew<SceneManager> sceneManager("Scene Manager");

sceneManager->setActiveScene(scene);

sceneManager->pause(); // Start simulation paused

imstkNew<SimulationManager> driver;

driver->addModule(viewer);

driver->addModule(sceneManager);

driver->start();

- VisualObject typedef removed. Use SceneObject instead.

- HDAPIDeviceServer renamed to HapticDeviceManager

- HDAPIDeviceClient renamed to HapticDeviceClient

- VTKViewer split into VTKViewer and VTKOpenVRViewer

Please refer to the release notes for a full list of changes and contributors. For additional information about iMSTK, please follow the links below.

- Website: imstk.org

- Repository: gitlab.kitware.com/iMSTK/iMSTK

- Discourse: discourse.kitware.com/c/imstk

- Documentation: imstk.readthedocs.io/en/latest

- Source documentation: imstk.gitlab.io

Future Work

Next, we will release an iMSTK asset on the Unity Asset Store later this year. A working prototype, currently undergoing testing, has shown substantial improvements in time-to-prototype as well as an improved end-user experience. We are also exploring GPU acceleration of key modules through the extension of the existing task graph.

Acknowledgments

The research reported in this publication was supported, in part, by the following awards from NIH: R44DE027595, 1R44AR075481, 1R01EB025247, 2R44DK115332.

Note: The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH or its institutes.