LiDAR SLAM: spotlight on Kitware’s open source library

Kitware is pleased to present its versatile open source LiDAR SLAM (Simultaneous Localization And Mapping) library, which has been under development since 2018 and has been enriched through various projects over the years. Its Apache 2.0 license allows you to freely copy, modify, and integrate it into any of your projects, commercial or not.

Over the past decade, 3D LiDARs have been increasingly used in many fields such as automotive, robotics, architecture, and surveying. This is mainly due to their decreasing cost, increasing resolution, good accuracy, and long range. This library gathers all the main functionalities needed to precisely map an environment and/or localize a vehicle, robot, or pedestrian using a 3D LiDAR sensor. SLAM is also a powerful pre-processing step for more advanced tasks, such as 3D modeling (in a BIM context, for instance), object detection and segmentation, change detection, and much more.

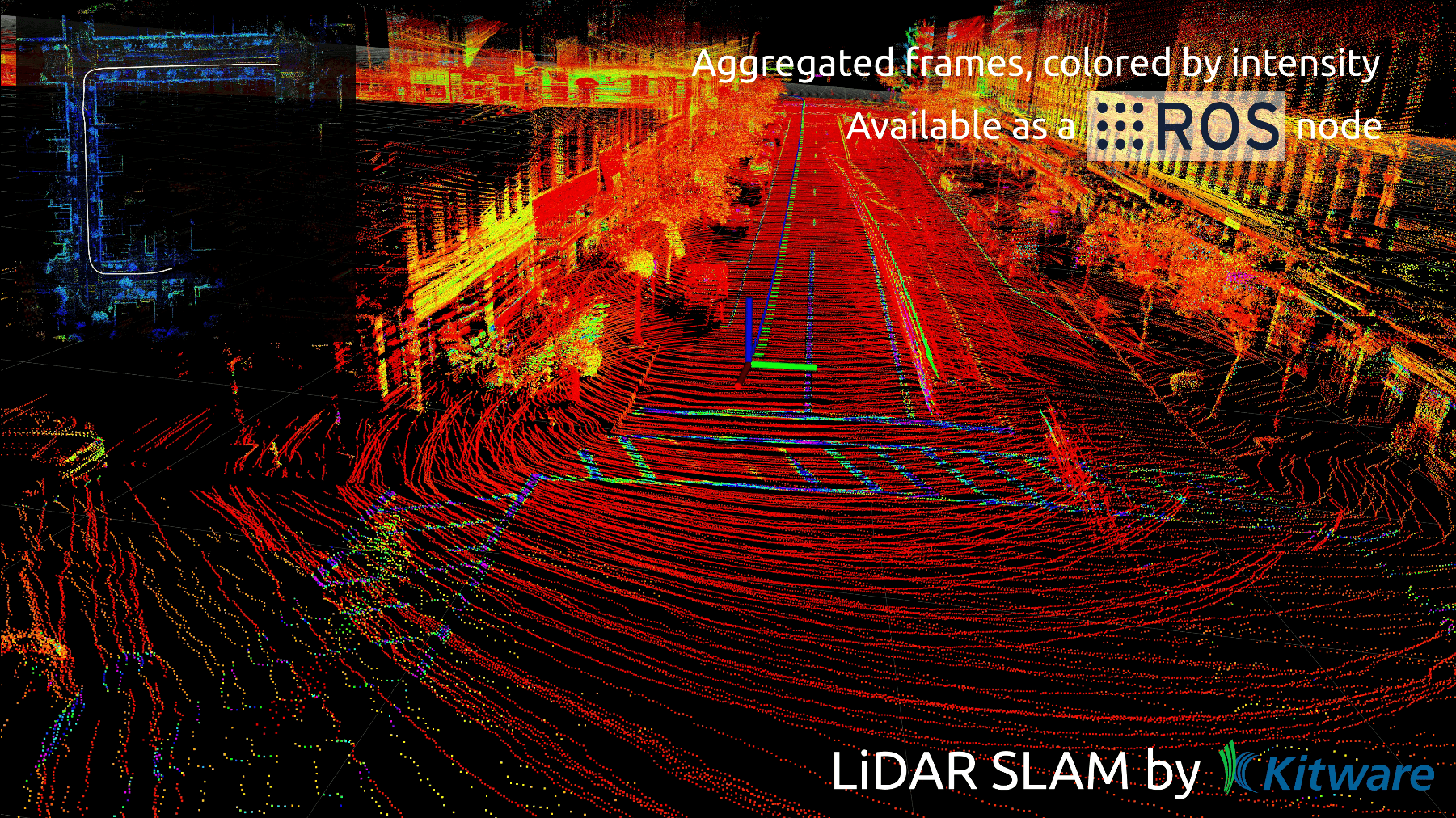

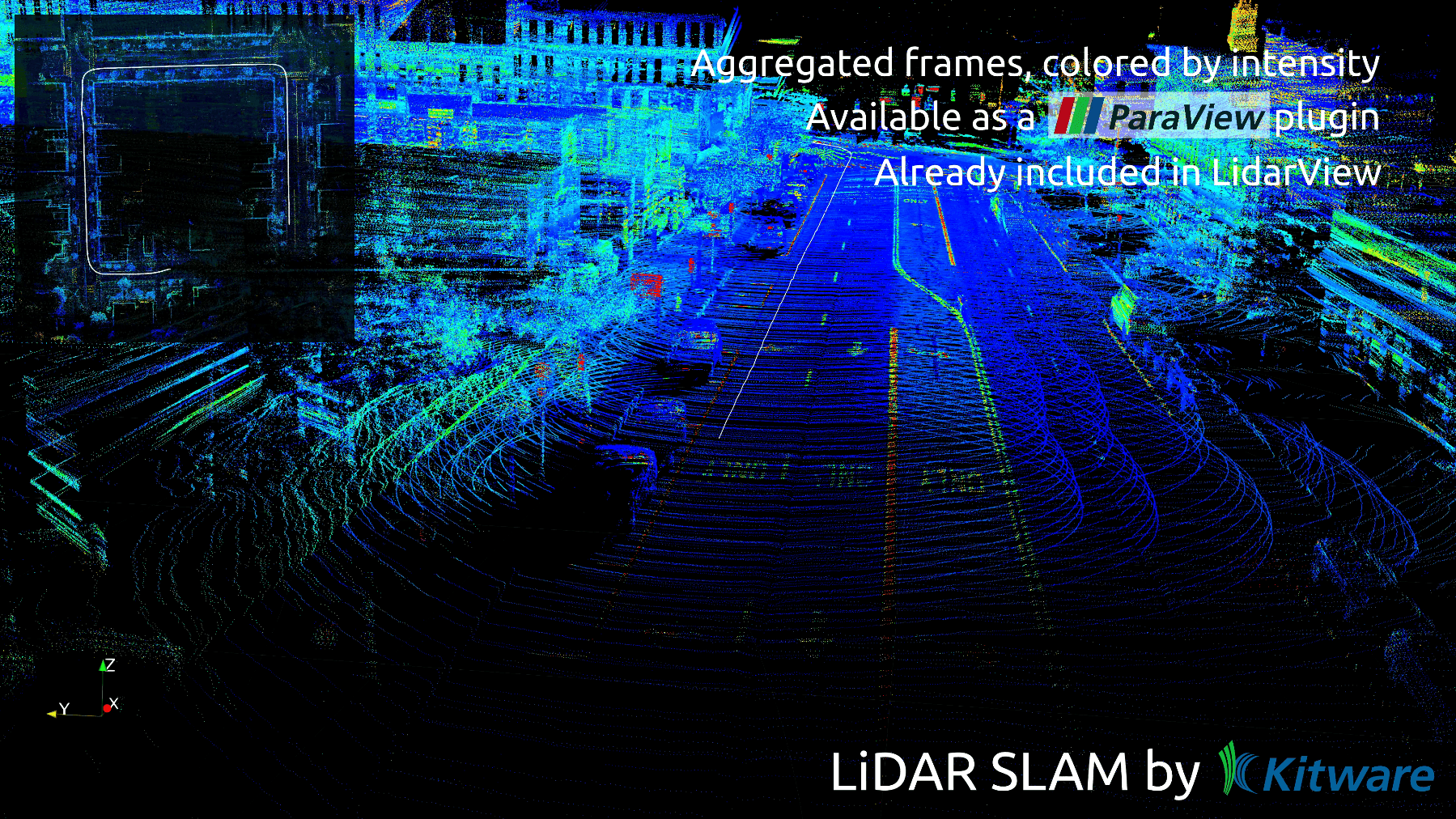

This library is wrapped into a ParaView plugin (loadable in both ParaView and LidarView) and is also available as a ROS package (ROS1 and ROS2). These wrappers let you easily supply the inputs, set the parameters, run the whole SLAM process, and retrieve and display the outputs. They can also serve as examples for integrating the library into any custom application. You can try it hassle-free with the latest LidarView package.

Special care is taken to keep up with the research world and to integrate as many state-of-the-art features as possible. Notably, the loop closure tools and the IMU handling are inspired by recent papers. The library is directly usable on data from any rotating or scan-line-based LiDAR, and support for more sensors is coming soon.

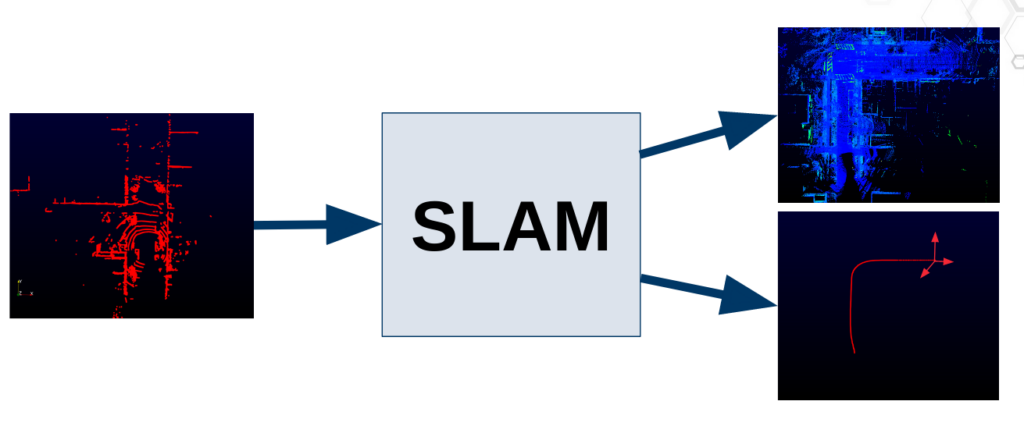

The main algorithm takes a LiDAR frame as input and outputs the pose of this frame in the environment; this process is called odometry. From the output poses, one can build a dense point cloud map of the environment by aggregating the LiDAR frames.
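
To make the aggregation step concrete, here is a minimal sketch, assuming each pose is given as a 4x4 homogeneous matrix that maps points from the LiDAR frame to the world frame. The helper names (`pose_from_xyz_yaw`, `transform_point`, `aggregate_map`) are hypothetical and not part of the library's API; for brevity the example poses only rotate about the vertical axis.

```python
import math

def pose_from_xyz_yaw(x, y, z, yaw):
    """4x4 homogeneous pose (list of rows) with a rotation about the vertical axis only."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0, x],
            [s, c, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]

def transform_point(pose, p):
    """Apply a 4x4 homogeneous pose to a 3D point given as (x, y, z)."""
    x, y, z = p
    return tuple(pose[i][0] * x + pose[i][1] * y + pose[i][2] * z + pose[i][3]
                 for i in range(3))

def aggregate_map(frames, poses):
    """Express every frame (a list of sensor-frame points) in the world frame and concatenate them."""
    world_points = []
    for points, pose in zip(frames, poses):
        world_points.extend(transform_point(pose, p) for p in points)
    return world_points
```

A real pipeline would typically also downsample the aggregated cloud (for example with a voxel grid) to keep the map size manageable.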

However, you can do a lot more than that… Let’s take a tour of the available tools!

Robotics community

Do you need to localize your robot in real time in a limited environment using its LiDAR sensor? This library is what you need.

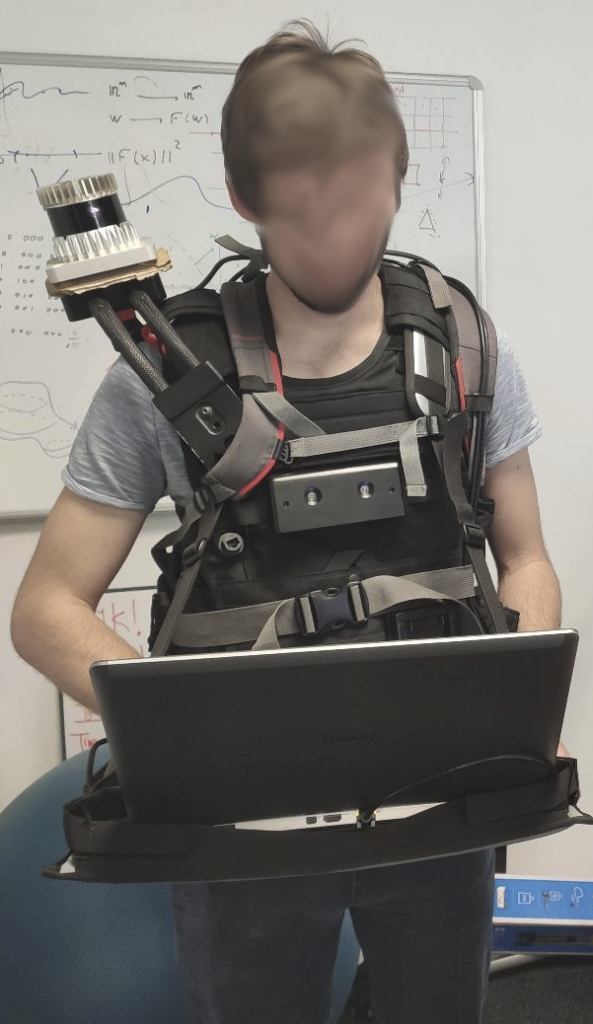

However, you may face some classic issues. With rotating LiDARs, the vertical field of view is often limited, which can cause localization losses in narrow areas. With Kitware SLAM, you can use multiple LiDARs in the same SLAM process to solve this issue, and a tool is provided to calibrate several LiDARs relative to one another through a simple procedure. You can also enforce a 2D constraint to limit the vertical drift.
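
A 2D constraint can be applied either as a hard projection or as a soft prior. The sketch below illustrates both on a hypothetical `Pose6D` type; it is not the library's implementation. The soft version assumes each constrained component (height, roll, pitch) can be treated independently, with the LiDAR estimate and the planar prior weighted by user-chosen weights, and it assumes small roll and pitch angles so no angle wrapping is needed. In a real optimizer, the prior would instead be added as a residual term alongside the LiDAR residuals.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Pose6D:
    """Hypothetical 6-DoF pose: position in meters, orientation as roll/pitch/yaw in radians."""
    x: float
    y: float
    z: float
    roll: float
    pitch: float
    yaw: float

def hard_2d_constraint(pose: Pose6D, ground_z: float = 0.0) -> Pose6D:
    """Project the pose onto planar motion: keep x, y and yaw; fix height, roll and pitch."""
    return replace(pose, z=ground_z, roll=0.0, pitch=0.0)

def soft_2d_constraint(pose: Pose6D, lidar_weight: float, prior_weight: float,
                       ground_z: float = 0.0) -> Pose6D:
    """Closed-form minimizer of lidar_weight*(v - v_lidar)^2 + prior_weight*(v - v_plane)^2
    for each constrained component v in (z, roll, pitch)."""
    k = lidar_weight / (lidar_weight + prior_weight)
    return replace(pose,
                   z=k * pose.z + (1.0 - k) * ground_z,
                   roll=k * pose.roll,     # the planar prior for roll is 0
                   pitch=k * pose.pitch)   # the planar prior for pitch is 0
```

The larger `prior_weight` is relative to `lidar_weight`, the closer the soft result gets to the hard projection.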

More generally, you can link an external sensor to constrain the degrees of freedom that the LiDAR alone cannot observe. This also addresses the instabilities that arise in open fields, corridors, or tunnels, where the surrounding geometry does not constrain motion in every direction. These sensors include IMUs, GNSS, INS, wheel odometers, RGB cameras (for images or tag detection), and more. Each problem has its matching sensor!
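
Why a corridor or tunnel is a problem can be seen from point-to-plane registration: each matched plane only constrains translation along its normal. If all visible normals lie in one plane (the walls and floor of a straight corridor), translation along the corridor axis is unobservable. The sketch below, with hypothetical helper names, finds such translation directions with a simple Gram-Schmidt rank test on the plane normals. It ignores rotation, the tolerance `tol` is an arbitrary assumption, and practical degeneracy checks usually inspect the eigenvalues of the optimization's Hessian instead.

```python
import math

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def _sub_scaled(a, b, s):
    return tuple(x - s * y for x, y in zip(a, b))

def constrained_basis(normals, tol=1e-3):
    """Gram-Schmidt: orthonormal basis of the translation directions constrained by the normals."""
    basis = []
    for n in normals:
        v = tuple(n)
        for b in basis:
            v = _sub_scaled(v, b, _dot(v, b))  # remove components already constrained
        length = math.sqrt(_dot(v, v))
        if length > tol:
            basis.append(tuple(x / length for x in v))
        if len(basis) == 3:
            break
    return basis

def unconstrained_directions(normals, tol=1e-3):
    """Translation directions that no plane normal constrains (empty if fully observable)."""
    basis = constrained_basis(normals, tol)
    axes = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
    # Complete the constrained basis with the coordinate axes; the added vectors are free.
    full = constrained_basis(basis + axes, tol)
    return full[len(basis):]
```

For a straight corridor along x (two walls with normals along y, a floor with normal along z), the function returns a single free direction along x; adding an end wall with a normal along x makes the translation fully observable, which is exactly what an extra sensor such as a wheel odometer compensates for.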

Note that most of the calibration is handled by the core SLAM, so you can provide raw data without worrying about reference frames or time synchronization.
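
As an illustration of the time synchronization problem (not necessarily the library's internal method), a constant time offset between two sensors can be estimated by cross-correlating a quantity both observe, for instance the yaw rate measured by an IMU and the one derived from LiDAR odometry. This sketch assumes both signals are resampled to the same rate and are roughly zero-mean (subtract their means otherwise); `estimate_lag` is a hypothetical name, and the offset in seconds is the returned lag multiplied by the sample period.

```python
def estimate_lag(reference, delayed, max_lag):
    """Integer shift (in samples) maximizing the mean product of the two signals.

    A positive result means `delayed` lags `reference`:
    delayed[i + lag] best matches reference[i].
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        total, count = 0.0, 0
        for i, r in enumerate(reference):
            j = i + lag
            if 0 <= j < len(delayed):  # only use the overlapping samples
                total += r * delayed[j]
                count += 1
        if count and total / count > best_score:
            best_lag, best_score = lag, total / count
    return best_lag
```

Averaging over the overlapping samples, rather than summing, keeps large shifts with few overlapping samples from being unfairly penalized or favored.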

In some cases, you may need to load an accurate existing map and keep it fixed in order to localize precisely within it. This is particularly useful for indoor robots, and the SLAM library supports it through different modes. For example, you can allow new static objects to be added to the map, or let some of them be forgotten.

Another useful feature is confidence estimation: different metrics have been implemented to supervise your robot and check that it did not get into trouble! You can also enable failure detection, which triggers a recovery mode in which the robot can be relocalized and resume its course.
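
One common kind of confidence metric (shown here as an assumption, not necessarily one of the library's metrics) is an overlap ratio: the fraction of points of the registered frame that have a map point within a given radius. A sudden drop suggests the frame no longer matches the map. In the sketch below, the function names, the 0.3 m radius, and the 0.5 failure threshold are all hypothetical choices.

```python
import math
from collections import defaultdict

def _cell(p, size):
    """Integer grid cell containing point p for a cubic grid of side `size`."""
    return tuple(int(math.floor(c / size)) for c in p)

def overlap_ratio(frame_points, map_points, radius=0.3):
    """Fraction of frame points (already in the map frame) with a map point within `radius`.

    Map points are hashed into cells of side `radius`, so any neighbor within
    `radius` lies in one of the 27 cells around the query point.
    """
    if not frame_points:
        return 0.0
    grid = defaultdict(list)
    for q in map_points:
        grid[_cell(q, radius)].append(q)
    r2 = radius * radius
    matched = 0
    for p in frame_points:
        cx, cy, cz = _cell(p, radius)
        candidates = (
            q
            for dx in (-1, 0, 1) for dy in (-1, 0, 1) for dz in (-1, 0, 1)
            for q in grid.get((cx + dx, cy + dy, cz + dz), ())
        )
        if any(sum((a - b) ** 2 for a, b in zip(p, q)) <= r2 for q in candidates):
            matched += 1
    return matched / len(frame_points)

def localization_suspect(ratio, threshold=0.5):
    """Flag a frame whose overlap with the map falls below an arbitrary threshold."""
    return ratio < threshold
```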

Mapping community

Do you need to map a large environment with a variety of scenes using a LiDAR sensor? We have all you need here. When mapping an environment, you may face drift. A LiDAR only ever sees its immediate surroundings, while the environment can be very large, so each frame can only be localized against nearby parts of the map. The small error introduced at each step therefore accumulates over the complete map and can distort it. Don’t worry, we provide the tools to overcome this problem.
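
A toy simulation makes this accumulation visible. Assume, hypothetically, that the vehicle drives 1 m straight ahead between frames and that each frame-to-frame estimate carries a constant heading error of 0.001 rad (about 0.06°). Because each heading error rotates every following step, the end-point error grows much faster than the per-frame error: after 100 m it is already about 5 m, roughly four times the error after 50 m. Real drift is noisier than this constant bias, but it accumulates in the same way.

```python
import math

def drift_after(n_frames, step_m=1.0, yaw_error_rad=0.001):
    """End-point position error when each frame-to-frame estimate has a small heading error.

    The true trajectory is a straight line along x; the estimated one composes
    n_frames relative motions whose heading is off by `yaw_error_rad` each time.
    """
    x = y = heading = 0.0
    for _ in range(n_frames):
        heading += yaw_error_rad          # the per-frame error accumulates...
        x += step_m * math.cos(heading)   # ...and bends every following step
        y += step_m * math.sin(heading)
    return math.hypot(x - n_frames * step_m, y)
```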

You can enable the loop closure process to keep your SLAM consistent over long trajectories when returning to a previously visited place. Another interesting tool is the trajectory manager: at any time, you can load into the SLAM a trajectory obtained by another process (visual SLAM, INS, etc.) and use it to replace or correct the current map through a pose graph strategy.
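
The pose graph idea can be illustrated with a deliberately simplified, translation-only graph in one dimension. Ignoring rotations makes the problem linear; real pose graphs optimize full 6-DoF poses with nonlinear solvers, and this is not the library's implementation. Nodes are positions, odometry edges store measured displacements, and a loop closure edge states that the vehicle is back at its start; weighted least squares then spreads the accumulated error over the whole trajectory. Function names are hypothetical. Because node 0 is anchored at zero, an edge from node 0 acts as an absolute constraint, which is one way poses from an external trajectory could be added.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def optimize_1d(n_nodes, edges):
    """edges: (i, j, measured p_j - p_i, weight). Node 0 is anchored at 0.

    Builds the normal equations J^T W J p = J^T W z of the weighted least squares.
    """
    size = n_nodes - 1  # unknowns p_1 .. p_{n-1}
    H = [[0.0] * size for _ in range(size)]
    g = [0.0] * size
    for i, j, meas, w in edges:
        # residual r = p_j - p_i - meas: Jacobian is -1 at node i, +1 at node j
        for a, sa in ((i, -1.0), (j, 1.0)):
            if a == 0:
                continue
            g[a - 1] += w * sa * meas
            for c, sc in ((i, -1.0), (j, 1.0)):
                if c != 0:
                    H[a - 1][c - 1] += w * sa * sc
    return [0.0] + solve_linear(H, g)
```

For example, with odometry edges `(0, 1, 1.0, 1.0)`, `(1, 2, 1.0, 1.0)`, `(2, 3, -0.9, 1.0)`, `(3, 4, -0.9, 1.0)`, the vehicle ends 0.2 away from its start; adding the loop closure edge `(0, 4, 0.0, 100.0)` brings the last node back to nearly 0 and moves the farthest node from 2.0 to about 1.9. A planar trajectory could be handled by solving x and y independently in the same way.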

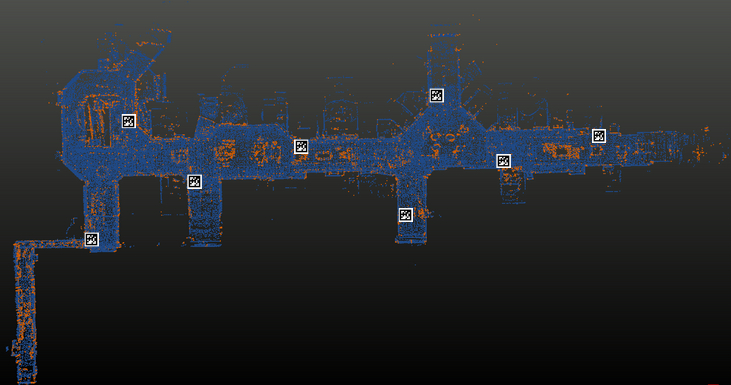

If you have access to a camera, localized tags such as QR codes or AprilTags can also help correct the drift.

LiDAR frames acquired in motion may appear blurry or skewed, because the sensor moves during each sweep. Don’t worry: the Kitware SLAM library provides several interpolation modes to compensate for this motion, with or without external sensors, and obtain the most accurate map. You can also remove moving objects from the current map to get a clean, static map.

If you are mapping with a GNSS or INS system you trust but are afraid of losing its signal, you can link it to the SLAM process so that SLAM takes over the mapping when needed. You don’t have to worry about signal recovery: the SLAM handles the transitions so as to benefit from all sensors.
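
The simplest form of the motion compensation mentioned above is linear interpolation between the sensor poses at the start and end of a sweep. The following planar sketch assumes a constant velocity during the sweep, a per-point relative timestamp in [0, 1], and poses given as (x, y, yaw); it re-expresses every point in the end-of-sweep frame. The function names are hypothetical, and the library's own interpolation modes may work differently (for example in 3D, or using IMU data).

```python
import math

def interpolate_pose(start, end, t):
    """Linear interpolation of a planar pose (x, y, yaw); yaw follows the shortest angular path."""
    x0, y0, a0 = start
    x1, y1, a1 = end
    da = math.atan2(math.sin(a1 - a0), math.cos(a1 - a0))
    return (x0 + t * (x1 - x0), y0 + t * (y1 - y0), a0 + t * da)

def to_world(pose, p):
    """Sensor-frame point -> world frame."""
    x, y, a = pose
    c, s = math.cos(a), math.sin(a)
    return (x + c * p[0] - s * p[1], y + s * p[0] + c * p[1])

def to_local(pose, q):
    """World-frame point -> sensor frame (inverse of to_world)."""
    x, y, a = pose
    c, s = math.cos(a), math.sin(a)
    dx, dy = q[0] - x, q[1] - y
    return (c * dx + s * dy, -s * dx + c * dy)

def deskew(points, stamps, start_pose, end_pose):
    """Re-express each point, captured at relative time t in [0, 1], in the end-of-sweep frame."""
    out = []
    for p, t in zip(points, stamps):
        pose_t = interpolate_pose(start_pose, end_pose, t)  # sensor pose when p was captured
        out.append(to_local(end_pose, to_world(pose_t, p)))
    return out
```

For instance, if the sensor moves 1 m forward during the sweep, a static wall 5 m ahead is seen at 5.0 m, 4.5 m, and 4.0 m at t = 0, 0.5, and 1; after de-skewing, all three points land at 4.0 m in the end-of-sweep frame.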

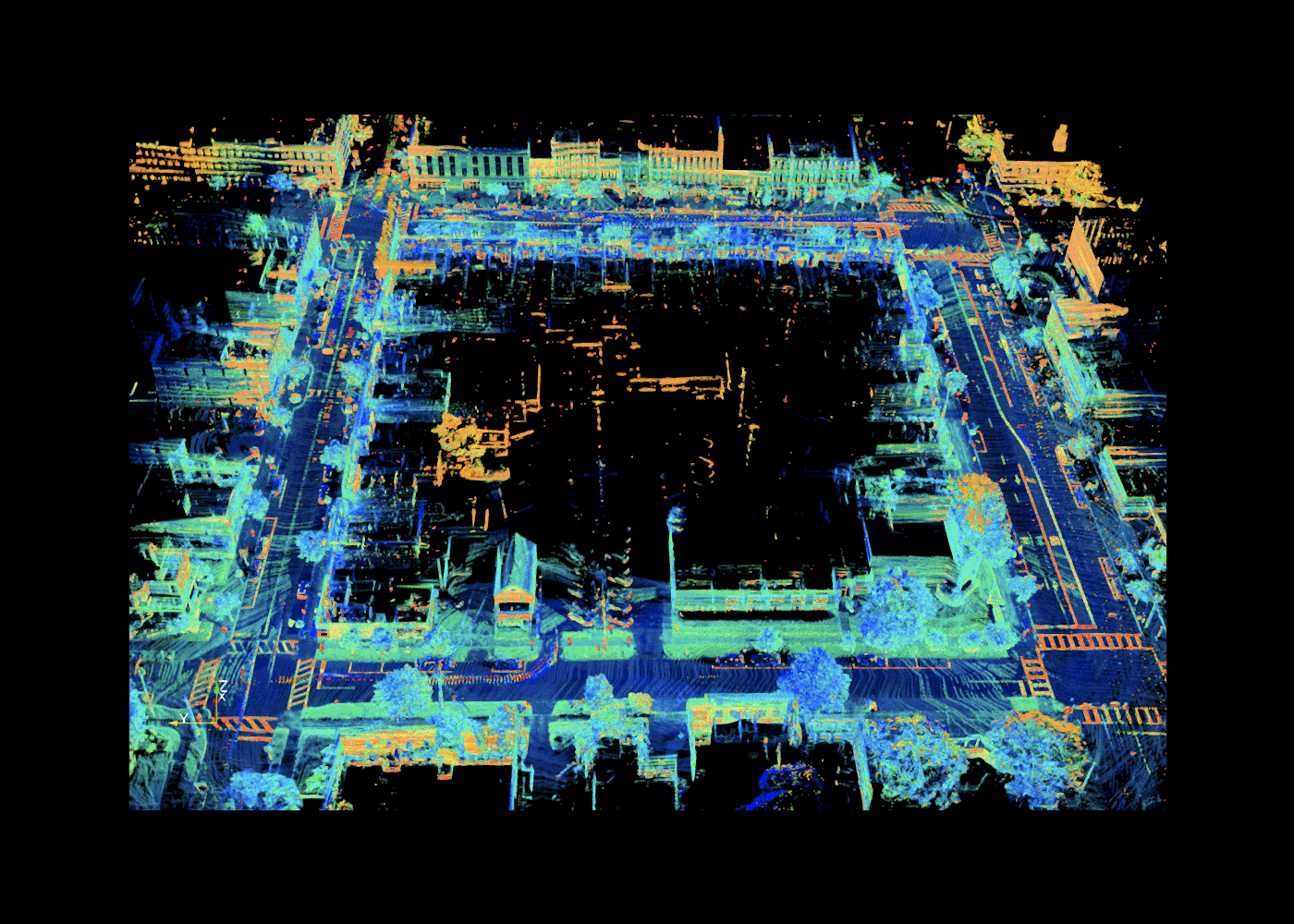

Check out how you can combine a map created by SLAM from terrestrial LiDAR data with aerial LiDAR data provided by Lyon Métropole and captured in April 2018:

The following table summarizes the issues you may face when running SLAM with a 3D LiDAR, and the corresponding solutions available in Kitware’s library:

| Issue | Library solution |
|---|---|
| The point cloud map is blurry | Add an IMU and enable its use to de-skew the frames; try another interpolation mode |
| Localization is lost or the pose is hovering | Close a loop when it recovers; add another sensor and/or landmarks (second LiDAR, IMU, wheel odometer, RGB camera) and enable its use in the optimization; enable failure detection to trigger recovery mode |
| Some traces appear on the map | Add a GNSS and calibrate it with a short SLAM trajectory |
| Some traces appear in the map | Remove dynamic objects |
| I have a trajectory I want to integrate into the SLAM | Upload the trajectory in the required format and choose whether to replace the SLAM trajectory or to update it with new constraints |
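
Calibrating a GNSS with a short SLAM trajectory amounts, in its simplest form, to estimating the rigid transform between the SLAM frame and the GNSS frame. A minimal planar sketch of this least-squares alignment (a 2D version of the Kabsch algorithm) follows. It assumes time-matched positions, GNSS positions already converted to a local metric frame (for example ENU), and it ignores the antenna lever arm; the library's actual calibration may differ, and `align_2d` is a hypothetical name.

```python
import math

def align_2d(slam_xy, gnss_xy):
    """Least-squares rigid transform (yaw, tx, ty) mapping SLAM positions onto GNSS positions."""
    n = len(slam_xy)
    sx = sum(p[0] for p in slam_xy) / n
    sy = sum(p[1] for p in slam_xy) / n
    gx = sum(p[0] for p in gnss_xy) / n
    gy = sum(p[1] for p in gnss_xy) / n
    dot_sum = cross_sum = 0.0
    for (ax, ay), (bx, by) in zip(slam_xy, gnss_xy):
        ax, ay, bx, by = ax - sx, ay - sy, bx - gx, by - gy  # center both point sets
        dot_sum += ax * bx + ay * by
        cross_sum += ax * by - ay * bx
    # The optimal rotation maximizes sum(b' . R a') = cos(yaw)*dot_sum + sin(yaw)*cross_sum
    yaw = math.atan2(cross_sum, dot_sum)
    c, s = math.cos(yaw), math.sin(yaw)
    tx = gx - (c * sx - s * sy)  # translation maps the rotated SLAM centroid onto the GNSS one
    ty = gy - (s * sx + c * sy)
    return yaw, tx, ty
```

The trajectory used for calibration should not be a straight line only; some turns make the rotation estimate much better conditioned against GNSS noise.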

The SLAM library is continually updated, and we have plenty of ideas for further developments, including multi-agent SLAM with a shared map, a front-end/back-end split, making more features available in real time, bundle adjustment, semantic removal of moving objects, support for newly released LiDARs, and more.

Many tools are already available, but your ideal scenario may not be implemented yet, so don’t hesitate to collaborate with us and enrich the wrappers with your ideas! Reach out to us if you want to discuss SLAM or computer vision related requests.