Nek5000 support in VTK and ParaView

Jean M. Favre, Swiss National Supercomputing Centre, Dan Lipsa, Kitware

Nek5000 [4] is a high-order computational fluid dynamics code based on the spectral element method, which has been in development for more than 35 years. It features state-of-the-art, scalable algorithms that are fast and efficient on platforms ranging from laptops to the world’s fastest computers. Applications span a wide range of fields, including fluid flow, thermal convection, combustion and magnetohydrodynamics. The development of Nek5000 continues today with nekRS targeting both classical processors and accelerators like GPUs [P. Fischer et al. NekRS, a GPU-accelerated spectral element Navier–Stokes solver, Par. Comput., 114, 2022].

Up until version 5.11 of ParaView, Nek5000/nekRS data could be imported, in serial mode, via the VisIt bridge plugin, or via a native parallel plugin developed by the first author, based on an earlier code provided by Joe Insley of Argonne National Laboratory. With the upcoming new release v5.12, the reader has been integrated as a native VTK IO class to support parallel reading and much larger simulation meshes.

File format details

A Nek5000/nekRS dataset consists of an ASCII header file describing a set of filenames corresponding to each solution in time, and a set of binary files, one for each timestep. The solution files store the coefficients of the high-order polynomials approximating the solution within each spectral element. Distributing the mesh across multiple MPI tasks is done on an element-based linear ordering. The spectral elements are stored with duplicate node (vertex) data on their boundaries. Data variables are stored at the vertices of the VTK cells.

The binary files encode data as arrays of structures, such that at each node of a spectral element, the coordinates x, y, z of a single vertex are followed by an optional set of floating point values representing the solution fields (velocity components (Vx, Vy, Vz), pressure, temperature, and other scalars). Thus, to obtain a complete array of a given field, strided memory access is obligatory. The binary files contain a packed list of all fields for all vertices, and to attach these fields to a geometric model, we construct a polygonal set of quadrilaterals in 2D, or a set of hexahedra in 3D.

Fig. 1 Discretization of a 3D domain into conforming hexahedral spectral elements is shown on the left image. A 2D spectral element discretized into quads is shown on the right.

Resource consumption

For a given mesh of Nelts spectral elements of order N, a mesh of Nelts*(N-1)^2 2D cells or Nelts*(N-1)^3 3D cells is created. Reading a basic set of one scalar field, one vector field, and the mesh would result in the memory footprint summarized in the following table, assuming that dependent variables are stored as float32, and connectivity lists of the unstructured mesh use 64-bit integer indices. In VTK, unstructured grids are made of arbitrary combinations of any possible cell type. Thus, two lists of integers are required: a list of cell types (VTK_QUAD, or VT_HEXAHEDRON) as int32 numbers, and a list of connectivity indices ( five indices per cell in 2D, or nine indices per cell in 3D), as int64 numbers.

| Nelts spectral elements of order N | 2D case | 3D case |

| Vertex coordinates | Nelts*N^2 * 3 * 4 bytes | Nelts*N^3 * 3 * 4 bytes |

| mesh connectivity | Nelts*(N-1)^2 * (4 + 5*8) bytes | Nelts*(N-1)^3 * (4 + 9*8) bytes |

| Scalar field | Nelts*N^2 * 4 bytes | Nelts*N^3 * 4 bytes |

| Vector field | Nelts*N^2 * 3 * 4 bytes | Nelts*N^3 * 3 * 4 bytes |

Hardware testbed

We tested the reader on a small set of compute nodes hosted at the Swiss National Supercomputing Centre (CSCS). The nodes are composed of an AMD EPYC 7713 64-Core Processor with 2 threads per core and 512 GB of RAM, and four NVIDIA PG506-232 (Ampere) GPUs with 96 GB of RAM.

Multi-threaded acceleration: the case of merging coincident points

As already mentioned, neighboring spectral elements share nodes on their boundaries. Internal to the reader, we use an extremely fast, SMP-accelerated filter, the vtkStaticCleanUnstructuredGrid class [5], to merge duplicate points. This is an option the user should toggle in the reader’s GUI (toggle the button “Merge Points to Clean grid”. Modern compute nodes such as the AMD EPYC nodes with 128 maximum number of threads per node are a blessing for this cleanup operation.

Fig 2: The reader’s GUI enables the selection of which Point Arrays to read, and the binary switching of two options, to clean up duplicate points, and generate a cell-based array encoding the original spectral element Id.

Ghost-cells: the case of External Surface

Our largest test case is a dataset made of 4,385,132 spectral elements of order 10, resulting in the generation of 3,196,761,228 hexahedra. Parallel reading is done with 32 MPI tasks (each MPI task handling roughly 100 million hexahedra). Reading of one scalar and one vector field requires about 20 seconds, with 8 MPI tasks per node, 4 nodes, and 16 threads per task. However, no ghost-cells are available in the original data files read. We decided to postpone their generation after reading the data in memory, delegating this task to the optimized vtkGhostCellsGenerator class [6]. Computing the external surface of a model is the perfect example for such use. Without ghost-cells, the outside surface results in a polygonal mesh of 2.1 billion triangles. The generation of ghost cells takes roughly 43 seconds, but enables us to extract the exact external surface, constructed of only 12,992,863 triangles.

Fig 3: Cut-off view of the head of the internal combustion engine currently under study by our colleague Christos Frouzakis at the Laboratory of Combustion and Acoustics for Power & Propulsion Systems of ETH Zurich in the frame of the Center of Excellence in Combustion (https://coec-project.eu/).

Application: Volume rendering

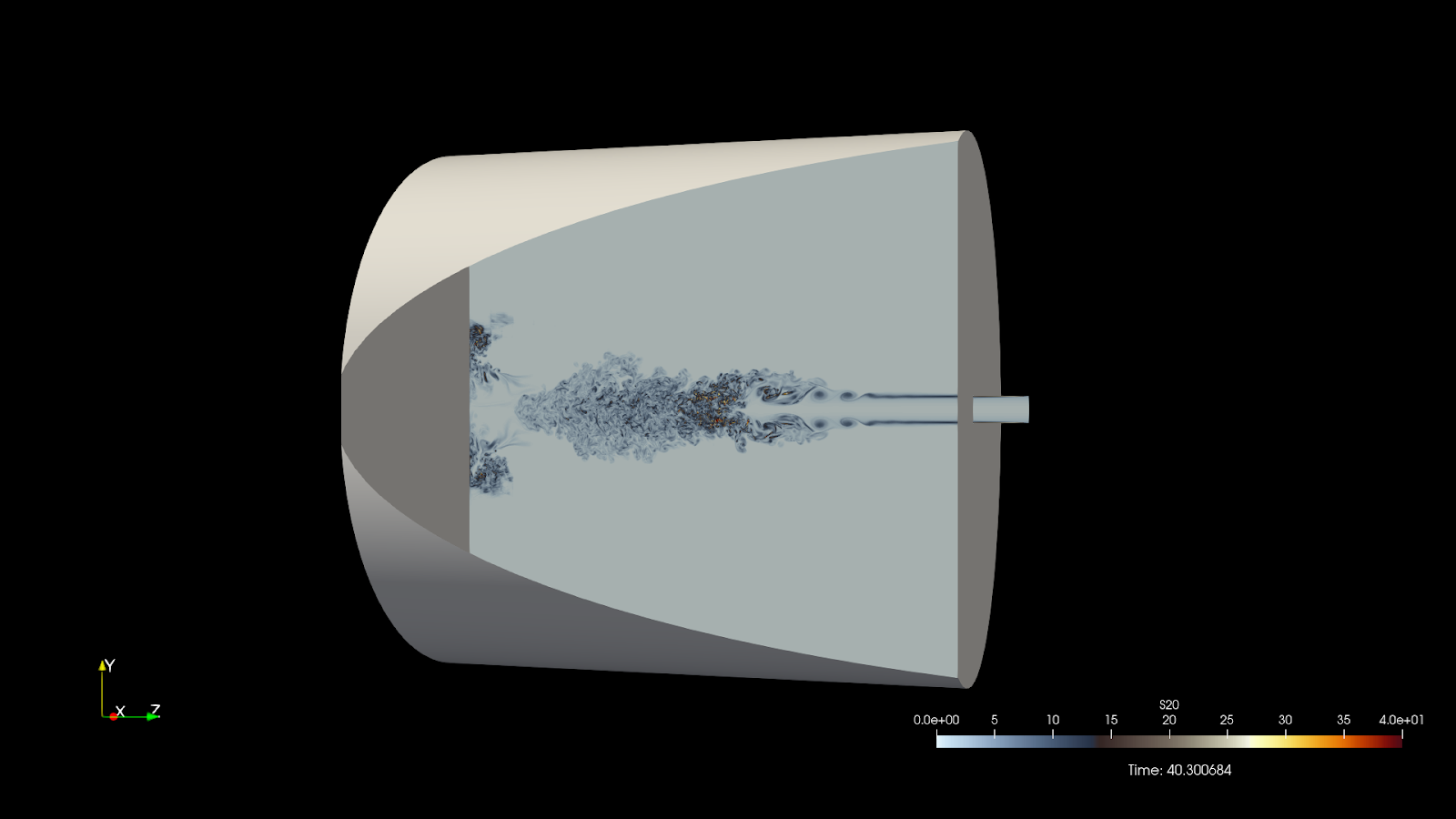

In many fluid dynamics and combustion simulations, the visualization of the solution structures in 3D is of great importance for the scientists. Given the unstructured nature of the meshes created by the Nek5000 reader, one technique supported on NVIDIA GPUs is Volume Rendering with the NVIDIA IndeX library, a highly efficient method supported by ParaView [2]. We produced an animation of the flow in a turbulent jet ignition setup of a lean methane-air mixture with the plugin version of the reader available for earlier versions of ParaView (movie file [3]).

Fig 4: Cut-off view of an ignition chamber studied by our collaborators [3].

Acknowledgments

Christos Frouzakis [1], senior scientist in the Laboratory of Combustion and Acoustics for Power & Propulsion Systems (CAPS) of ETH Zurich, has provided all the datasets and consulting to understand the details of the Nek5000 file format.

References

[1] https://caps.ethz.ch/the-laboratory/people/senior-scientific-collaborators/Christos.html

[2] https://www.kitware.com/kitware-integrates-nvidia-index-enables-interactive-volume-visualization-of-large-datasets/

[3] https://www.youtube.com/watch?v=JL_IbfZvNrE

[4] Nek5000: https://nek5000.mcs.anl.gov/

[5] https://vtk.org/doc/nightly/html/classvtkStaticCleanUnstructuredGrid.html

[6] https://vtk.org/doc/nightly/html/classvtkGhostCellsGenerator.html