Responsible and Explainable AI

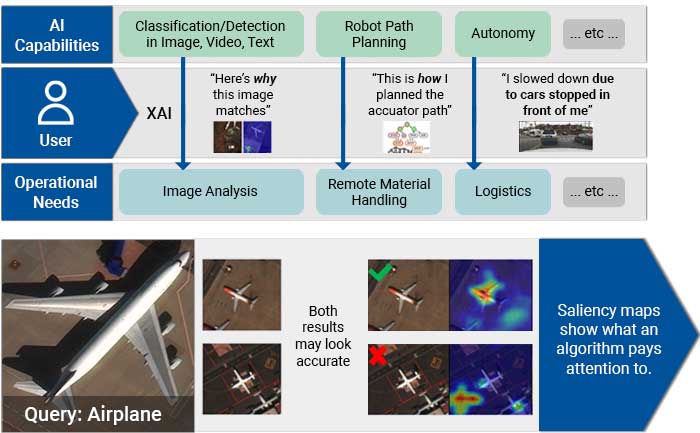

Integrating AI through human-machine teaming can greatly improve capabilities and competence, as long as the team has a solid foundation of trust. To trust your AI partner, you must understand how the technology makes decisions and feel confident in those decisions. Kitware has developed powerful tools, such as the Explainable AI Toolkit (XAITK), to explore, quantify, and monitor the behavior of deep learning systems. Our team is also making deep neural networks more robust to previously unknown conditions by leveraging AI test and evaluation (T&E) tools such as the Natural Robustness Toolkit (NRTK). In addition, our team is stepping outside of classic AI systems to address domain-independent novelty identification, characterization, and adaptation, so that systems can recognize when unknowns are introduced. We also recognize the need to understand the ethical concerns, impacts, and risks of using AI. That’s why Kitware is developing methods to understand, formulate, and test ethical reasoning algorithms for semi-autonomous applications. Kitware is proud to be part of the AISIC, a U.S. Department of Commerce consortium dedicated to advancing the development and deployment of safe, trustworthy AI.

Need expert help with your project? Speak directly with one of our developers.