Supercharging VolView Insight with MedGemma: Advancing Multimodal Clinical AI

We previously introduced VolView Insight, a powerful extension of the VolView medical visualization platform designed to integrate imaging studies with real-world clinical data. Built for interoperability and flexibility, VolView Insight allows clinicians and researchers to view medical images, run multimodal AI pipelines, and access relevant patient records, all within a modern, intuitive web interface.

In this follow-up post, we highlight VolView Insight's extensibility by showing how we incorporated MedGemma, a cutting-edge multimodal large language model. The integration enables AI-powered clinical report generation, summarization, and visual question answering that draw on both clinical images and textual context from electronic health records. It also shows VolView Insight's versatility in supporting customized pipelines, data formats, and compute environments, providing an end-to-end platform for advanced clinical workflows.

What is MedGemma?

MedGemma is a collection of open generative models developed by Google DeepMind, built on Gemma 3, and designed to accelerate AI innovation in healthcare and life sciences. Depending on the variant, the 4B- and 27B-parameter models interpret medical images, text, or both, and generate natural-language descriptions of findings with clinical reasoning. The recently released multimodal 27B variant also supports longitudinal electronic health record interpretation, enabling interaction with the FHIR-based resources used by modern EHR systems.

These models can be customized and fine-tuned to achieve state-of-the-art performance on tasks such as clinical report generation or medical question answering. Furthermore, MedSigLIP, the lightweight image and text encoder that powers MedGemma, can be adapted independently for image and text classification tasks.

VolView Insight Architecture

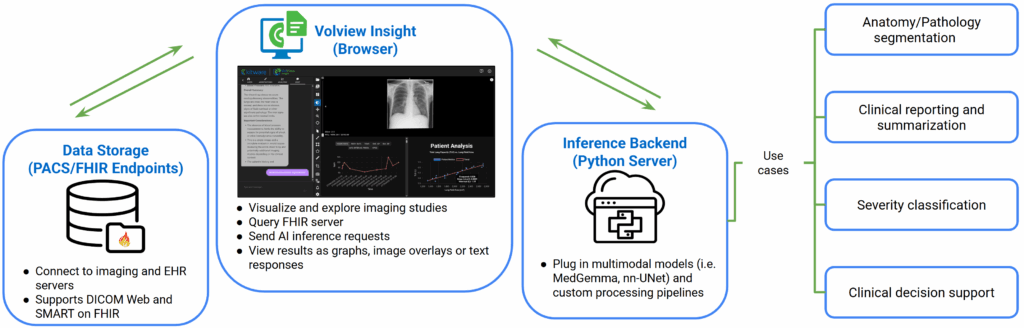

VolView Insight was built for extension. While the base VolView handles high-performance, browser-based interaction with DICOM and NIfTI images, VolView Insight adds a flexible bridge to clinical EHR systems and AI inference pipelines. Users send selected imaging studies to a Python-based inference backend, which can run custom models on any machine with sufficient compute (e.g., a GPU instance). The results – whether visual overlays, structured outputs, or natural-language summaries – are then rendered in the web app. Because the frontend and backend are decoupled, it is easy to plug in new models and pre-processing pipelines, adapt data formats, or scale compute, so institutions can tailor the system to specific imaging protocols, documentation practices, or innovations from their R&D teams. The system consists of three key components:

- VolView Insight (Frontend): A browser-based viewer for interacting with patient data, images, and clinical notes. No installation or local compute required.

- Inference Backend (Server-side): A Python service that can deploy any model, whether MedGemma, a segmentation model like nnU-Net, or your own research prototype, with minimal integration effort.

- PACS & FHIR Endpoints: Connections to standard FHIR endpoints (Epic, Cerner, etc.) and PACS endpoints (Orthanc, GE HealthCare Centricity, EHR-provided, etc.) to fetch imaging studies, patient metadata, or clinical context. Suitable for deployment in both research settings and real-world hospital networks.
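To make the FHIR side concrete, here is a minimal sketch of the search request that could fetch a patient's recent vital signs, the clinical context used in the demonstration described in the next section. It is written in Python to match the backend, although VolView Insight performs this retrieval in its JavaScript frontend. The server URL, patient ID, and `latest_vitals_query` name are hypothetical. `patient`, `category=vital-signs`, `_sort`, and `_count` are standard FHIR search parameters for `Observation`.

```python
from urllib.parse import urlencode


def latest_vitals_query(base_url: str, patient_id: str, count: int = 20) -> str:
    """Build a FHIR search URL for a patient's most recent vital-sign Observations."""
    params = {
        "patient": patient_id,
        "category": "vital-signs",  # standard FHIR observation category code
        "_sort": "-date",           # newest observations first
        "_count": str(count),       # page size
    }
    return f"{base_url.rstrip('/')}/Observation?{urlencode(params)}"


# Hypothetical server and patient ID, for illustration only.
print(latest_vitals_query("https://fhir.example.org/r4", "example-patient"))
```

Sorting by `-date` and capping the page size keeps the response focused on the newest readings. A client would then keep the first Observation it sees for each vital sign.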

A Physician Companion in Action

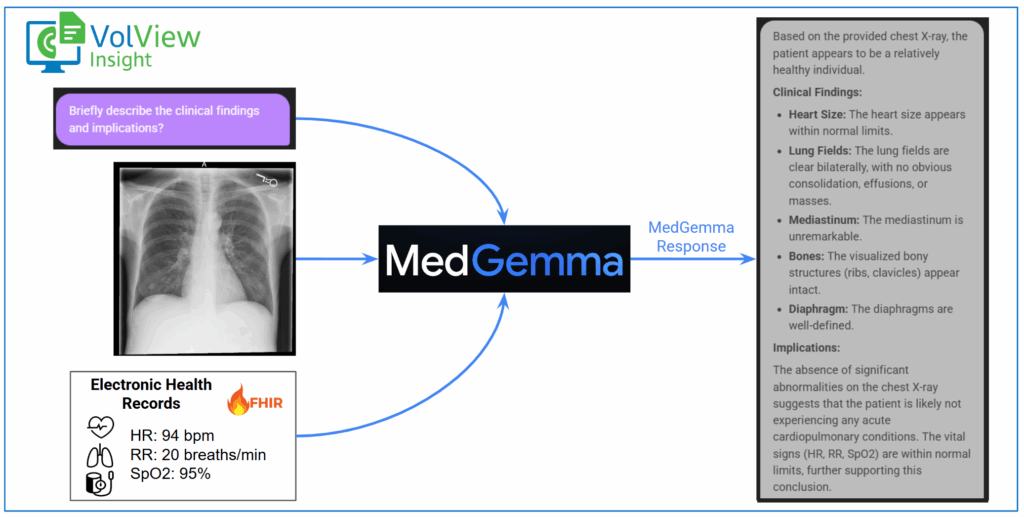

We added a chat interface to the VolView Insight frontend that lets users interact with MedGemma in natural language. After selecting a patient and imaging study, users can ask questions such as “Please describe the findings for this patient’s X-ray” or “Given the patient’s report, do you think this image shows a pleural effusion?” These queries are sent to the backend server for inference. As language models continue to evolve, AI chat assistants are becoming important tools, whether to support clinical decision-making or to assist in research workflows. VolView Insight enables this integration within the secure network environment of a clinic or research group.
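On the backend, each chat query has to be packaged in the format the model expects. The sketch below assumes the role/content message structure shown in the Hugging Face examples for `google/medgemma-4b-it`. The `build_messages` helper, its default system prompt, and the choice to attach the image only to the first user turn are illustrative assumptions, not VolView Insight's actual code.

```python
from typing import Any, Dict, List, Optional, Tuple

Message = Dict[str, Any]


def build_messages(
    question: str,
    image: Any,
    history: Optional[List[Tuple[str, str]]] = None,
    system_prompt: str = "You are an expert radiologist.",
) -> List[Message]:
    """Assemble a chat in the role/content format used in MedGemma's Hugging Face examples.

    `image` would typically be a PIL image decoded from the selected study. It is
    attached to the first user turn only, so follow-up questions reuse it.
    """
    messages: List[Message] = [
        {"role": "system", "content": [{"type": "text", "text": system_prompt}]}
    ]
    turns = list(history or []) + [("user", question)]
    image_attached = False
    for role, text in turns:
        content: List[Dict[str, Any]] = [{"type": "text", "text": text}]
        if role == "user" and not image_attached:
            content.append({"type": "image", "image": image})
            image_attached = True
        messages.append({"role": role, "content": content})
    return messages


# With transformers installed, the messages could be passed to a pipeline, e.g.:
#   pipe = pipeline("image-text-to-text", model="google/medgemma-4b-it")
#   reply = pipe(text=messages, max_new_tokens=200)[0]["generated_text"][-1]["content"]
```

Passing the earlier turns as `history` lets a follow-up question such as "And the heart size?" be answered in the context of the same image and prior answers.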

In our demonstration video, we include the patient’s most recent vital signs (heart rate, respiratory rate, SpO2, and blood pressure), which the frontend retrieves from a FHIR resource server. Because this retrieval is written in JavaScript in the frontend, it is straightforward to fetch other FHIR resources as needed for a particular analysis. The FHIR-derived clinical data, imaging data, and user query are then securely transmitted to the Python backend. There, the DICOM image is converted into a format suitable for model input, and the structured clinical data is turned into a natural-language prompt that MedGemma can understand. The backend then runs inference using model weights that can be downloaded from Hugging Face, loaded from a local path, or pulled from any institution-specific model repository. Finally, VolView Insight returns the model’s response directly to the frontend chat interface.
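The step that turns structured clinical data into a prompt might look like the minimal sketch below. It assumes FHIR R4 Observation resources that carry a `valueQuantity`, or, for blood pressure, systolic and diastolic `component` entries, identified by standard LOINC codes. The function names and the prompt wording are assumptions, because this post does not specify the exact format VolView Insight sends to MedGemma.

```python
from typing import Any, Dict, Iterable

# LOINC code -> label for the vital signs shown in the demo.
LOINC_LABELS = {
    "8867-4": "Heart rate",
    "9279-1": "Respiratory rate",
    "59408-5": "SpO2",
    "85354-9": "Blood pressure",
}
SYSTOLIC, DIASTOLIC = "8480-6", "8462-4"  # blood-pressure component codes


def _first_code(concept: Dict[str, Any]) -> str:
    return concept["coding"][0]["code"]


def observation_to_text(obs: Dict[str, Any]) -> str:
    """Render one Observation as e.g. 'Heart rate: 72 /min (recorded 2024-05-01T08:00:00Z)'."""
    code = _first_code(obs["code"])
    label = LOINC_LABELS.get(code, obs["code"].get("text", code))
    if "valueQuantity" in obs:
        q = obs["valueQuantity"]
        value = f"{q['value']:g} {q.get('unit', '')}".strip()
    else:
        # Blood-pressure panels report systolic/diastolic as components.
        parts = {_first_code(c["code"]): c["valueQuantity"] for c in obs.get("component", [])}
        sys_q, dia_q = parts[SYSTOLIC], parts[DIASTOLIC]
        value = f"{sys_q['value']:g}/{dia_q['value']:g} {sys_q.get('unit', '')}".strip()
    when = obs.get("effectiveDateTime", "unknown time")
    return f"{label}: {value} (recorded {when})"


def vitals_prompt(observations: Iterable[Dict[str, Any]], question: str) -> str:
    """Prepend the vitals as bullet lines to the user's question."""
    lines = [f"- {observation_to_text(o)}" for o in observations]
    return "Most recent vital signs:\n" + "\n".join(lines) + f"\n\nQuestion: {question}"
```

Observations that have neither a `valueQuantity` nor blood-pressure components raise a `KeyError` here. A production version would need to handle other value types explicitly.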

Beyond MedGemma: Building Your Own AI/ML Pipelines

MedGemma is just one example of how AI can be integrated into VolView Insight. Thanks to its modular design, VolView Insight can be easily adapted to run your own model, whether for segmentation, severity classification, or multimodal reasoning. These models can run entirely on your own infrastructure, giving you full control over code, data, and compute.

- For Clinicians: Access AI-driven insights from imaging and patient history directly within the viewer – no new interfaces required.

- For Researchers: Easily integrate, test, and iterate on models, conduct ablation studies, and prototype benchmarks with real or custom multimodal datasets.
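Here is a sketch of what adapting the backend to your own model might involve. It assumes the backend accepts any object with a `name` and a `run(image, context)` method. `BackendModel`, `ClassifierAdapter`, and `serve` are hypothetical names, not VolView Insight's actual plugin API. The adapter wraps an arbitrary callable that returns class probabilities, so a PyTorch, ONNX, or scikit-learn classifier could sit behind it.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Optional, Protocol, Sequence


class BackendModel(Protocol):
    """Hypothetical contract a custom model meets to be served by the backend."""

    name: str

    def run(self, image: Any, context: Dict[str, Any]) -> Dict[str, Any]: ...


@dataclass
class ClassifierAdapter:
    """Wraps any callable that maps an image to per-class probabilities."""

    name: str
    classes: Sequence[str]
    predict_proba: Callable[[Any], Sequence[float]]

    def run(self, image: Any, context: Dict[str, Any]) -> Dict[str, Any]:
        probs = list(self.predict_proba(image))
        if len(probs) != len(self.classes):
            raise ValueError("model output does not match the class list")
        best = max(range(len(probs)), key=probs.__getitem__)  # argmax
        return {
            "kind": "structured",
            "model": self.name,
            "label": self.classes[best],
            "probabilities": dict(zip(self.classes, probs)),
        }


def serve(model: BackendModel, image: Any, context: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    # The serving code depends only on the protocol, not on the model's framework.
    return model.run(image, context or {})
```

Keeping the serving layer tied to a small interface rather than a specific framework is one way to let researchers swap models during ablation studies without touching the rest of the pipeline.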

Schedule a Live Demo

At Kitware, we collaborate with clinical and academic teams to design, fine-tune, and deploy custom AI models that bridge imaging and EHR data.

If you are interested in learning more about VolView Insight and the different ways we can customize it for your research and product development needs, click HERE to schedule your live demo today. Our team will walk you through the features and functionalities, showing how this tool can support your efforts.