Test Driven Development (TDD) : in a Nutshell : Session (0x01)

May 10, 2010

This series of posts covers the basic concepts of TDD, using excerpts from the book “Growing Object-Oriented Software, Guided by Tests” by Steve Freeman and Nat Pryce (published by Addison-Wesley).

[and Yes: taking excerpts from a book for the purpose of comments and criticism is Fair Use under US Copyright Laws

http://www.copyright.gov/title17/92chap1.html#107 ]

TDD in a Nutshell

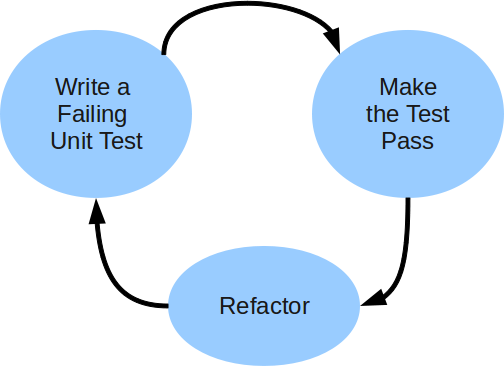

The cycle at the heart of TDD is:

- Write a test

- Write some code to get the test to pass

- Refactor the code to be as simple an implementation of the tested features as possible

- Repeat

as illustrated in the figure above.
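To make the cycle concrete, here is a minimal sketch in C++. The function `is_leap_year` and the test `test_is_leap_year` are hypothetical names chosen for illustration; they do not come from the book. The comments mark which step of the cycle each part would belong to.

```cpp
#include <cassert>

// Steps 2 and 3 (make it pass, then refactor): the simplest
// implementation that satisfies the checks below.
// is_leap_year is a hypothetical example function.
bool is_leap_year(int year) {
    if (year % 400 == 0) return true;   // e.g. 2000
    if (year % 100 == 0) return false;  // e.g. 1900
    return year % 4 == 0;               // e.g. 2008
}

// Step 1 (write a test): in a real TDD session these checks are
// written first, and they fail (or do not even compile) until
// is_leap_year exists and behaves as expected.
void test_is_leap_year() {
    assert(is_leap_year(2008));
    assert(!is_leap_year(2010));
    assert(!is_leap_year(1900));
    assert(is_leap_year(2000));
}
```

Calling `test_is_leap_year()` from a small `main` is enough to run it. Step 4 (repeat) would then add the next failing check before any new code is written.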

Tests provide feedback regarding

- Whether the system works

- Whether the system is well structured

By writing the tests first, we get double the benefit. The exercise of writing the tests:

- Makes us clarify the acceptance criteria for the next piece of work – we have to ask ourselves how we can tell when we are done (design)

- Encourages us to write loosely coupled components, so they can easily be tested in isolation and, at higher levels, combined together (design)

- Adds an executable description of what the code does (design)

Whereas running the tests:

- Detects errors while the context is fresh in our mind (implementation)

- Lets us know when we’ve done enough, discouraging “gold plating” and unnecessary features (design)

This feedback cycle can be summed up by the Golden Rule of TDD: “Never write new functionality without a failing test.”
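The point about loosely coupled components being easy to test in isolation can be sketched as follows. All the names here (`Clock`, `FixedClock`, `Greeter`) are hypothetical and only illustrate one possible approach, assuming the component receives its dependency through an interface rather than reaching for the system clock itself.

```cpp
#include <cassert>
#include <string>

// Hypothetical abstraction: the component depends on this interface,
// not on the real system clock.
struct Clock {
    virtual ~Clock() = default;
    virtual int hour() const = 0;  // expected range: 0..23
};

// Test double that always reports the hour it was constructed with.
struct FixedClock : Clock {
    explicit FixedClock(int h) : hour_(h) {}
    int hour() const override { return hour_; }
private:
    int hour_;
};

// Component under test. It is handed its dependency from the outside,
// so a test can substitute FixedClock for a real clock.
class Greeter {
public:
    explicit Greeter(const Clock& clock) : clock_(clock) {}
    std::string greeting() const {
        return clock_.hour() < 12 ? "Good morning" : "Good afternoon";
    }
private:
    const Clock& clock_;
};

// The test controls the time of day, so the result is deterministic.
void test_greeter_in_isolation() {
    FixedClock morning(9);
    FixedClock afternoon(15);
    assert(Greeter(morning).greeting() == "Good morning");
    assert(Greeter(afternoon).greeting() == "Good afternoon");
}
```

Had `Greeter` read the system time directly, the same test would pass or fail depending on when it was run. Writing the test first tends to expose that kind of coupling early.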


What framework are you guys using for unit testing C++ code? Can you point me towards some of your tests?

Ben,

We use the framework of CMake/CTest/CDash.

These tools enable us to perform multi-platform testing at large scale (hundreds of computers and thousands of tests run daily for any given project).

All of these tools are Open Source (distributed under the simplified BSD license).

To see the testing framework in action, please look at the following links:

1) ITK Dashboard:

http://public.kitware.com/dashboard.php?name=itk

(~1700 tests)

2) VTK Dashboard:

http://public.kitware.com/dashboard.php?name=vtk

(~1200 tests)

3) KDE Testing

http://techbase.kde.org/Development/Tutorials/Unittests#About_Unit_Testing

Instructions on how to use CTest/CDash are available at

http://www.cmake.org/Wiki/CMake_Testing_With_CTest
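As a rough sketch of what a test driven by CTest can look like: by default CTest runs a program and treats a zero exit code as a pass and a non-zero exit code as a failure. The names below (`add`, `run_checks`, `exit_code_for`) are hypothetical and are not taken from ITK or VTK. The sketch assumes a `main` that simply returns `exit_code_for(run_checks())`, with the resulting executable registered through CMake's `add_test` command.

```cpp
#include <cassert>
#include <cstdlib>
#include <iostream>

// Hypothetical function under test.
int add(int a, int b) { return a + b; }

// Runs each check and returns the number of failures, printing one
// line per failed check so the message shows up in the test log.
int run_checks() {
    int failures = 0;
    if (add(2, 3) != 5)  { std::cerr << "add(2, 3) != 5\n";  ++failures; }
    if (add(-1, 1) != 0) { std::cerr << "add(-1, 1) != 0\n"; ++failures; }
    return failures;
}

// Maps the failure count to a process exit code. A test program's
// main() would return this value, which is what CTest inspects.
int exit_code_for(int failures) {
    return failures == 0 ? EXIT_SUCCESS : EXIT_FAILURE;
}
```

Keeping the checks in a function that reports a count, rather than aborting at the first failed `assert`, means a single run can report every broken check.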

The CDash dashboard also includes reports on

a) Code coverage:

(see for example in ITK:)

http://www.cdash.org/CDash/index.php?project=Insight#Coverage

and

b) Dynamic Analysis:

(Valgrind analysis of memory leaks, uninitialized variables, ...)

(see for example in ITK:)

http://www.cdash.org/CDash/index.php?project=Insight#DynamicAnalysis

The mailing lists of CMake and CDash are good places for posting detailed questions about the tools.

A) http://www.cdash.org/cdash/help/mailing.html

B) http://www.cmake.org/cmake/help/mailing.html

Note that the tools above are essential for supporting the automation of the repetitive tasks involved in testing software. However, there is a human component that must be invested in creating the initial tests, verifying their initial output, monitoring the Dashboard on a daily basis, and going after the skull of developers who break the tests… a culture of “respect for the Dashboard” must be built into the developer community of any given project.