Point-cloud processing using VeloView: Automatic Lidar-IMU Calibration and Object Recognition

A few months ago, Actemium and Kitware were pleased to present their fruitful collaboration using a LiDAR to detect, locate and grasp objects on a construction site for semi-automated ground drilling. The project is now complete and the algorithms are running in production.

In this blog post, we provide details on the components, already released or soon to be, that were developed or improved along the way:

- PCL integration within ParaView and VeloView

- Improved SLAM algorithms that compute the Lidar motion purely from Lidar data

- Data-driven auto-calibration of the relative position and orientation between multiple sensors (here, Lidar vs. robot arm)

- Easy use of AI and Machine Learning tools

The developed algorithms use our open-source LiDAR-based SLAM algorithm available in VeloView 4.0 and the recently developed ParaView PCL Plugin available here.

Project description

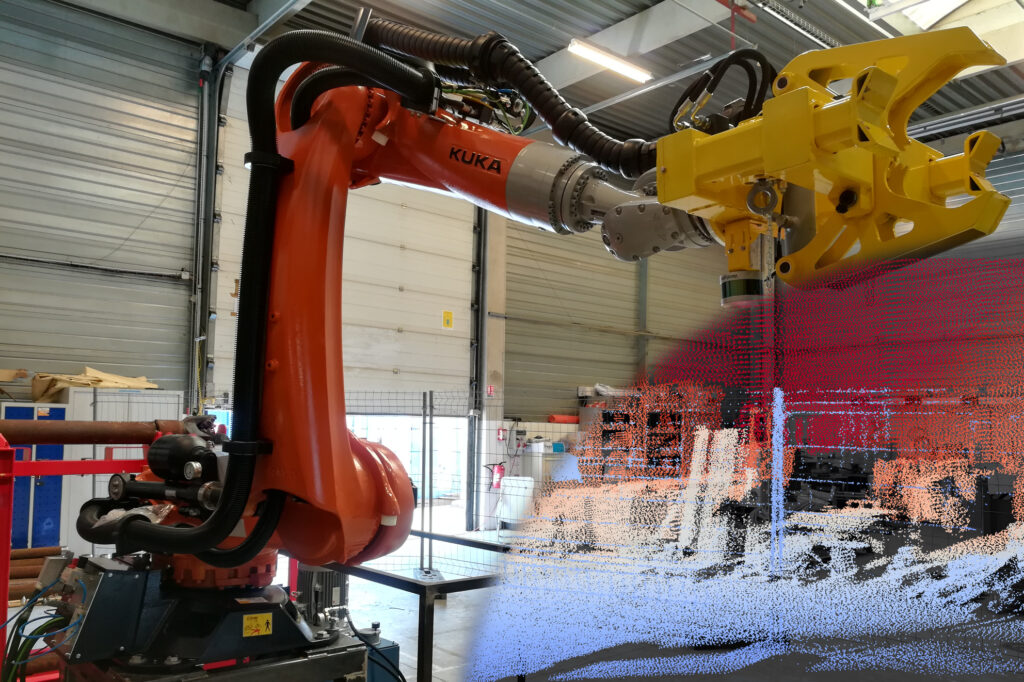

The collaboration aims to partially automate the ground drilling process, using a robot to detect and locate the ground driller and to handle the drilling tubes. A KUKA robot arm driven by Kitware vision algorithms inserts additional tubes into the ground driller head. Actemium chose a Velodyne VLP-16 LiDAR sensor as input and asked Kitware to develop the pattern recognition algorithms based on VeloView.

Operating outdoors on a muddy construction site, in all weather conditions, led to the choice of a LiDAR sensor, since LiDARs are insensitive to illumination changes and work in all weather. However, their output 3D point clouds are usually sparse.
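To put "sparse" into numbers, the short sketch below estimates the approximate gap between neighboring samples on a surface facing the sensor. It assumes nominal VLP-16 figures (16 channels over a 30° vertical field of view, hence 2° between rings, and 0.2° horizontal resolution at a 10 Hz rotation rate) and a flat-surface approximation; the ranges are illustrative, not project measurements.

```python
import math

# Assumed nominal VLP-16 figures: 16 channels over a 30 degree vertical field of
# view (2.0 degrees between rings) and 0.2 degree horizontal resolution at 10 Hz.
VERTICAL_RES_DEG = 2.0
HORIZONTAL_RES_DEG = 0.2


def sample_spacing(range_m: float, res_deg: float) -> float:
    """Approximate gap (meters) between neighboring samples on a surface
    facing the sensor at `range_m` meters, for an angular step of `res_deg`."""
    return range_m * math.tan(math.radians(res_deg))


for r in (1.0, 3.0, 5.0):
    between_rings = sample_spacing(r, VERTICAL_RES_DEG)
    along_ring = sample_spacing(r, HORIZONTAL_RES_DEG)
    print(f"{r:.0f} m: {between_rings * 100:.1f} cm between rings, "
          f"{along_ring * 100:.2f} cm along a ring")
```

Under these assumptions, a surface 3 m away is sampled about every centimeter along a ring but only about every 10 cm between rings, so a single frame crosses a thin structure such as a tube with only a handful of scan lines.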

By combining the sensor motion, either estimated by algorithms such as SLAM or directly measured, with an accurate geometric calibration, successive frames can be aggregated into a dense and sharp point cloud.
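The aggregation step can be sketched as chaining two rigid transforms for every frame: the time-varying platform pose (from SLAM or from the robot's own measurements) and the fixed Lidar-to-platform calibration. The minimal Python sketch below uses 4x4 homogeneous matrices stored as nested lists; the function names and the frame conventions (`world_T_platform`, `platform_T_lidar`) are illustrative choices of ours, not VeloView's API.

```python
import math
from typing import List, Sequence, Tuple

Matrix4 = List[List[float]]
Point = Tuple[float, float, float]


def pose(yaw: float, tx: float, ty: float, tz: float) -> Matrix4:
    """Rigid transform: rotation of `yaw` radians about z, then a translation."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0, tx],
            [s, c, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]


def matmul(a: Matrix4, b: Matrix4) -> Matrix4:
    """Compose two 4x4 homogeneous transforms (a applied after b)."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def invert(t: Matrix4) -> Matrix4:
    """Inverse of a rigid transform: [R | t]^-1 = [R^T | -R^T t]."""
    rt = [[t[j][i] for j in range(3)] for i in range(3)]
    tr = [-sum(rt[i][k] * t[k][3] for k in range(3)) for i in range(3)]
    return [rt[i] + [tr[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def apply(t: Matrix4, p: Point) -> Point:
    """Apply a homogeneous transform to a 3D point."""
    x, y, z = p
    return tuple(t[i][0] * x + t[i][1] * y + t[i][2] * z + t[i][3] for i in range(3))


def aggregate(frames: Sequence[Sequence[Point]],
              world_T_platform: Sequence[Matrix4],
              platform_T_lidar: Matrix4) -> List[Point]:
    """Express each Lidar frame in the fixed world frame and concatenate them.

    world_T_platform[i]: platform pose at frame i (SLAM estimate or robot reading).
    platform_T_lidar: the fixed extrinsic calibration, identical for all frames.
    """
    cloud: List[Point] = []
    for points, w_T_p in zip(frames, world_T_platform):
        w_T_l = matmul(w_T_p, platform_T_lidar)  # chain: world <- platform <- Lidar
        cloud.extend(apply(w_T_l, p) for p in points)
    return cloud


if __name__ == "__main__":
    calib = pose(0.1, 0.05, 0.0, 0.2)  # hypothetical Lidar mounting
    poses = [pose(0.0, 0.0, 0.0, 0.0), pose(math.pi / 2, 1.0, 0.0, 0.0)]
    target = (1.0, 2.0, 0.5)  # one static point, seen from both poses
    frames = [[apply(invert(matmul(p, calib)), target)] for p in poses]
    print(aggregate(frames, poses, calib))  # both entries close to target
```

If `platform_T_lidar` is wrong, the same physical point lands at different world positions in different frames, which shows up as a blurred or doubled cloud; this is why the calibration matters as much as the motion estimate.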

Video: Presentation of the automated drilling pipeline

VeloView: ParaView-based open-source software

VeloView is a free, open-source, ParaView-based application developed by Kitware. It is designed to visualize and analyze raw data from LiDAR sensors as 3D point clouds. Customer-specific versions are regularly developed, with enhancements such as:

– Drivers: IMU, GPS or any specific hardware

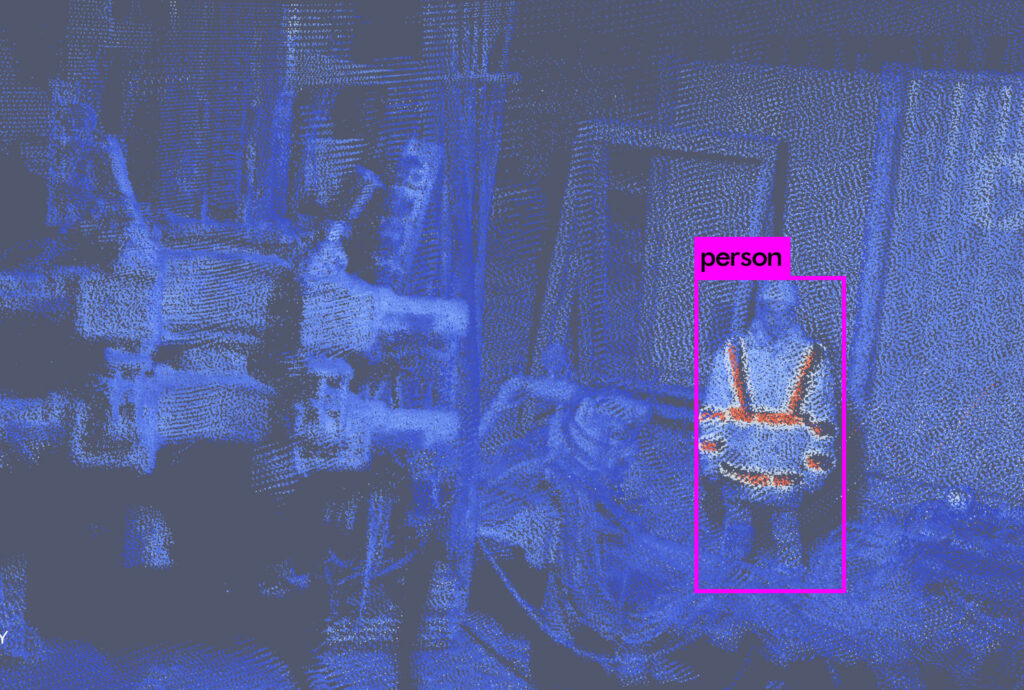

– Algorithms: point cloud analysis, deep learning for object recognition (pedestrians, traffic signs, vehicles, …), semantic classification, SLAM, dense 3D reconstruction, …

– Custom graphical interface: Customer process simplification, or to show the live detection of objects or pedestrians

Automatic and accurate geometric calibration of multiple sensors

When moving past the prototyping stage, we faced a new challenge: manually measuring the position and orientation of the Lidar on the arm was far too inaccurate, as we needed sub-centimeter and sub-degree accuracy.
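The sub-degree requirement follows from a lever-arm effect: an orientation error θ moves a point at range r along a chord of length 2r·sin(θ/2) ≈ rθ, and any translation error adds to that in the worst case. A small sketch, where the 2 m working range is an assumed, illustrative value rather than a project figure:

```python
import math


def displacement_error(range_m: float, angle_err_deg: float,
                       translation_err_m: float = 0.0) -> float:
    """Worst-case shift (meters) of a point seen at `range_m` meters when the
    sensor orientation is off by `angle_err_deg` degrees and its position by
    `translation_err_m` meters."""
    theta = math.radians(angle_err_deg)
    # A rotation by theta moves a point at distance r along a chord of length
    # 2 r sin(theta / 2); in the worst case the translation error adds to it.
    return 2.0 * range_m * math.sin(theta / 2.0) + translation_err_m


# Illustrative working range of 2 m (an assumption).
for err_deg in (1.0, 0.5, 0.1):
    shift = displacement_error(2.0, err_deg)
    print(f"{err_deg:.1f} deg at 2 m -> {shift * 1000:.1f} mm")
```

With these assumptions, a 1° error shifts a point by roughly 3.5 cm and a 0.1° error by roughly 3.5 mm, so only a sub-degree calibration keeps the aggregated cloud within sub-centimeter tolerances.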

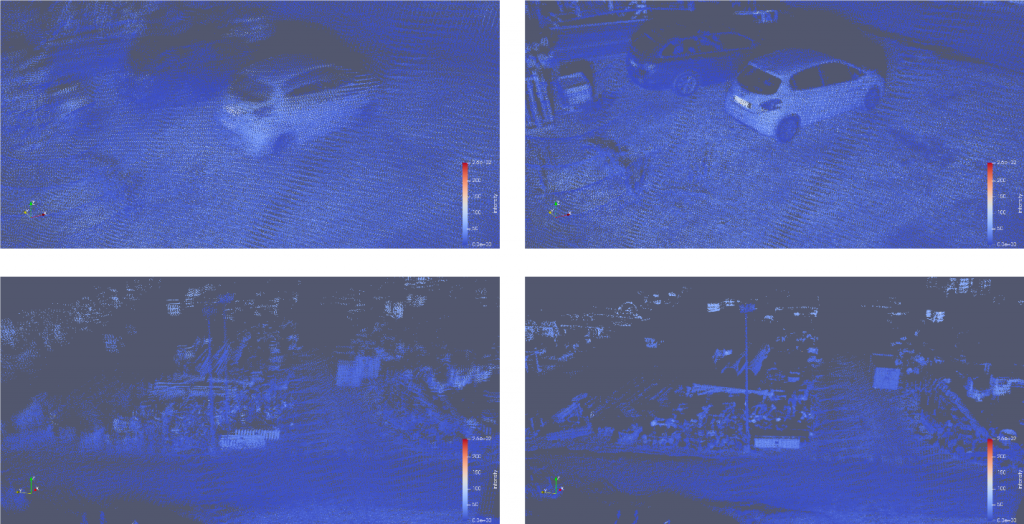

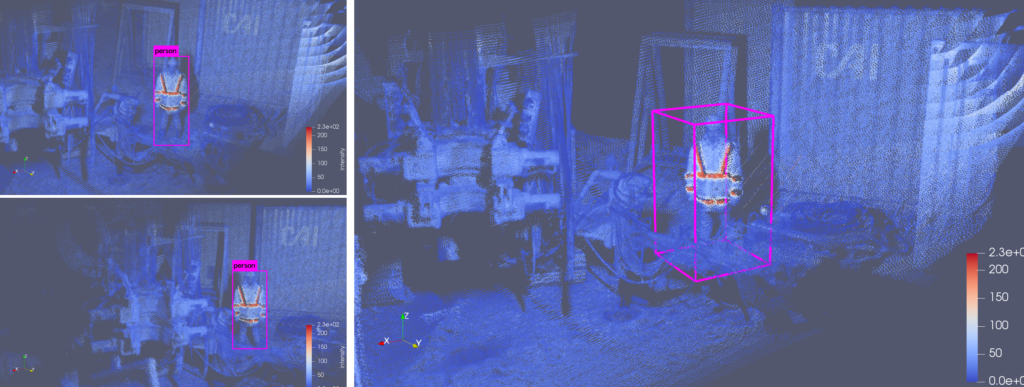

We decided to adapt and improve an automatic geometric calibration tool, originally developed for a car-based Lidar scanning project, to perform this LiDAR-to-robot-arm calibration. The geometric calibration is required to aggregate the LiDAR 3D points using the poses reported by the robot arm over time. Aggregating them in a common fixed reference frame yields a dense and sharp point cloud (see Figure 1, Figure 3 and Figure 4).

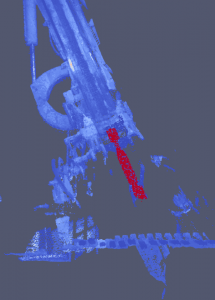

Right: Acquired point cloud of the drill.

With these algorithms, we can now automatically calibrate a pair of sensors, where each sensor can be a LiDAR, an RGB camera or an IMU / SLAM sensor. In our example, the estimated calibration yields a dense, high-accuracy point cloud from which measurements can be taken with millimeter accuracy (see Figure 3).

Right: calibration accurately estimated with our algorithms, resulting in a sharp point cloud.
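One way to make such a calibration data-driven is to pick the extrinsic parameters that make the aggregated cloud of a static scene as sharp as possible. The sketch below illustrates that principle in a deliberately reduced setting: 2D, static point targets with known correspondences, a known lever arm, and only the Lidar yaw left to estimate, found by a coarse-to-fine grid search. It is a hypothetical illustration, not the calibration tool described in this post, and all names and synthetic values are our own.

```python
import math
from typing import Sequence, Tuple

Point2D = Tuple[float, float]
Pose2D = Tuple[float, float, float]  # (x, y, heading in radians)


def to_parent(pose: Pose2D, p: Point2D) -> Point2D:
    """Map a point from a child frame into its parent frame."""
    x, y, th = pose
    c, s = math.cos(th), math.sin(th)
    return (x + c * p[0] - s * p[1], y + s * p[0] + c * p[1])


def to_child(pose: Pose2D, p: Point2D) -> Point2D:
    """Inverse of to_parent: map a parent-frame point into the child frame."""
    x, y, th = pose
    c, s = math.cos(th), math.sin(th)
    dx, dy = p[0] - x, p[1] - y
    return (c * dx + s * dy, -s * dx + c * dy)


def blur(yaw: float, lever: Point2D, arm_poses: Sequence[Pose2D],
         observations: Sequence[Sequence[Point2D]]) -> float:
    """Scatter of the aggregated cloud for a candidate Lidar yaw (radians).

    observations[i][k] is static target k seen by the Lidar at arm pose i.
    With the right calibration every view of a target lands on the same
    world point, so the summed squared distance to each centroid is ~0.
    """
    flange_T_lidar = (lever[0], lever[1], yaw)
    cost = 0.0
    for k in range(len(observations[0])):
        pts = [to_parent(pose, to_parent(flange_T_lidar, obs[k]))
               for pose, obs in zip(arm_poses, observations)]
        cx = sum(p[0] for p in pts) / len(pts)
        cy = sum(p[1] for p in pts) / len(pts)
        cost += sum((p[0] - cx) ** 2 + (p[1] - cy) ** 2 for p in pts)
    return cost


def calibrate_yaw(lever, arm_poses, observations, steps=(1.0, 0.1, 0.01)) -> float:
    """Coarse-to-fine grid search for the yaw (degrees) that minimizes blur."""
    best, half_span = 0.0, 180.0
    for step in steps:
        n = round(half_span / step)
        candidates = [best + i * step for i in range(-n, n + 1)]
        best = min(candidates,
                   key=lambda d: blur(math.radians(d), lever, arm_poses, observations))
        half_span = step  # next pass searches +/- one coarse step around best
    return best


if __name__ == "__main__":
    # Synthetic data: hypothetical lever arm, targets and arm poses.
    lever = (0.10, 0.05)
    true_calib = (lever[0], lever[1], math.radians(12.34))
    targets = [(2.0, 1.0), (1.5, -0.5), (3.0, 0.5)]
    arm_poses = [(0.0, 0.0, 0.0), (0.3, 0.1, 0.4), (-0.2, 0.4, -0.3), (0.1, -0.3, 1.0)]
    observations = [[to_child(true_calib, to_child(pose, w)) for w in targets]
                    for pose in arm_poses]
    print(f"estimated yaw: {calibrate_yaw(lever, arm_poses, observations):.2f} deg")
```

A real tool would estimate all six degrees of freedom with a continuous optimizer and would not rely on known correspondences; the grid search is used here only because it keeps the sketch short and easy to check.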

Point-cloud processing using the ParaView PCL Plugin

We used our recently developed PCL Plugin for ParaView to take advantage of the Point Cloud Library (PCL) within VeloView. Once the point clouds are expressed in the robot reference frame, the goal is to detect and locate the drill and the tubes in the 3D scene. We register a 3D model of the drill to the acquired scene in two steps. First, we extract 3D geometric keypoints and compute PCL Fast Point Feature Histogram (FPFH) descriptors to perform a coarse registration. Then, we refine the result using the Iterative Closest Point (ICP) strategy we designed for our SLAM algorithm. Combined with the estimated calibration, this two-step localization gives the position and orientation of the drill in the robot reference frame with millimeter and tenth-of-a-degree accuracy.
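The refinement step can be illustrated with the simplest ICP variant: point-to-point ICP in 2D, using brute-force nearest neighbors and a closed-form rigid fit at each iteration. This is a hedged sketch, not the ICP used in the SLAM algorithm (which works in 3D); the coarse initial pose that FPFH matching would provide is simply passed in as an argument, and the function names and L-shaped test model are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point2D = Tuple[float, float]
Pose2D = Tuple[float, float, float]  # (theta, tx, ty)


def transform(points: Sequence[Point2D], pose: Pose2D) -> List[Point2D]:
    """Rotate by theta, then translate by (tx, ty)."""
    theta, tx, ty = pose
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def fit_rigid_2d(src: Sequence[Point2D], dst: Sequence[Point2D]) -> Pose2D:
    """Least-squares rigid transform mapping src[i] onto dst[i]."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy
        dot += px * qx + py * qy
        cross += px * qy - py * qx
    theta = math.atan2(cross, dot)  # optimal rotation angle in closed form
    c, s = math.cos(theta), math.sin(theta)
    # The translation maps the rotated source centroid onto the target centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def icp_2d(model: Sequence[Point2D], scene: Sequence[Point2D],
           init: Pose2D, iterations: int = 20) -> Pose2D:
    """Refine `init`, a coarse pose of `model` inside `scene`."""
    pose = init
    for _ in range(iterations):
        moved = transform(model, pose)
        # Brute-force nearest scene point for every transformed model point.
        matches = [min(scene, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
                   for p in moved]
        # Re-fit from the untransformed model, so the result is an absolute pose.
        pose = fit_rigid_2d(model, matches)
    return pose


if __name__ == "__main__":
    # Hypothetical L-shaped model sampled every 10 cm, placed at a known pose.
    model = [(0.1 * i, 0.0) for i in range(11)] + [(0.0, 0.1 * i) for i in range(1, 8)]
    scene = transform(model, (0.3, 0.5, -0.2))
    print(icp_2d(model, scene, init=(0.28, 0.49, -0.19)))
```

Because ICP only converges locally, it needs a starting pose close enough that most nearest-neighbor matches are correct; providing that starting pose is the role of the FPFH-based coarse registration.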

Conclusion

By using a Lidar as a weatherproof outdoor 3D sensor whose data can be processed robustly, this project successfully automated the tube positioning process.

This spares workers from having to adjust the tubes dangerously by hand, and opens the door to many other applications in the field.

Pierre Guilbert is an R&D Engineer in Computer Vision at Kitware, France.

He has experience in deep learning classification and regression for medical imagery. He is familiar with 2D and 3D processing techniques and related mathematical tools. Since 2016, he has worked at Kitware on multiple projects related to multiple-view geometry, SLAM, point-cloud analysis and sensor fusion.

Bastien Jacquet, PhD is a Technical Leader at Kitware, France.

Since 2016, he has promoted and extended Kitware’s Computer Vision expertise in Europe. He has experience in various Computer Vision and Machine Learning subdomains, and he is an expert in 3D Reconstruction, SLAM, and related mathematical tools.

Update Q1 2025: VeloView is no longer maintained, but the same processing can be developed in LidarView for any of its supported sensors (the list is available in the LidarView repository).

The PCL plugin referred to above is also obsolete; its functionality has been ported to the plugin located here: https://gitlab.kitware.com/LidarView/plugins/pcl-plugin

Feel free to contact us to develop such projects for your use case!

When is VeloView 4.0 going to be released? Or is there anywhere I can download or build it to test the SLAM features (without an IMU/GPS)?

Hello Ellie,

VeloView 4.0 should be released soon. In the meantime, you can download and build the sources from the public GitHub repository:

https://github.com/Kitware/VeloView

Specific build instructions are available in issue #51:

https://github.com/Kitware/VeloView/issues/51

Regards,

Pierre Guilbert

How can I convert .pcap to .las? Can you guys offer any advice?

Hi,

Can you share some details on the automatic geometric calibration part? It looks great! Will it be part of VeloView 4.0?

Thanks!

Hello,

The SLAM code is already online within LidarView, on github.com/Kitware/LidarView

Admittedly, making it work is not yet a one-click operation.

The automatic geometric calibration has the same status for now.

So you can either inspect the code and tune it to your needs, or we offer support contracts to help you do so.

Best regards,

Bastien Jacquet,

LidarView Project Leader

Kitware Europe