Open Source Tools for Fighting Disinformation

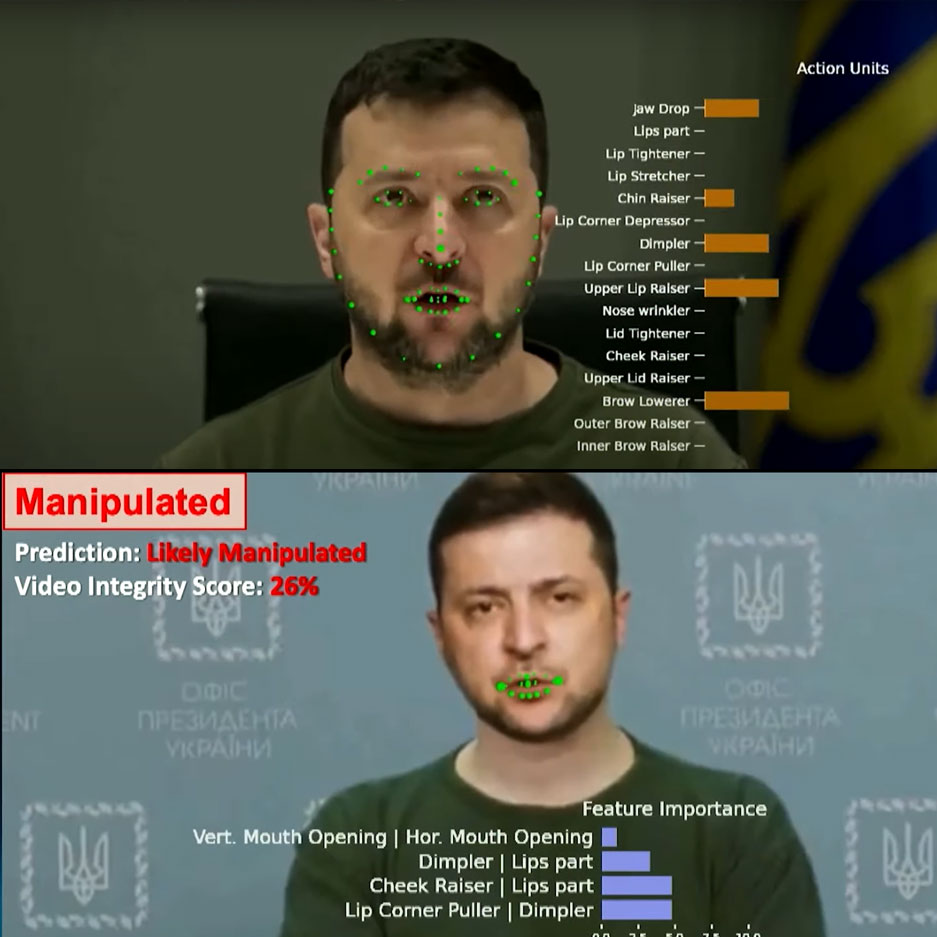

Deepfakes and disinformation can move financial markets, influence public opinion, and scam businesses and individuals out of millions of dollars. The Semantic Forensics (SemaFor) program is a DARPA-funded initiative to create comprehensive forensic technologies that help mitigate online threats perpetuated via synthetic and manipulated media. Over the last eight years, Kitware has helped DARPA create a powerful set of tools for analyzing whether media has been artificially generated or manipulated. Kitware and DARPA are now bringing those tools out of the lab to defend digital authenticity in the real world.

Kitware has a history of building image and video forensics algorithms that defend against disinformation by detecting many types of manipulation, beginning with DARPA’s Media Forensics (MediFor) program. Building on this foundation, our team expanded its focus to include multimodal analysis of text, audio, and video under the SemaFor program. For more information about Kitware’s contributions to SemaFor, check out the “Voices from DARPA” podcast episode “Demystifying Deepfakes,” which features Arslan Basharat, assistant director of computer vision at Kitware, as a guest speaker.

DARPA recently announced two initiatives related to the SemaFor program: AI FORCE and the Analytic Catalog. The AI Forensics Open Research Challenge Evaluations (AI FORCE) are a series of publicly available challenges aimed at developing defensive forensic techniques related to generative AI capabilities. The Analytic Catalog is a collection of open source tools available to the research community and industry to advance the state of the art in digital forensics. In keeping with Kitware’s open source software heritage, we contributed significantly to the launch of the Analytic Catalog, providing several detection and attribution analytics from our team for images, video, text, and multimodal news articles. We plan to add more cutting-edge techniques for combatting disinformation to this catalog in the future.

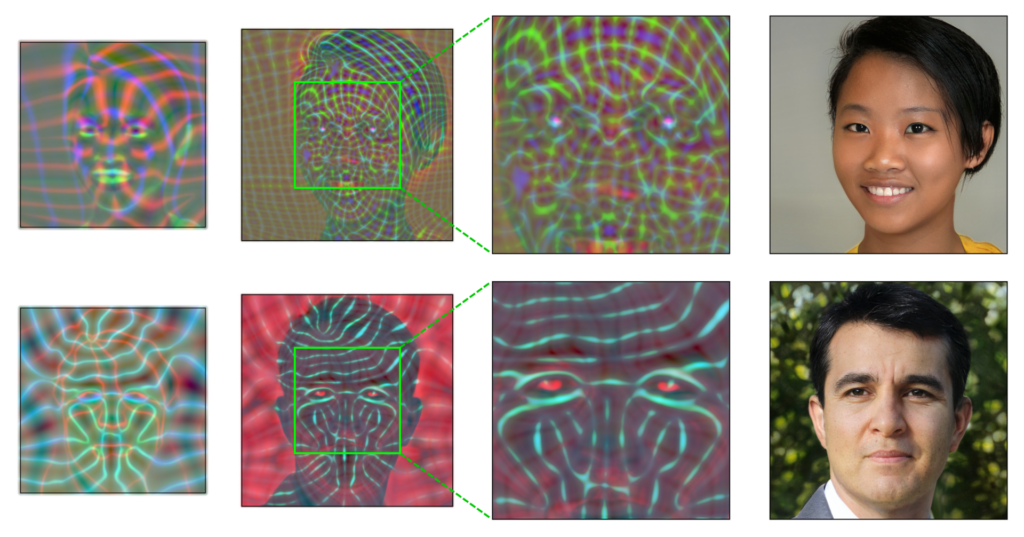

For instance, one of these analytics helps detect AI-generated images, such as those created by generative adversarial networks (GANs). More recently, we have also developed methods for newer AI-generation techniques such as diffusion-based generators.

In addition to our work on photos and video, our team members have contributed to tools for detecting and analyzing other forms of disinformation, such as synthetic audio and AI-generated tweets and news articles. These tools are crucial for combating the spread of false information and propaganda on social media and in other digital formats.

We are also working to build a community of researchers and practitioners who are dedicated to digital forensics. By providing a platform for researchers, developers, and organizations to work together, the project has laid the groundwork for a more informed and resilient digital future, where deepfakes and disinformation can be effectively detected and defanged.

If you are interested in working with Kitware to detect and analyze deepfakes or other forms of disinformation, please send us a message. We’d be happy to schedule a meeting to discuss your project and how we can leverage our expertise and open source tools to help you meet your goals.

This material is based upon work supported by the Defense Advanced Research Projects Agency (DARPA) under Contract No. HR001120C0123. Any opinions, findings and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the Defense Advanced Research Projects Agency (DARPA).

1. K. Regmi, M. Kirchner, and A. Basharat, “Generalizable synthetic image detection,” in Proceedings of the National Security Sensor and Data Fusion Committee (NSSDF), 2023.