Leaders in Artificial Intelligence, Machine Learning, and Computer Vision

WACV is a premier international computer vision conference that attracts vision researchers and practitioners from around the world. Although it is an academic conference, WACV emphasizes papers on systems and applications with significant, interesting vision components. It is also highly selective, accepting fewer than 30% of submissions.

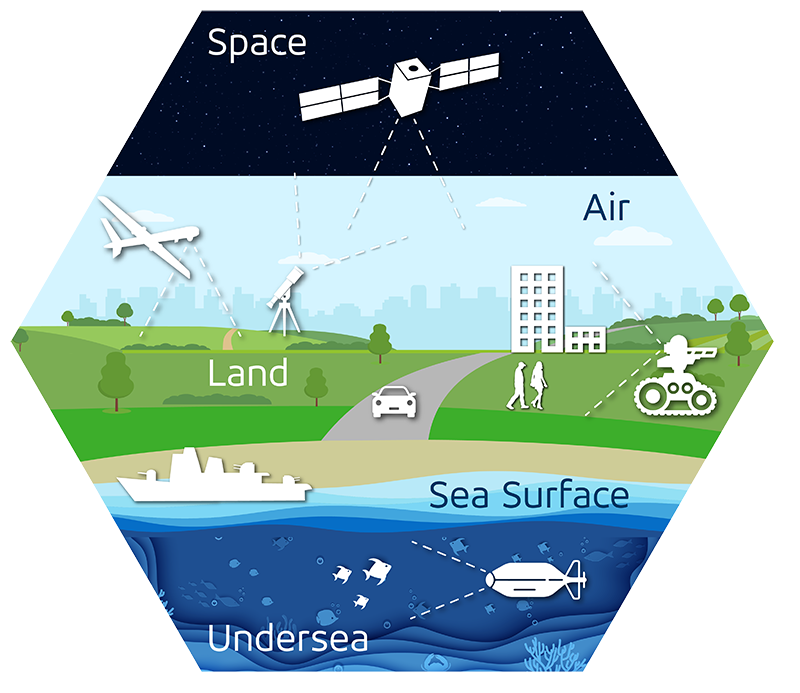

Kitware has supported WACV over the past few years as a sponsor, exhibitor, and presenter. This year, we are a Gold-level sponsor and will have an in-person exhibit space where we will highlight our ongoing research. Visit us to learn how we apply computer vision to solve challenging problems across sea, air, space, terrestrial, and internet domains. We are proud to have three papers accepted and to be co-chairing four workshops at WACV this year (see the “Events Schedule” section below for more information).

Request a meeting with our team to discuss your project and how we can help you leverage our open source tools.

Learn more about our computer vision capabilities

Events Schedule

In this paper, we present the Multi-view Extended Videos with Identities (MEVID) dataset for large-scale video person re-identification (ReID) in the wild. To our knowledge, MEVID is the most varied video person ReID dataset. It spans an extensive indoor and outdoor environment across nine unique dates in a 73-day window, various camera viewpoints, and entity clothing changes. While other datasets have more unique identities, MEVID emphasizes a richer set of information about each individual. Because MEVID is based on the MEVA video dataset, it also inherits data that is intentionally demographically balanced to the continental United States. To accelerate the annotation process, we developed a semi-automatic annotation framework and GUI that combines state-of-the-art, real-time models for object detection, pose estimation, person ReID, and multi-object tracking. Link to paper

Track: 4B Tracking & Reidentification

Room: Naupaka VII
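Video person ReID datasets such as MEVID are commonly evaluated by ranking gallery tracklets by feature similarity to a query and reporting metrics such as rank-1 accuracy. The following is a minimal sketch of that scoring step. It assumes each tracklet has already been summarized as a single feature vector (for example, by averaging per-frame embeddings). The functions are illustrative helpers, not part of any MEVID toolkit.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_gallery(query_feat, gallery):
    """Return gallery identity labels sorted from most to least similar to the query.

    `gallery` is a list of (identity_label, feature_vector) pairs.
    """
    scored = [(cosine_similarity(query_feat, feat), label) for label, feat in gallery]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [label for _, label in scored]


def rank1_accuracy(queries, gallery):
    """Fraction of queries whose top-ranked gallery entry has the same identity.

    `queries` is a list of (identity_label, feature_vector) pairs.
    """
    hits = sum(1 for label, feat in queries if rank_gallery(feat, gallery)[0] == label)
    return hits / len(queries)


gallery = [("person_a", [1.0, 0.0]), ("person_b", [0.0, 1.0])]
queries = [("person_a", [0.9, 0.1]), ("person_b", [0.2, 0.8])]
print(rank1_accuracy(queries, gallery))  # 1.0
```

Real benchmarks usually also report mAP and exclude same-camera matches. Those details are omitted here for brevity.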

Reconstructing Humpty Dumpty: Multi-feature Graph Autoencoder for Open Set Action Recognition

Authors: Dawei Du (Kitware), Ameya Shringi, Christopher Funk (Kitware), Anthony Hoogs (Kitware)

This paper focuses on action recognition datasets and algorithms for open set problems, where test samples may be drawn from either known or unknown classes. Existing open set action recognition methods typically extend closed set methods by adding post hoc analysis of classification scores or feature distances. They do not capture the relations among all the video clip elements. Our approach uses reconstruction error to determine the novelty of a video. Videos from unknown classes are harder to put back together and therefore have a higher reconstruction error than videos from known classes. Our solution is a novel graph-based autoencoder that accounts for contextual and semantic relations among the clip pieces for improved reconstruction. Link to paper

Track: 8B Action Recognition

Room: Naupaka VII
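The decision rule described above can be illustrated with a short sketch. A clip is flagged as novel when its reconstruction error exceeds a threshold. This sketch treats the autoencoder as a black box and assumes its reconstructions are already available. The paper's graph autoencoder is not reproduced here. Calibrating the threshold as a quantile of errors on known-class validation clips is our own illustrative assumption.

```python
def reconstruction_error(features, reconstruction):
    """Mean squared error between a clip's feature vector and its reconstruction."""
    return sum((f - r) ** 2 for f, r in zip(features, reconstruction)) / len(features)


def calibrate_threshold(known_errors, quantile=0.95):
    """Pick a novelty threshold as an empirical quantile of known-class errors (assumption)."""
    ordered = sorted(known_errors)
    index = min(int(quantile * len(ordered)), len(ordered) - 1)
    return ordered[index]


def is_novel(features, reconstruction, threshold):
    """Flag a clip as unknown-class when its reconstruction error exceeds the threshold."""
    return reconstruction_error(features, reconstruction) > threshold


threshold = calibrate_threshold([0.01, 0.02, 0.03, 0.04])  # 0.04
print(is_novel([1.0, 0.0], [0.5, 0.5], threshold))  # True: error 0.25 is poorly reconstructed
print(is_novel([1.0, 0.0], [0.9, 0.1], threshold))  # False: error about 0.01
```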

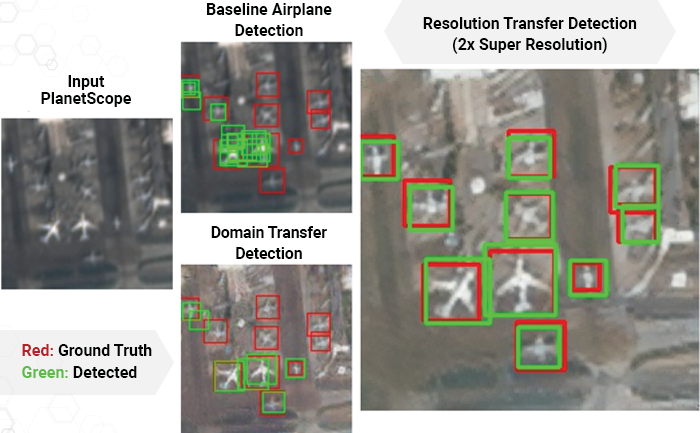

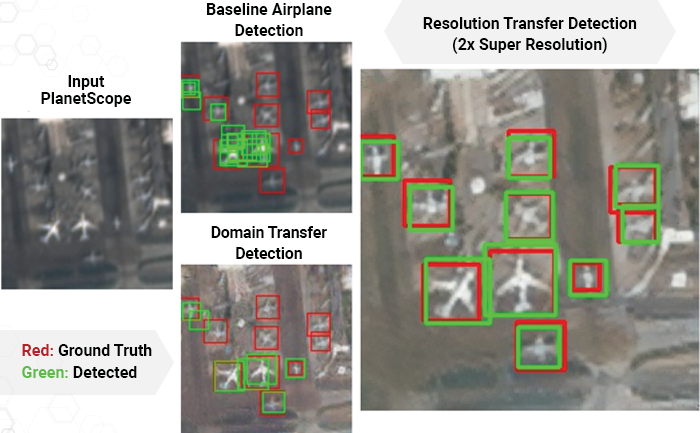

Handling Image and Label Resolution Mismatch in Remote Sensing

Authors: Scott Workman, Armin Hadzic, M. Usman Rafique (Kitware)

There are unique challenges to semantic segmentation in the remote sensing domain. For example, differences in ground sample distance result in a resolution mismatch between overhead imagery and ground-truth label sources. This paper presents a method supervised with low-resolution labels (without upsampling) that takes advantage of an exemplar set of high-resolution labels to guide the learning process. Our method incorporates region aggregation, adversarial learning, and self-supervised pre-training to generate fine-grained predictions without requiring high-resolution annotations. Extensive experiments demonstrate the real-world applicability of our approach. Link to paper

Track: 9B Remote Sensing, Agriculture and Biology, Embedded & Real-Time, Few-Shot Learning

Room: Naupaka VII
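One plausible form of the region aggregation idea is sketched here. High-resolution per-pixel class probabilities are averaged over the block of pixels covered by each low-resolution label cell. The loss is then computed against the coarse label directly, so the label never needs to be upsampled. This sketch assumes each low-resolution cell covers an integer `factor` x `factor` block and uses mean pooling. It omits the adversarial and self-supervised components and is not the paper's implementation.

```python
import math


def aggregate_to_low_res(probs, factor):
    """Average high-res per-pixel class probabilities over factor x factor blocks.

    `probs[y][x]` is a list of class probabilities for one high-res pixel.
    Returns a grid with one averaged probability vector per low-res label cell.
    """
    height, width = len(probs), len(probs[0])
    num_classes = len(probs[0][0])
    low_res = []
    for by in range(0, height, factor):
        row = []
        for bx in range(0, width, factor):
            totals = [0.0] * num_classes
            count = 0
            for y in range(by, min(by + factor, height)):
                for x in range(bx, min(bx + factor, width)):
                    for c in range(num_classes):
                        totals[c] += probs[y][x][c]
                    count += 1
            row.append([t / count for t in totals])
        low_res.append(row)
    return low_res


def low_res_cross_entropy(probs, labels, factor, eps=1e-12):
    """Mean cross-entropy between aggregated predictions and low-res integer class labels."""
    aggregated = aggregate_to_low_res(probs, factor)
    losses = [
        -math.log(max(aggregated[i][j][labels[i][j]], eps))
        for i in range(len(labels))
        for j in range(len(labels[0]))
    ]
    return sum(losses) / len(losses)


# A 2x2 high-res prediction (2 classes) supervised by a single low-res label of class 0.
probs = [[[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [1.0, 0.0]]]
print(aggregate_to_low_res(probs, 2))  # [[[0.75, 0.25]]]
print(low_res_cross_entropy(probs, [[0]], 2))  # -log(0.75)
```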

Workshop on Maritime Computer Vision

Co-organizer: Matthew Dawkins (Kitware)

Over the past few years, many computer vision applications have emerged in the maritime and freshwater domains. Autonomous vehicles have made maritime environments more accessible. They open the door to automation on busy waterways and shipping routes as well as in airborne applications. Computer vision plays an essential role in accurate navigation when these vehicles operate in busy traffic or close to shore. This workshop aims to bring together maritime computer vision researchers and promote the deployment of modern computer vision approaches in the airborne and surface water domains.

2nd Workshop on Dealing with the Novelty in Open Worlds

Co-chairs: Christopher Funk, Ph.D. (Kitware), Dawei Du, Ph.D. (Kitware)

Computer vision algorithms are often developed inside a closed-world paradigm (e.g., recognizing objects from a fixed set of categories). However, the real world is open and changes constantly and dynamically. As a result, most computer vision algorithms cannot detect these changes and continue to perform their tasks, producing incorrect and sometimes misleading predictions. Many real-world applications considered at WACV must deal with changing worlds where various kinds of novelty are introduced (e.g., new classes of objects). In this workshop, we aim to facilitate research directions that operate well in the open world while maintaining performance in the closed world. We will explore mechanisms to measure competence at recognizing and adapting to novelty.

This workshop will cover topics related to the application of computer vision in real-world surveillance and the challenges associated with it. It will also cover mitigation strategies in areas such as object detection, scene understanding, and super-resolution. The workshop will also address legal and ethical issues of computer vision applications in these real-world scenarios, such as detecting bias with respect to gender or race.

Long-Range Recognition

Co-chair: Scott McCloskey, Ph.D. (Kitware)

Vision-based recognition in uncontrolled environments has been a topic of interest for researchers for decades. Large standoff distances (lateral and/or vertical) between sensing platforms and the objects being sensed add new challenges to this area. This workshop will cover some of the focused research programs addressing this issue, along with the supporting challenges of data collection and curation. It will also highlight architectures for multimodal recognition that show promise for strong performance. The workshop also aims to develop an implicit consensus on current best practices for data-related matters and to identify topics that need new, focused attention.

Kitware is Hiring

3D Computer Vision Researcher

(Clifton Park NY, Carrboro NC, Arlington VA, Minneapolis MN, Remote)

Cleared Computer Vision C++ Developer

(Clifton Park NY, Arlington VA)

Cleared Machine Learning Engineer

(Clifton Park NY, Arlington VA)

Computer Vision C++ Developer

(Clifton Park NY, Arlington VA)

Computer Vision Research Internship

(Clifton Park NY, Carrboro NC, Arlington VA, Minneapolis MN, Remote)

Computer Vision Researcher

(Clifton Park NY, Carrboro NC, Arlington VA, Minneapolis MN, Remote)

Machine Learning Engineer

(Clifton Park NY, Carrboro NC, Arlington VA, Minneapolis MN, Remote)

Technical Leader – Natural Language Processing (NLP)

(Clifton Park NY, Carrboro NC, Arlington VA, Minneapolis MN, Remote)

Technical Leader of Computer Vision

Kitware’s Computer Vision Areas of Expertise

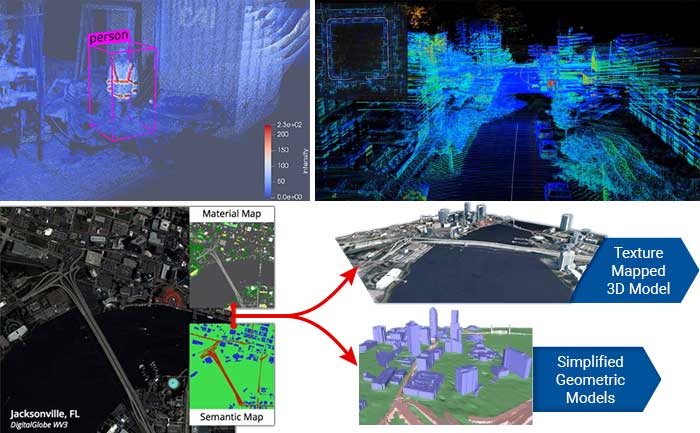

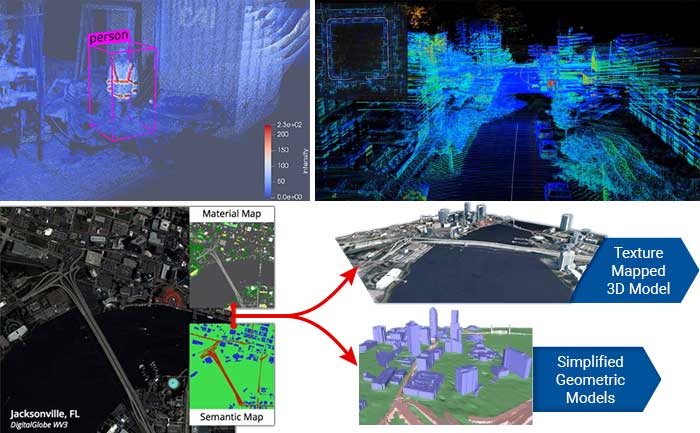

3D Reconstruction, Point Clouds, and Odometry

Kitware’s algorithms can extract 3D point clouds and surface meshes from video or images, either without metadata or calibration information or exploiting them when available. Operating on these 3D datasets, or on others from LiDAR and other depth sensors, our methods estimate scene semantics and 3D reconstruction jointly to maximize the accuracy of object classification, visual odometry, and 3D shape. Our open source 3D reconstruction toolkit, TeleSculptor, continues to evolve, incorporating advancements that automatically analyze, visualize, and make measurements from images and video. LiDARView, another open source toolkit developed specifically for LiDAR data, performs 3D point cloud visualization and analysis, fusing data, techniques, and algorithms to provide SLAM and other capabilities.

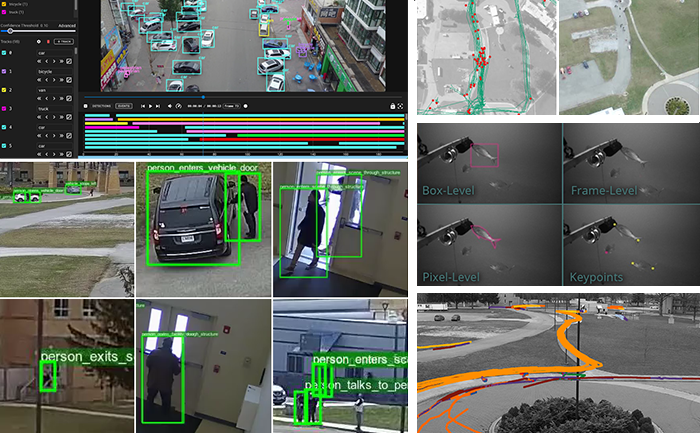

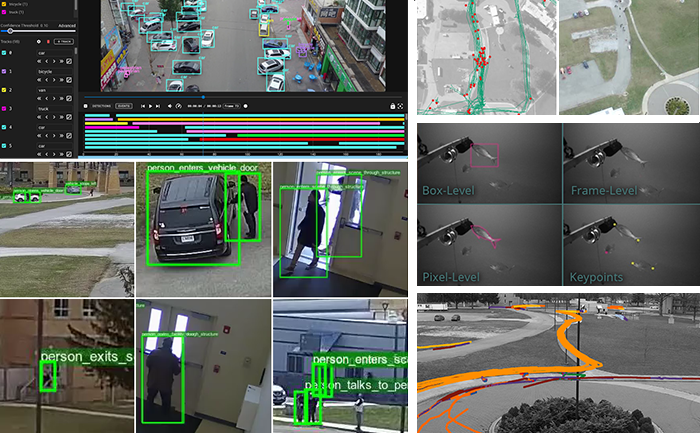

Complex Activity, Event, and Threat Detection

Kitware’s tools detect high-value events, behaviors, and anomalies by analyzing low-level actions and events in complex environments. Our algorithms use data from sUAS, fixed security cameras, WAMI, FMV, MTI, and various sensing modalities such as acoustic, Electro-Optical (EO), and Infrared (IR). They recognize actions like picking up objects, vehicles starting/stopping, and complex interactions such as vehicle transfers. We leverage both conventional deep learning models for computer vision and Vision-Language Models (VLMs) for enhanced scene understanding and context-aware activity recognition. For sUAS, our tools provide precise tracking and activity analysis. For fixed security cameras, they monitor and alert on unauthorized access, loitering, and other suspicious behaviors. Efficient data search capabilities support rapid identification of threats in massive video streams, even with detection errors or missing data. Together, these capabilities support reliable activity recognition across a variety of operational settings, from large areas to high-traffic zones.
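The next sketch shows how a loitering alert can be built on top of low-level tracker output, as mentioned above. It uses a hypothetical rule-based check over a single track: raise an alert once the track stays continuously inside a watch zone for at least a minimum dwell time. The `TrackPoint` type, the axis-aligned zone, and the dwell rule are illustrative assumptions, not Kitware's detector, which may rely on learned models.

```python
from dataclasses import dataclass


@dataclass
class TrackPoint:
    timestamp: float  # seconds
    x: float
    y: float


def in_zone(point, zone):
    """True if the point lies inside an axis-aligned zone (x_min, y_min, x_max, y_max)."""
    x_min, y_min, x_max, y_max = zone
    return x_min <= point.x <= x_max and y_min <= point.y <= y_max


def detect_loitering(track, zone, min_dwell_seconds):
    """True if the track stays continuously inside the zone for at least min_dwell_seconds."""
    entry_time = None
    for point in sorted(track, key=lambda p: p.timestamp):
        if in_zone(point, zone):
            if entry_time is None:
                entry_time = point.timestamp  # track just entered the zone
            if point.timestamp - entry_time >= min_dwell_seconds:
                return True
        else:
            entry_time = None  # leaving the zone resets the dwell clock
    return False


track = [TrackPoint(t, 5.0, 5.0) for t in (0.0, 10.0, 20.0, 30.0)]
print(detect_loitering(track, (0.0, 0.0, 10.0, 10.0), 25.0))  # True
```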

Cyber-Physical Systems

The physical environment presents a unique, ever-changing set of challenges to any sensing and analytics system. Kitware has designed and constructed state-of-the-art cyber-physical systems that perform onboard, autonomous processing to gather data and extract critical information. Computer vision and deep learning technology allow our sensing and analytics systems to overcome the challenges of a complex, dynamic environment. They are customized to solve real-world problems in aerial, ground, and underwater scenarios. These capabilities have been field-tested and proven successful in programs funded by R&D organizations such as DARPA, AFRL, and NOAA.

Dataset Collection and Annotation

The growth in deep learning has increased the demand for quality, labeled datasets needed to train models and algorithms. The power of these models and algorithms greatly depends on the quality of the training data available. Kitware has developed and cultivated dataset collection, annotation, and curation processes to build powerful capabilities that are unbiased and accurate, and not riddled with errors or false positives. Kitware can collect and source datasets and design custom annotation pipelines. We can annotate image, video, text and other data types using our in-house, professional annotators, some of whom have security clearances, or leverage third-party annotation resources when appropriate. Kitware also performs quality assurance that is driven by rigorous metrics to highlight when returns are diminishing. All of this data is managed by Kitware for continued use to the benefit of our customers, projects, and teams. Data collected or curated by Kitware includes the MEVA activity and MEVID person re-identification datasets, the VIRAT activity dataset, and the DARPA Invisible Headlights off-road autonomous vehicle navigation dataset.
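The paragraph above does not specify which quality assurance metrics are used. A common metric for bounding-box annotation is intersection-over-union (IoU) agreement between an annotator's boxes and a reviewer's boxes. This minimal sketch assumes boxes are already paired by object and uses an illustrative IoU threshold of 0.5.

```python
def box_iou(a, b):
    """Intersection-over-union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def agreement_rate(annotator_boxes, reviewer_boxes, iou_threshold=0.5):
    """Fraction of paired boxes whose IoU meets the threshold.

    Assumes both lists are paired by object (same index = same object).
    """
    matches = sum(
        1 for a, r in zip(annotator_boxes, reviewer_boxes) if box_iou(a, r) >= iou_threshold
    )
    return matches / len(reviewer_boxes)


print(agreement_rate([(0, 0, 2, 2), (0, 0, 2, 2)], [(0, 0, 2, 2), (1, 1, 3, 3)]))  # 0.5
```

Tracking a rate like this across successive review rounds is one way to see when additional review passes stop improving label quality.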

Generative AI, LLMs, VLMs

Through our extensive experience in AI and our early adoption of deep learning, we have developed state-of-the-art capabilities in many current areas of generative AI including vision-language models (VLMs), retrieval-augmented generation (RAG), unsupervised training of multimodal foundation models, human-aligned reasoning in LLMs, image generation and style transfer, and image/video captioning. Our methods enable powerful, domain-specific applications based on the latest breakthroughs in generative AI, such as sample-efficient methods to adapt LLMs for human-aligned decision-making in the medical triage domain.

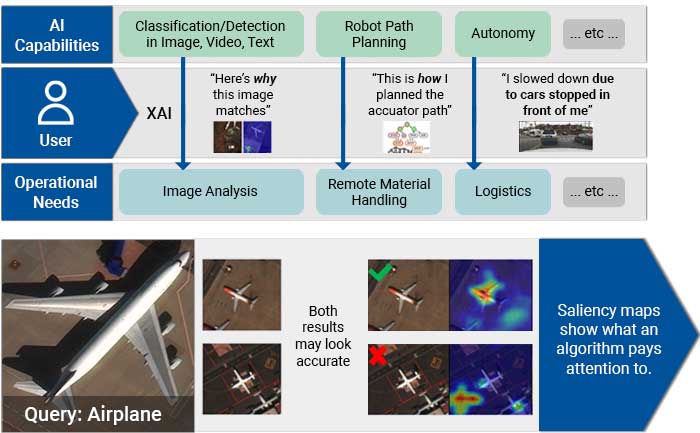

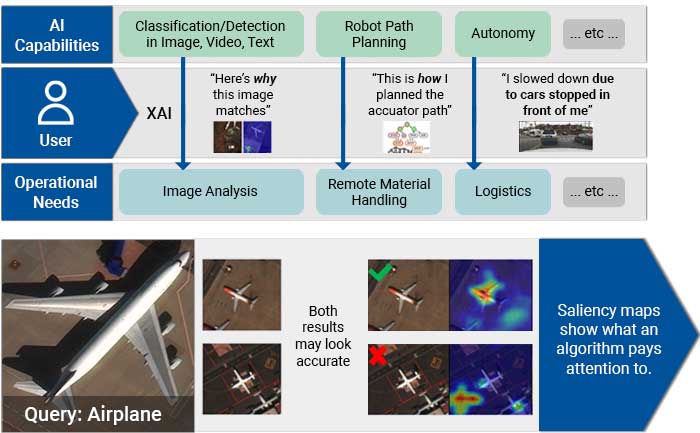

Responsible and Explainable AI

Integrating AI via human-machine teaming can greatly improve capabilities and competence as long as the team has a solid foundation of trust. To trust your AI partner, you must understand how the technology makes decisions and feel confident in those decisions. Kitware has developed powerful tools, such as the Explainable AI Toolkit (XAITK), to explore, quantify, and monitor the behavior of deep learning systems. Our team is also making deep neural networks more robust to previously unknown conditions by leveraging AI test and evaluation (T&E) tools such as the Natural Robustness Toolkit (NRTK). In addition, our team is looking beyond classic AI systems to address domain-independent novelty identification, characterization, and adaptation, so that systems can recognize when unknowns are introduced. We also value the need to understand the ethical concerns, impacts, and risks of using AI. That’s why Kitware is developing methods to understand, formulate, and test ethical reasoning algorithms for semi-autonomous applications. Kitware is proud to be part of the U.S. Artificial Intelligence Safety Institute Consortium (AISIC), a U.S. Department of Commerce consortium dedicated to advancing the development and deployment of safe, trustworthy AI.
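One simple, model-agnostic way to explore which image regions a model relies on is occlusion (sliding-window) saliency. Each patch is masked in turn, and the drop in the model's score is recorded. This sketch illustrates that general technique only. It does not use or reproduce the XAITK API, and `score_fn` stands in for any hypothetical model that maps an image to a scalar score.

```python
def occlusion_saliency(image, score_fn, patch, fill=0.0):
    """Black-box occlusion saliency for a 2D grayscale image (list of lists).

    For each non-overlapping patch x patch window, replace the pixels with `fill`,
    rescore the image, and record how much the model's score drops. Larger drops
    mark regions the model relies on more.
    """
    height, width = len(image), len(image[0])
    baseline = score_fn(image)
    saliency = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            occluded = [row[:] for row in image]  # copy so the input is untouched
            rows = range(top, min(top + patch, height))
            cols = range(left, min(left + patch, width))
            for y in rows:
                for x in cols:
                    occluded[y][x] = fill
            drop = baseline - score_fn(occluded)
            for y in rows:
                for x in cols:
                    saliency[y][x] = drop
    return saliency


# Toy "model" that only looks at the top-left pixel.
print(occlusion_saliency([[1.0, 1.0], [1.0, 1.0]], lambda img: img[0][0], patch=1))
# [[1.0, 0.0], [0.0, 0.0]]
```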

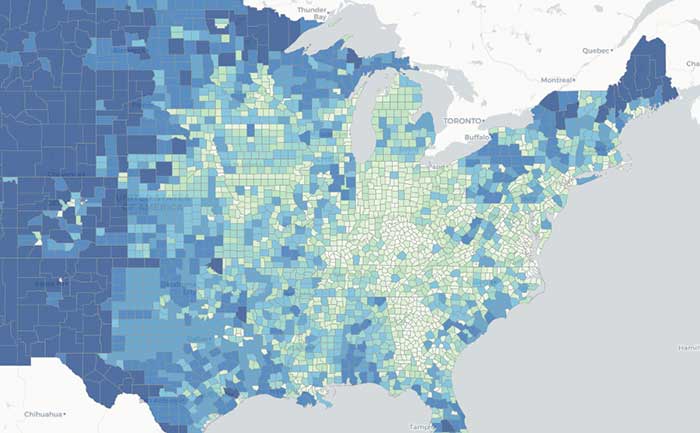

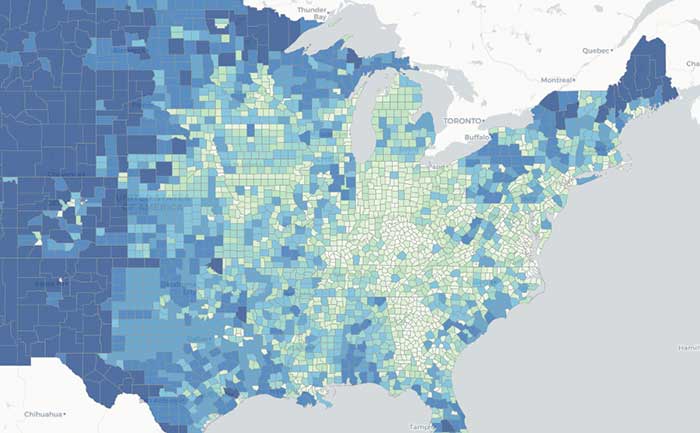

Geospatial Information Systems and Visualization

We offer advanced capabilities for geospatial analysis and visualization. We support a range of use cases from analyzing geolocated Twitter traffic, to processing and viewing complex climate models, to working with large satellite imagery datasets. We build AI systems for cloud-native geospatial data analytics at scale and then construct customized web-based interfaces to intuitively visualize and interact with these results. Our open source tools and expert staff provide full application solutions, linking raw datasets and geospatial analyses to custom web visualizations.

Multimedia Integrity Assurance

In the age of disinformation, it has become critical to validate the integrity and veracity of images, video, audio, and text sources. Because photo-manipulation and photo-generation techniques are evolving rapidly, we continuously develop algorithms that detect, attribute, and characterize disinformation and that can operate at scale on large data archives. These advanced AI algorithms allow us to detect inserted, removed, or altered objects, distinguish deep fakes from real images or videos, and identify deleted or inserted frames in videos at a level that exceeds human performance. We continue to extend this work through multiple government programs to detect manipulations in falsified media exploiting text, audio, images, and video.
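As a toy illustration of the frame-deletion problem mentioned above, a naive baseline flags transitions where the difference between consecutive frames is a statistical outlier. Such an outlier can indicate that frames were removed. This is explicitly not Kitware's method. Practical forensic detectors are learned and must also handle legitimate scene cuts and fast motion. Here frames are assumed to be flattened lists of pixel intensities, and the z-score threshold of 3.0 is an illustrative choice.

```python
import statistics


def frame_differences(frames):
    """Mean absolute pixel difference between consecutive frames (flattened lists)."""
    return [
        sum(abs(a - b) for a, b in zip(prev, curr)) / len(curr)
        for prev, curr in zip(frames, frames[1:])
    ]


def suspicious_transitions(frames, z_threshold=3.0):
    """Indices i where the jump from frame i to frame i + 1 is an unusually large outlier."""
    diffs = frame_differences(frames)
    if len(diffs) < 2:
        return []
    mean = statistics.mean(diffs)
    stdev = statistics.pstdev(diffs)
    if stdev == 0:
        return []  # perfectly uniform motion: nothing stands out
    return [i for i, d in enumerate(diffs) if (d - mean) / stdev > z_threshold]


# Smooth brightness ramp with an abrupt jump between frames 14 and 15.
frames = [[float(v)] for v in range(15)] + [[40.0]]
print(suspicious_transitions(frames))  # [14]
```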

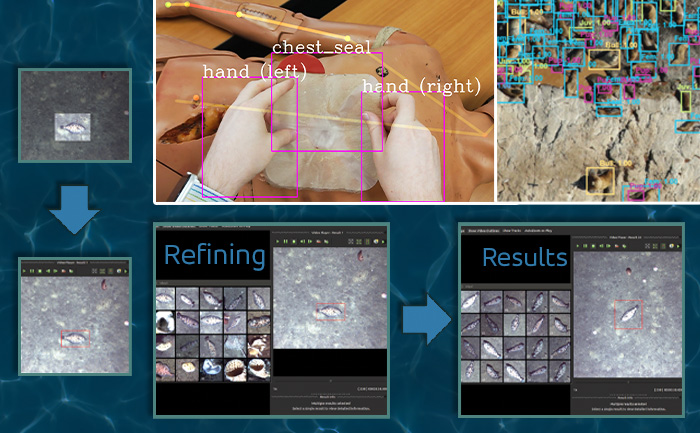

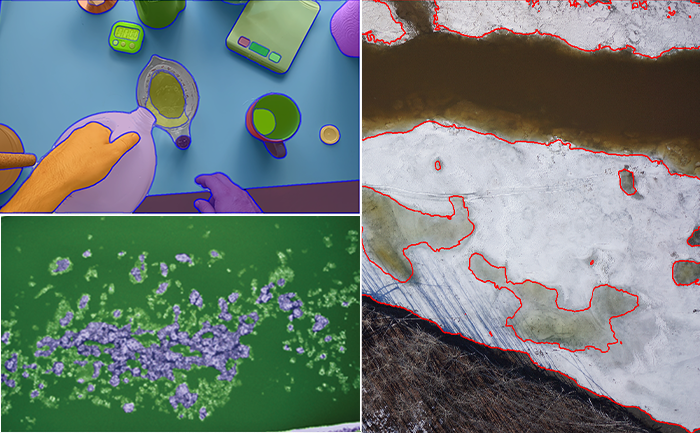

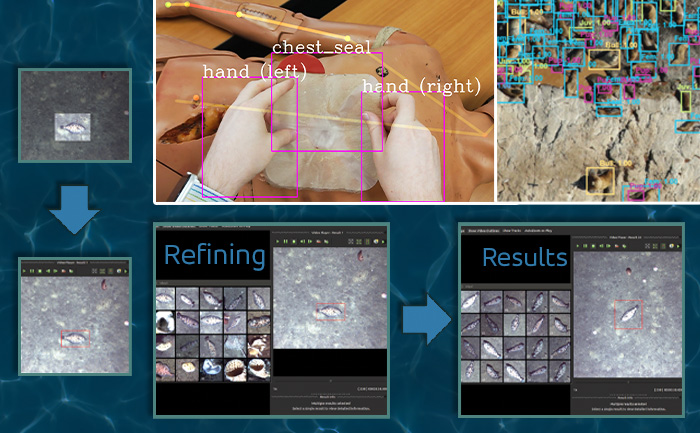

Interactive Artificial Intelligence and Human-Machine Teaming

DIY AI enables end users – analysts, operators, engineers – to rapidly build, test, and deploy novel AI solutions without expertise in machine learning or even computer programming. Using Kitware’s interactive DIY AI toolkits, you can easily and efficiently train object classifiers through interactive query refinement, without drawing any bounding boxes. You can also interactively improve existing capabilities so they work optimally on your data for tasks such as object tracking, object detection, and event detection. Our toolkits also allow you to perform customized, highly specific searches of large image and video archives powered by cutting-edge AI methods. Currently, our DIY AI toolkits, such as VIAME, are used by scientists to analyze environmental images and video. Our defense-related versions are being used to address multiple domains and are provided with unlimited rights to the government. These toolkits enable long-term operational capabilities even as methods and technology evolve.
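Interactive query refinement can be illustrated with Rocchio relevance feedback, a classic information-retrieval technique. The query vector is moved toward items the user marks as relevant and away from items marked irrelevant, and then the archive is re-ranked. The paragraph above does not say which refinement method VIAME or the other toolkits use, so Rocchio is a stand-in for illustration. Feature vectors are assumed to be precomputed, and the `alpha`, `beta`, and `gamma` weights are conventional illustrative defaults.

```python
def rocchio_update(query, positives, negatives, alpha=1.0, beta=0.75, gamma=0.15):
    """Refine a query feature vector from user feedback (Rocchio relevance feedback)."""
    dim = len(query)

    def centroid(vectors):
        if not vectors:
            return [0.0] * dim
        return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]

    pos = centroid(positives)
    neg = centroid(negatives)
    return [alpha * query[i] + beta * pos[i] - gamma * neg[i] for i in range(dim)]


def rank_archive(query, archive):
    """Return archive item ids ordered by dot-product score against the query."""
    scores = {item_id: sum(q * f for q, f in zip(query, feat)) for item_id, feat in archive.items()}
    return sorted(scores, key=scores.get, reverse=True)


archive = {"clip_1": [1.0, 0.0], "clip_2": [0.0, 2.0]}
query = rocchio_update([1.0, 0.0], positives=[[0.0, 1.0]], negatives=[[1.0, 0.0]])
print(rank_archive(query, archive))
```

Each round of user feedback produces a new query vector. Repeating the update lets a user steer retrieval toward a target concept without drawing bounding boxes.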

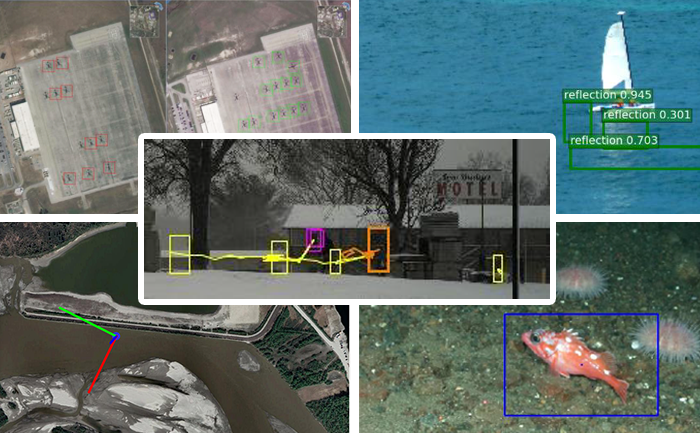

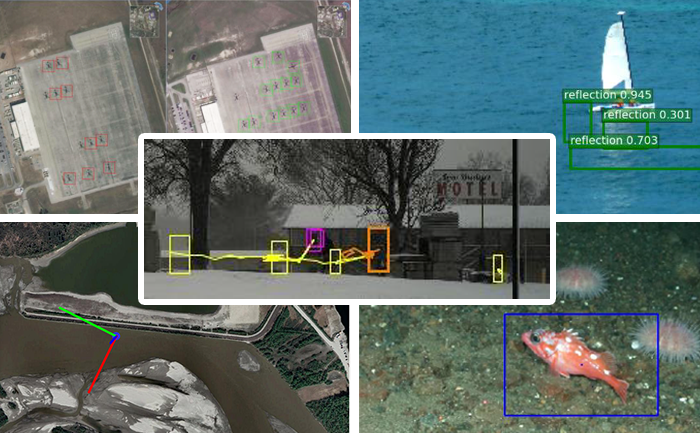

Object Detection and Classification

Our video object detection and tracking tools are the culmination of years of continuous government investment. Deployed operationally in various domains, our mature suite of trackers can identify and track moving objects in many types of intelligence, surveillance, and reconnaissance (ISR) data. This includes video from ground cameras, aerial platforms, underwater vehicles, robots, and satellites. These tools perform in challenging settings and address difficult factors such as low contrast, low resolution, moving cameras, occlusions, shadows, and high traffic density. They do so through multi-frame track initialization, track linking, reverse-time tracking, recurrent neural networks, and other techniques. Our trackers can perform difficult tasks including ground camera tracking in congested scenes, real-time multi-target tracking in full-field WAMI and OPIR, and tracking people in far-field, non-cooperative scenarios.
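A basic building block of tracking-by-detection is frame-to-frame data association, which matches existing tracks to new detections. The sketch below uses greedy IoU matching, one of the simplest association schemes. Kitware's trackers add far more (multi-frame initialization, track linking, reverse-time tracking, learned models), and none of that is shown here. The 0.3 IoU threshold is an illustrative assumption.

```python
def box_iou(a, b):
    """Intersection-over-union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - intersection
    return intersection / union if union > 0 else 0.0


def associate(tracks, detections, iou_threshold=0.3):
    """Greedily match existing tracks to new detections by IoU.

    `tracks` maps track_id -> last known box; `detections` is a list of boxes.
    Returns (matches, unmatched), where matches maps track_id -> detection index
    and unmatched lists detection indices that could start new tracks.
    """
    candidates = [
        (box_iou(box, det), track_id, det_index)
        for track_id, box in tracks.items()
        for det_index, det in enumerate(detections)
    ]
    candidates.sort(key=lambda c: c[0], reverse=True)  # best overlaps first
    matches, used_tracks, used_dets = {}, set(), set()
    for iou, track_id, det_index in candidates:
        if iou < iou_threshold:
            break  # remaining pairs overlap too little
        if track_id in used_tracks or det_index in used_dets:
            continue
        matches[track_id] = det_index
        used_tracks.add(track_id)
        used_dets.add(det_index)
    unmatched = [i for i in range(len(detections)) if i not in used_dets]
    return matches, unmatched


tracks = {1: (0, 0, 10, 10), 2: (20, 20, 30, 30)}
detections = [(21, 21, 31, 31), (1, 1, 11, 11), (50, 50, 60, 60)]
print(associate(tracks, detections))  # ({1: 1, 2: 0}, [2])
```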

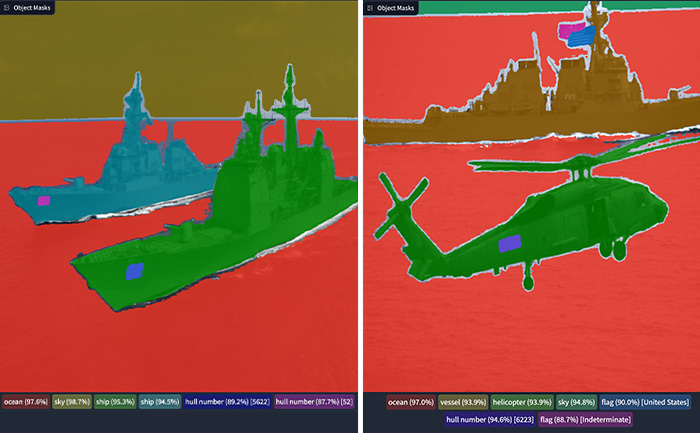

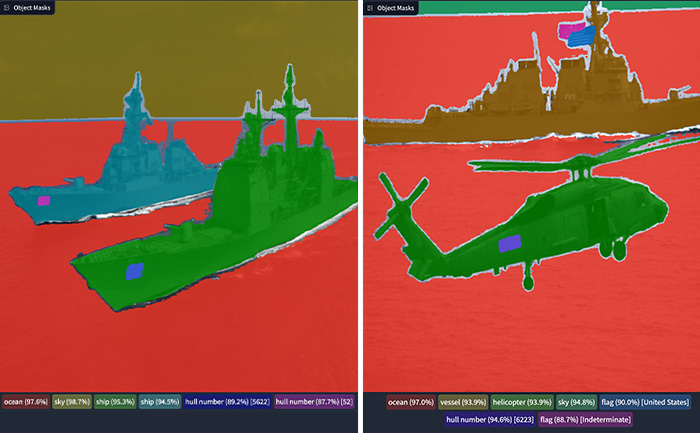

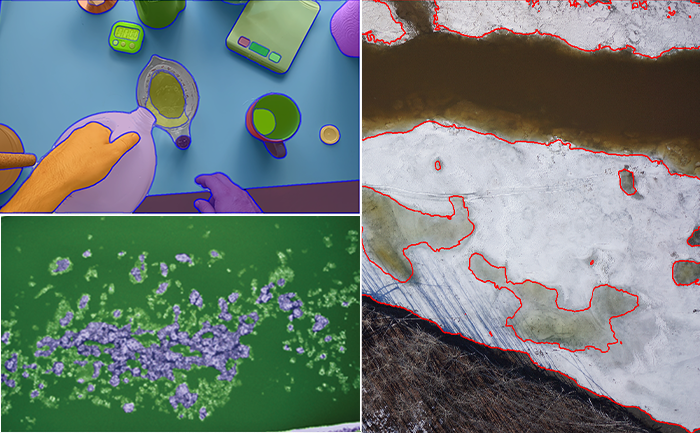

Semantic Segmentation

Kitware’s knowledge-driven scene understanding capabilities use deep learning techniques to accurately segment scenes into object types. In video, our unique approach defines objects by behavior rather than appearance, so we can identify areas with similar behaviors. By observing mover activity, our capabilities can segment a scene into functional object categories that may not be distinguishable by appearance alone. These capabilities are unsupervised, so they automatically learn new functional categories without any manual annotations. Semantic scene understanding improves downstream capabilities such as threat detection, anomaly detection, change detection, 3D reconstruction, and more.
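A toy version of unsupervised, behavior-based segmentation might summarize each grid cell by how movers use it, then cluster the cells with k-means. For example, busy cells with fast movers behave like roads, while busy cells with slow movers behave like walkways. The sketch assumes hypothetical per-cell features (visit rate, mean speed) computed from tracks. Plain k-means stands in for Kitware's deep learning approach, which is not shown here.

```python
import math


def kmeans(points, k, iterations=20):
    """Plain k-means with deterministic farthest-point initialization.

    `points` is a list of equal-length feature tuples; returns one cluster label per point.
    """
    centroids = [points[0]]
    while len(centroids) < k:
        # Next centroid: the point farthest from its nearest existing centroid.
        farthest = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(farthest)
    labels = [0] * len(points)
    for _ in range(iterations):
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        for j in range(k):
            members = [p for p, label in zip(points, labels) if label == j]
            if members:
                centroids[j] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return labels


def segment_by_behavior(cell_features, k=3):
    """Group grid cells by activity features; returns {cell: cluster_label}."""
    cells = list(cell_features)
    labels = kmeans([cell_features[c] for c in cells], k)
    return dict(zip(cells, labels))


# Hypothetical (visits per hour, mean speed in m/s) for six grid cells.
features = {
    (0, 0): (50.0, 12.0), (0, 1): (48.0, 11.0),  # road-like: busy and fast
    (1, 0): (5.0, 1.2), (1, 1): (6.0, 1.0),      # walkway-like: slow movers
    (2, 0): (0.0, 0.0), (2, 1): (0.0, 0.0),      # unused space
}
print(segment_by_behavior(features))
```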

Super Resolution and Enhancement

Images and videos often come with unintended degradation – lens blur, sensor noise, environmental haze, compression artifacts, etc., or sometimes the relevant details are just beyond the resolution of the imagery. Kitware’s super-resolution techniques enhance single or multiple images to produce higher-resolution, improved images. We use novel methods to compensate for widely spaced views and illumination changes in overhead imagery, particulates and light attenuation in underwater imagery, and other challenges in a variety of domains. Our experience includes both powerful generative AI methods and simpler data-driven methods that avoid hallucination. The resulting higher-quality images enhance detail, enable advanced exploitation, and improve downstream automated analytics, such as object detection and classification.

Interested in learning more? Let’s talk!

We are always looking for collaboration opportunities where we can apply our automated image and video analysis expertise. Let’s schedule a meeting to talk about your project and see how we can apply our open source technology.